
An engineer’s guide to mobile biometrics: Android Keystore pitfalls and best practices

Engineering

Jul 10, 2023

Author: Jordan Haven

At Stytch, we uncovered a variety of interesting challenges and oft-overlooked choices when building our mobile biometrics product. Each problem required us to think deeply about security implications, architecture, and mobile development patterns.

We discovered, unsurprisingly, that when it comes to mobile biometrics, the devil really is in the details. This is the third and final post of a three-part series on mobile biometric authentication.

This content is intended for engineers or folks interested in diving deeper into mobile biometrics implementation.

In this post, we’ll build on the result-based architecture concepts of the last post and look specifically at some of the idiosyncrasies of the Android ecosystem, and how to prepare for and build around those.

Part one | An engineer's guide to mobile biometrics: step-by-step

Part two | An engineer's guide to mobile biometrics: event- vs result-based

Part three | An engineer's guide to mobile biometrics: Android Keystore pitfalls and best practices

Table of contents

Insecure mobile biometrics in the wild

Context on the Android ecosystem

Recap: result-based architecture and key configuration

The CryptoObject

Tink, BouncyCastle, and the Keystore

Android Keystore support

Empower the developer

Concluding thoughts

Insecure mobile biometrics in the wild

Given how mature the Android ecosystem is, you might assume there would be a well-defined and easy-to-follow path to build secure authentication for mobile applications. You might also assume that most security-focused products (like banking applications!) would be using best practices to guard against common vulnerabilities and threats. But when it comes to authentication, the only way to validate your assumptions about what is or isn’t secure is to test them yourself.

As part of our research into the Android ecosystem when building our mobile biometrics product, we tested biometric authentication flows for dozens of popular mobile applications. Our testing focused on applications with large user bases and a variety of use cases, including healthcare, social media, sportsbooks, insurance, trading, banking, and other high-security industries.

We found that 50% of the Android apps we tested failed to meet OWASP’s AUTH-2 security standards. And according to F-Secure Labs, “about 70% of the assessed [Android] applications that utilized fingerprint authentication were unlocked without even requiring a valid fingerprint.”

Context on the Android ecosystem

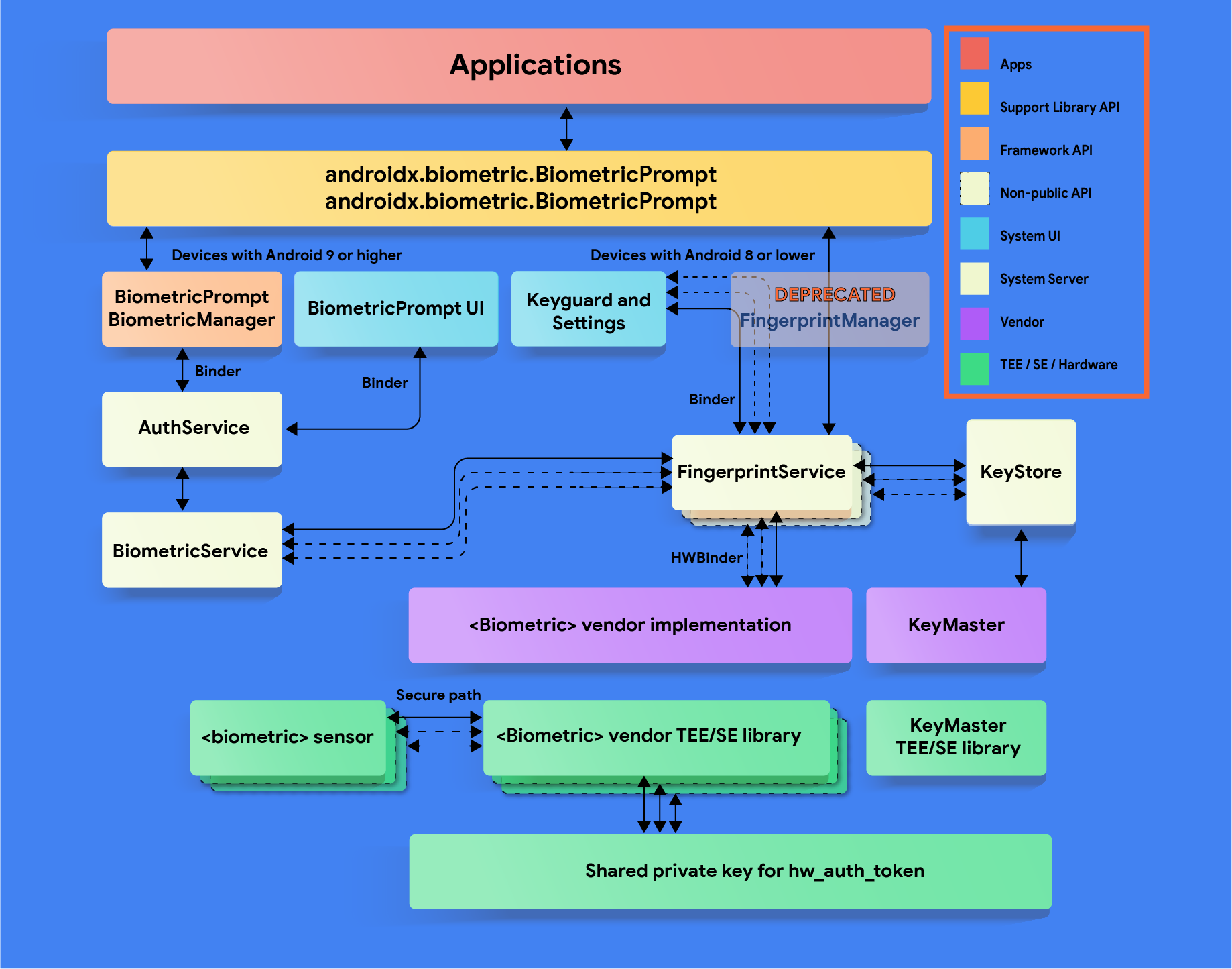

Android biometric stack (source)

You may still be wondering what makes Android so special as to warrant its own dedicated post in our biometric series. The answer is that it’s fraught with pitfalls due to a complicated history.

Unlike Apple’s iOS, the Android ecosystem was initially designed to grow via open, fairly unregulated access. The thought was that the easier Google made it for Original Equipment Manufacturers (OEMs) (think Samsung, Motorola, LG, OnePlus, etc.) to build on the Android OS, the quicker Android could capture mobile market share.

As a result, for the first decade or so of Android’s existence, what it meant to “be an Android” was a bit of a wild west. Each OEM could make significant software modifications and tweaks to suit their product line, with little oversight or regulation from Google. As a downstream effect of this open approach, whenever Google pushed a patch or over-the-air (OTA) update, many OEMs couldn’t deploy it without significant adaptations and resourcing, creating a very uneven and unpredictable ecosystem with idiosyncrasies and bugs specific to each phone manufacturer.

Around Android 8, Google began to tighten control over how OEMs could modify the Android OS, restricting what could be adapted and what could not. But on devices still running Android 2 through 7, quirks still abound.

The Android Keystore

Of these Android bugs and idiosyncrasies, our team was especially focused on those that affected the Android Keystore, the system that locally stores and handles cryptographic keys. It is an essential component of a secure, effective mobile biometric authentication flow.

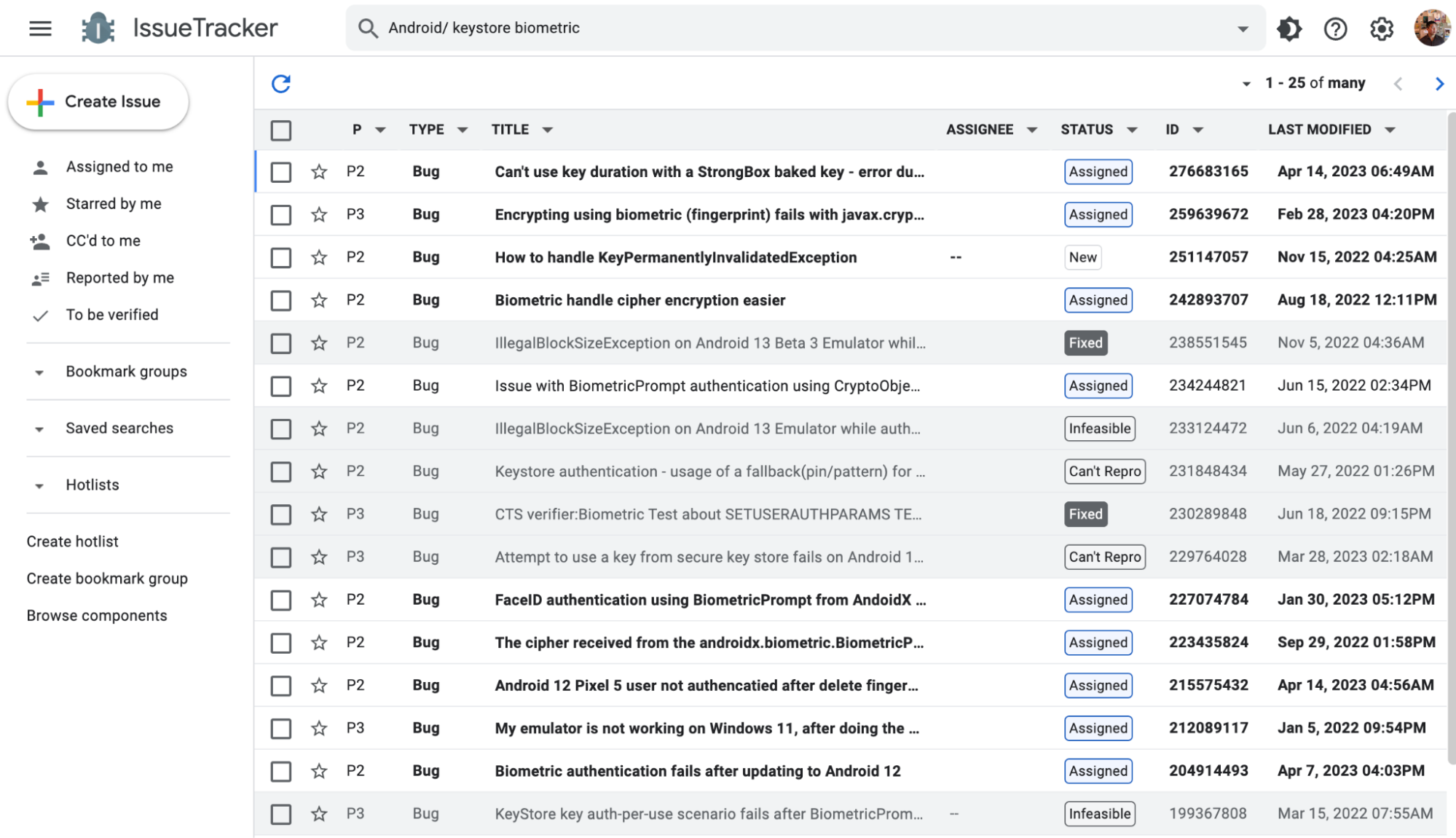

Android IssueTracker results for "keystore biometrics"

But in a diverse, open source Android ecosystem, devices may not support the Keystore – which subjects all applications on that device to security vulnerabilities like account takeover. It’s impossible to prove or disprove that all Android phones will work reliably with the Keystore. Unfortunately, developers must factor this severe possibility into their application’s auth logic in order to be secure.

We’ll show you the best practices on implementing mobile biometrics in both scenarios: with and without the Android Keystore.

Recap: result-based architecture and key configuration

In the previous post we discussed the differences between what we’re calling event- and result-based biometric architectures. We’ve already established that a result-based architecture is more secure than an event-based one, but there’s slightly more to it than that. When using a result-based architecture, you also need to think about:

- How the key is configured.

- What you're doing with that key once the biometric prompt has passed.

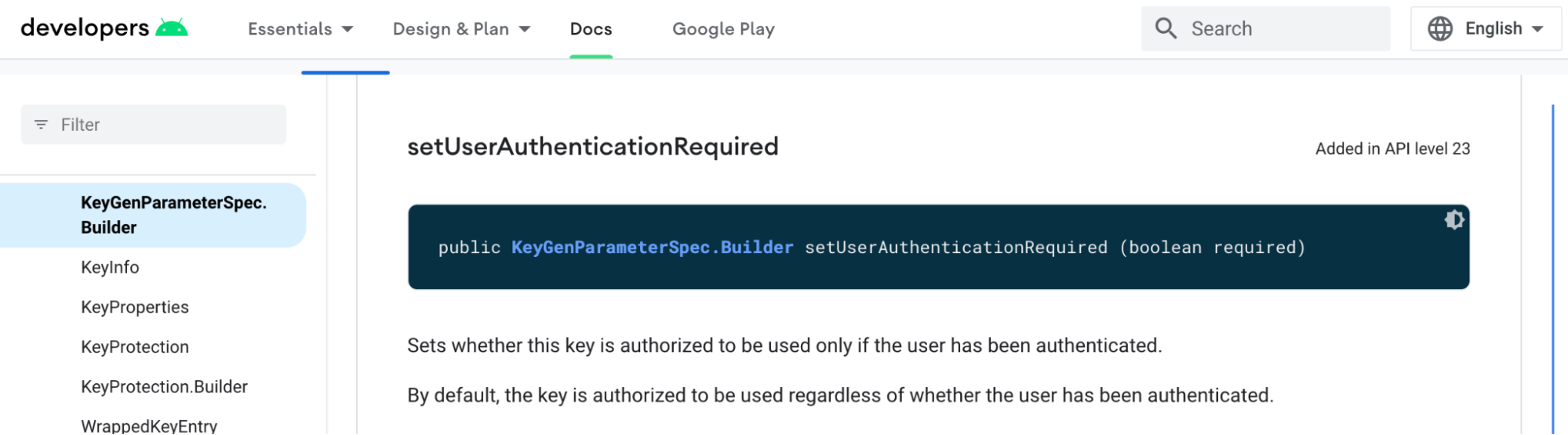

When configuring the secret key that you will use to encrypt and decrypt sensitive information, you can choose whether the key may be used without user authentication. By default, the key is authorized for use regardless of whether the user has authenticated, but Android lets you enforce stronger behavior by setting UserAuthenticationRequired (via setUserAuthenticationRequired(true) on the KeyGenParameterSpec passed to the KeyGenerator).

Android Keystore docs for KeyGenerator and UserAuthenticationRequired

When UserAuthenticationRequired is set, there are some guarantees that the device makes in order to allow the key to be used:

- The key can only be generated if a lock screen is configured.

- At least one biometric must be enrolled (on Android 11 and greater).

- The use of the key must be authorized by the user after authenticating with secure lock screen credentials such as a password/PIN/pattern or biometric.

- The key will become irreversibly invalidated once the secure lock screen is disabled, or when the secure lock screen is forcibly reset, or (on Android 11 and greater) once a new biometric is enrolled or all biometrics are deleted.
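As a rough sketch of the configuration described above (not Stytch’s actual code), a key that requires user authentication can be requested from the Android Keystore as follows. The class name, method name, and `alias` parameter are illustrative assumptions, and AES-GCM is simply one reasonable choice; this post does not say which algorithm the SDK uses for this key. The snippet needs the Android framework (API 23+) to compile and run.

```java
import android.security.keystore.KeyGenParameterSpec;
import android.security.keystore.KeyProperties;

import java.security.GeneralSecurityException;

import javax.crypto.KeyGenerator;
import javax.crypto.SecretKey;

// Hypothetical helper, for illustration only.
final class BiometricKeyFactory {
    private BiometricKeyFactory() {}

    /**
     * Creates an AES key inside the Android Keystore that cannot be used
     * until the user has authenticated. "alias" is a caller-chosen name.
     */
    static SecretKey createUserAuthenticatedKey(String alias)
            throws GeneralSecurityException {
        KeyGenParameterSpec spec = new KeyGenParameterSpec.Builder(
                alias,
                KeyProperties.PURPOSE_ENCRYPT | KeyProperties.PURPOSE_DECRYPT)
                .setBlockModes(KeyProperties.BLOCK_MODE_GCM)
                .setEncryptionPaddings(KeyProperties.ENCRYPTION_PADDING_NONE)
                .setKeySize(256)
                // The important line: this defaults to false.
                .setUserAuthenticationRequired(true)
                .build();

        KeyGenerator generator = KeyGenerator.getInstance(
                KeyProperties.KEY_ALGORITHM_AES, "AndroidKeyStore");
        // Key creation fails with an exception when the device has no
        // secure lock screen configured.
        generator.init(spec);
        return generator.generateKey();
    }
}
```

A Cipher initialised with this key is then wrapped in a BiometricPrompt.CryptoObject and handed to the biometric prompt, so the key only becomes usable once the user has authenticated.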

It’s this step where many widely used mobile applications, mentioned at the beginning of this post, failed to implement secure mobile biometrics. Without UserAuthenticationRequired, they lack the strong security guarantees around how their secret key is used, thus exposing their user bases to account takeover via mobile biometrics.

Once you’ve configured your key to require user authentication, it’s also important to consider how you use that key. If you’re not performing a cryptographic operation on your private data with that key, and instead are just using the presence of a CryptoObject to assume user authentication, you’re no better off than if you were using an event-based approach. Both the event-based approach and the result-based-but-not-doing-cryptography approach are susceptible to simple attacks like these:

- https://github.com/ax/android-fingerprint-bypass

- https://github.com/WithSecureLabs/android-keystore-audit/blob/master/frida-scripts/fingerprint-bypass-via-exception-handling.js

Want mobile biometrics for your app? Switch to Stytch.

Pricing that scales with you • No feature gating • All of the auth solutions you need plus fraud & risk

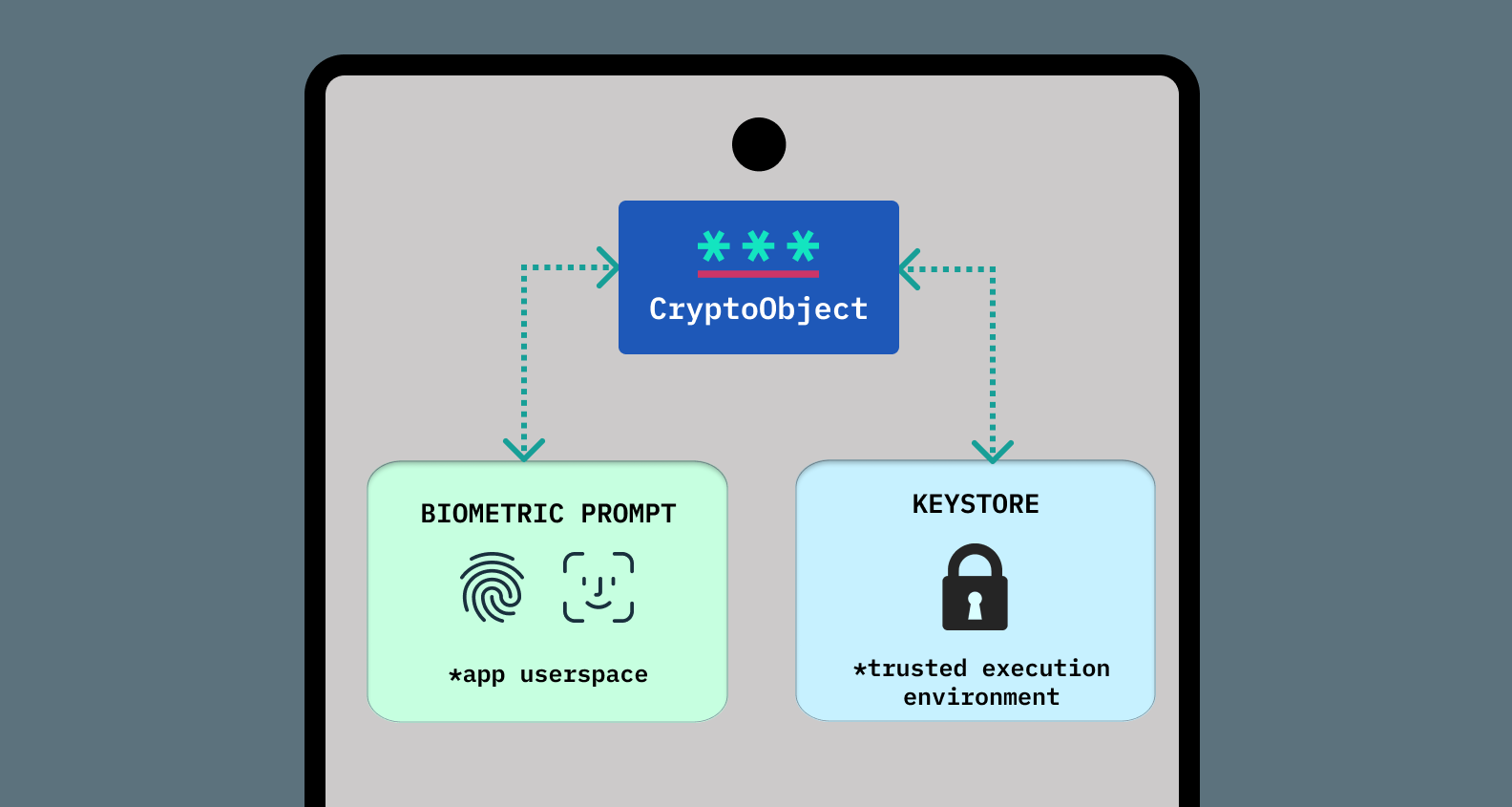

The CryptoObject

But what does it mean to use the CryptoObject to encrypt your private data? Well, once you’ve securely configured your key, and it’s only accessible after passing user authentication, the CryptoObject gives you one opportunity to perform a cryptographic operation (encryption or decryption), which you should use either to encrypt your private data before persisting it to the device or to decrypt your previously encrypted private data.

For Stytch’s biometric implementation, we use a public/private keypair to sign and verify challenges provided by our backend. What this means is that a challenge signed with our private key can be verified by anyone holding the matching public key, but only the holder of the private key can produce a valid signature. We must therefore safely persist the private key to the device for future use.
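To make the sign-and-verify relationship concrete, here is a minimal sketch that runs on a desktop JVM. It uses the JDK’s built-in Ed25519 support (Java 15 and later) as a stand-in for BouncyCastle, and the class name, method names, and challenge strings are invented for illustration. It is not the Stytch SDK’s code.

```java
import java.nio.charset.StandardCharsets;
import java.security.GeneralSecurityException;
import java.security.KeyPair;
import java.security.KeyPairGenerator;
import java.security.PrivateKey;
import java.security.PublicKey;
import java.security.Signature;

// Hypothetical helper, for illustration only.
final class ChallengeSigning {
    private ChallengeSigning() {}

    /** Generates a fresh Ed25519 keypair (requires Java 15+). */
    static KeyPair newKeyPair() {
        try {
            return KeyPairGenerator.getInstance("Ed25519").generateKeyPair();
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException("Ed25519 is unavailable on this JVM", e);
        }
    }

    /** Client side: signs the server's challenge with the private key. */
    static byte[] sign(PrivateKey privateKey, String challenge) {
        try {
            Signature signer = Signature.getInstance("Ed25519");
            signer.initSign(privateKey);
            signer.update(challenge.getBytes(StandardCharsets.UTF_8));
            return signer.sign();
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException("Signing failed", e);
        }
    }

    /** Server side: checks the signature using only the registered public key. */
    static boolean verify(PublicKey publicKey, String challenge, byte[] signature) {
        try {
            Signature verifier = Signature.getInstance("Ed25519");
            verifier.initVerify(publicKey);
            verifier.update(challenge.getBytes(StandardCharsets.UTF_8));
            return verifier.verify(signature);
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException("Verification failed", e);
        }
    }
}
```

The server never needs the private key: it stores the public key at registration and later calls something like `verify` on each signed challenge.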

The CryptoObject brokers access between the stored, encrypted private key and the biometric prompt.

When registering a new biometric authentication with Stytch, we:

- Generate a biometrically-secure key, as described above, in the Android Keystore.

- Generate an Ed25519 keypair (using BouncyCastle).

- Register the public key with our servers.

- Use the private key to sign a challenge string and authenticate that with our servers.

- Use the CryptoObject returned from the biometric prompt to access the biometrically-secure key, and spend our one cryptographic operation on encrypting the private key.

- Encrypt the key again (with Tink), and persist it to SharedPreferences.

Authenticating with biometrics follows a similar flow, but in reverse:

- Use Tink to remove the outer (second-pass) layer of encryption from the private key stored in SharedPreferences.

- Use the CryptoObject returned from the biometric prompt to access the biometrically-secure key, which we use to decrypt the private key recovered in the previous step.

- Derive the public key from the private key.

- Request a challenge from the Stytch servers.

- Use the decrypted private key to sign the challenge and authenticate that with our servers.

When it comes to persisting your now-encrypted private data, you have a few options on Android: saving it to a file in internal/external storage, in a database, or to SharedPreferences (a simple key-value store). For our use, we’re simply storing an encrypted private key, so SharedPreferences makes the most sense; it avoids having to do any file and string parsing, and avoids the unnecessary overhead of a full database.

On Android, we also encrypt all data before saving it to SharedPreferences (using Tink), so your private key is protected with two layers of encryption, using two different keys. This means that even if the SharedPreferences master key is compromised (more on that later), the Ed25519 private key is still encrypted by the biometrically-locked key.
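The layering can be illustrated on a desktop JVM with two ordinary AES-GCM keys. This is only a sketch: `innerKey` stands in for the biometrically-locked Keystore key and `outerKey` for the Tink-managed SharedPreferences key, and the class and method names are made up for the example. Neither Tink nor the Android Keystore is involved.

```java
import java.nio.ByteBuffer;
import java.security.GeneralSecurityException;
import java.security.SecureRandom;

import javax.crypto.Cipher;
import javax.crypto.KeyGenerator;
import javax.crypto.SecretKey;
import javax.crypto.spec.GCMParameterSpec;

// Hypothetical helper, for illustration only.
final class TwoLayerEncryption {
    private static final int IV_BYTES = 12;
    private static final int TAG_BITS = 128;
    private static final SecureRandom RANDOM = new SecureRandom();

    private TwoLayerEncryption() {}

    static SecretKey newAesKey() {
        try {
            KeyGenerator generator = KeyGenerator.getInstance("AES");
            generator.init(256);
            return generator.generateKey();
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException(e);
        }
    }

    /** Encrypts with AES-GCM and returns iv || ciphertext. */
    static byte[] encrypt(SecretKey key, byte[] plaintext) {
        try {
            byte[] iv = new byte[IV_BYTES];
            RANDOM.nextBytes(iv);
            Cipher cipher = Cipher.getInstance("AES/GCM/NoPadding");
            cipher.init(Cipher.ENCRYPT_MODE, key, new GCMParameterSpec(TAG_BITS, iv));
            byte[] ciphertext = cipher.doFinal(plaintext);
            return ByteBuffer.allocate(iv.length + ciphertext.length)
                    .put(iv).put(ciphertext).array();
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException(e);
        }
    }

    /** Reverses encrypt(); throws if the key is wrong or the data was altered. */
    static byte[] decrypt(SecretKey key, byte[] ivAndCiphertext)
            throws GeneralSecurityException {
        Cipher cipher = Cipher.getInstance("AES/GCM/NoPadding");
        cipher.init(Cipher.DECRYPT_MODE, key,
                new GCMParameterSpec(TAG_BITS, ivAndCiphertext, 0, IV_BYTES));
        return cipher.doFinal(ivAndCiphertext, IV_BYTES, ivAndCiphertext.length - IV_BYTES);
    }

    /** Registration: inner (biometric) layer first, then the outer layer. */
    static byte[] store(SecretKey innerKey, SecretKey outerKey, byte[] privateKey) {
        return encrypt(outerKey, encrypt(innerKey, privateKey));
    }

    /** Authentication: peel the outer layer, then the inner one. */
    static byte[] load(SecretKey innerKey, SecretKey outerKey, byte[] stored)
            throws GeneralSecurityException {
        return decrypt(innerKey, decrypt(outerKey, stored));
    }
}
```

An attacker who obtains only `outerKey` can undo one layer, but what remains is still ciphertext under `innerKey`.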

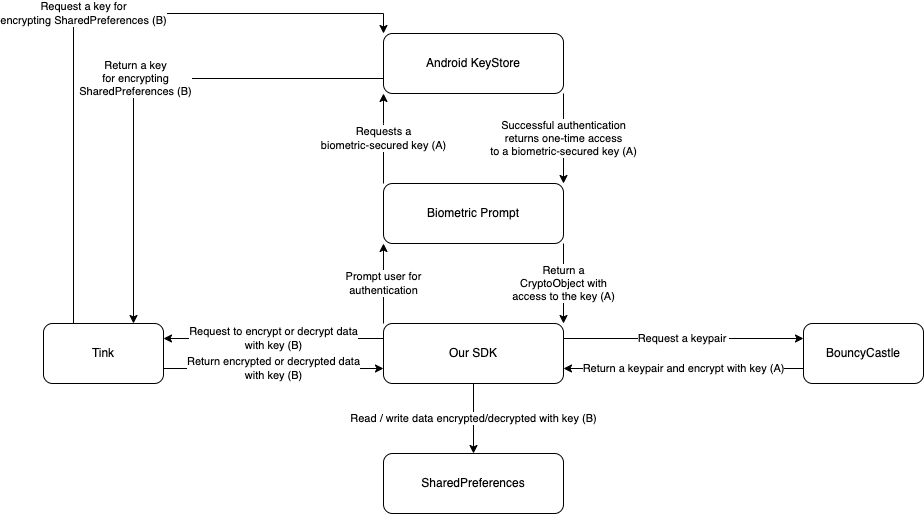

A high-level diagram of how our flow uses the Android Keystore and SharedPreferences looks something like this:

Tink, BouncyCastle, and the Keystore

As mentioned above, we use a library called BouncyCastle for generating Ed25519 keypairs and Tink for encrypting data in SharedPreferences.

BouncyCastle and Tink are both industry standard, open source libraries for performing cryptographic operations. They can perform similar functions, but due to a limitation in Tink around support for our chosen public/private keypair implementation (Ed25519), we chose to augment our existing Tink infrastructure with BouncyCastle specifically for our biometrics product.

Tink, which is a Google product, is a multi-language, cross-platform cryptography solution which integrates with both on-device (Android Keystore, iOS Keychain) and online (Amazon KMS, Google Cloud KMS) keystores. From the beginning of the Stytch Android SDK, we’ve used Tink to encrypt all data in SharedPreferences using an AES-256 key saved in the Android Keystore. When it came time to implement biometrics, we initially turned to our existing Tink implementation; but while Tink theoretically supports Ed25519 keypairs, that functionality is provided within a package that is not recommended for public consumption.

Enter BouncyCastle. BouncyCastle is a 20+ year strong, actively supported cryptography library available for both Java and C#, and a stripped-down version comes natively bundled on Android devices. On early Android versions (pre-4.0), the bundled copy caused a namespace conflict when applications tried to ship a full or newer version as a dependency, but this is no longer a problem on our supported platforms (Android 6.0+). BouncyCastle officially supports generating and interacting with Ed25519 keypairs, and given its long track record, it was a no-brainer to choose for our biometrics product.

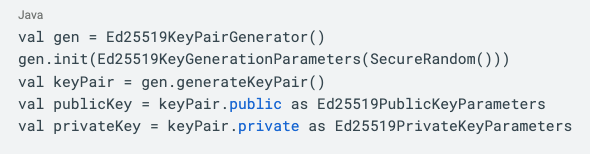

Generating an Ed25519 keypair with BouncyCastle is easy:

Once we have this keypair, we can Base64 encode it, register the public key with our servers, encrypt the private key with a biometrically-secured key from the Android Keystore, and save it to SharedPreferences, where it is encrypted a second time by Tink.

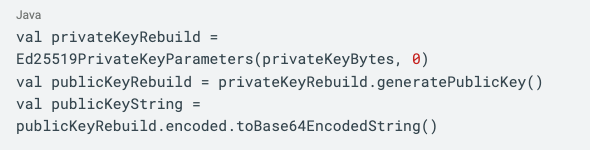

On authentication flows, we make one decryption pass to load the private key from SharedPreferences (using Tink), then decrypt it again using the biometrically-secured key from Android Keystore. Once we have the unencrypted private key byte array, we can derive the public key from it, like so:
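In text form, the two BouncyCastle steps described in this section (generating a keypair, then later deriving the public key from the stored private key bytes) look roughly like the following. This is a sketch built on BouncyCastle’s lightweight API (`org.bouncycastle.crypto`), not the Stytch SDK’s code; the class and method names are invented for illustration, and it needs the BouncyCastle jar on the classpath.

```java
import org.bouncycastle.crypto.AsymmetricCipherKeyPair;
import org.bouncycastle.crypto.generators.Ed25519KeyPairGenerator;
import org.bouncycastle.crypto.params.Ed25519KeyGenerationParameters;
import org.bouncycastle.crypto.params.Ed25519PrivateKeyParameters;
import org.bouncycastle.crypto.params.Ed25519PublicKeyParameters;

import java.security.SecureRandom;

// Hypothetical helper, for illustration only.
final class Ed25519Sketch {
    private Ed25519Sketch() {}

    /** Registration: generate a keypair and return the raw private key bytes. */
    static byte[] generatePrivateKeyBytes() {
        Ed25519KeyPairGenerator generator = new Ed25519KeyPairGenerator();
        generator.init(new Ed25519KeyGenerationParameters(new SecureRandom()));
        AsymmetricCipherKeyPair pair = generator.generateKeyPair();
        return ((Ed25519PrivateKeyParameters) pair.getPrivate()).getEncoded();
    }

    /** Authentication: rebuild the private key and derive its public key. */
    static byte[] derivePublicKeyBytes(byte[] privateKeyBytes) {
        Ed25519PrivateKeyParameters privateKey =
                new Ed25519PrivateKeyParameters(privateKeyBytes, 0);
        Ed25519PublicKeyParameters publicKey = privateKey.generatePublicKey();
        return publicKey.getEncoded();
    }
}
```

Because the public key can always be recomputed this way, only the private key bytes need to be encrypted and persisted on the device.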

Now that we have a public key and a private key, we can request the code challenge from the Stytch servers (with the public key), and sign and authenticate it (with the private key).

Android Keystore support

There is one big GOTCHA when it comes to Tink and the Android Keystore that we need to discuss: some devices may not have a functioning Keystore, and in those cases, Tink deactivates the Keystore and stores its keys in the application sandbox. From the Tink documentation:

"When Android Keystore is disabled or otherwise unavailable, keysets will be stored in cleartext. This is not as bad as it sounds because keysets remain inaccessible to any other apps running on the same device. Moreover, as of July 2020, most active Android devices support either full-disk encryption or file-based encryption, which provide strong security protection against key theft even from attackers with physical access to the device."

Tink has a self-test that it runs at runtime to determine whether or not the Keystore is “reliable”, and exposes a helper method to indicate whether or not it is using the Keystore.

In order to fully secure an encryption key behind a biometric authentication, we have to use the Android Keystore, and do so directly, regardless of whether or not Tink is using it. And it’s that key, which is always saved in the Keystore, that is used as the first-pass encryption of the private key.

Since we only use Tink for encrypting the already-encrypted private key bytes, even if the application sandbox were compromised, and the Tink master key were extracted, all an attacker would gain would be an encrypted string that cannot be decrypted unless they also were to break the Android Keystore itself.

Empower the developer

But the Keystore being potentially unreliable is still concerning, since we need the Keystore to biometrically secure that first-pass encryption key/second-pass decryption key.

So what do we do if the Keystore isn’t available? Empower the developer with the information to make a choice.

First and foremost, developers need to know the security and functionality implications laid out above. To support that, our SDK makes it easy to tell whether a device passes the self-test, and forces developers to opt in to using biometrics on a device without a reliable Keystore.

Developers can call the StytchClient.biometrics.isUsingKeystore() method to determine if the Keystore is reliable. If this returns false, the safest thing to do is disable biometric registrations for that device.

In addition, when attempting a biometric registration we internally make the same check to determine Keystore reliability and, by default, reject the registration with a NOT_USING_KEYSTORE error before doing anything else. If a developer still wants to attempt biometric registration, they must explicitly set allowFallbackToCleartext to true in the Biometrics.RegistrationParameters.

If allowFallbackToCleartext is set to true, then the methods will succeed regardless of the device’s access to the Keystore. If the parameter is set to false, then the SDK will verify that the device has a working implementation of Keystore before proceeding. If it does not, then the attempt to register or authenticate with biometrics will fail. The SDK exposes mechanisms for developers to only show biometric options if the Keystore is working, and defaults allowFallbackToCleartext to false so that developers must intentionally opt in if they are allowing this functionality.
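The decision described above boils down to a small piece of logic. The sketch below models it with hypothetical names (`KeystoreGate`, `Decision`, `evaluate`); it illustrates the behaviour and is not the Stytch SDK’s implementation or API.

```java
// Hypothetical model of the opt-in check, for illustration only.
final class KeystoreGate {
    enum Decision { PROCEED, NOT_USING_KEYSTORE }

    private KeystoreGate() {}

    /**
     * @param keystoreReliable         result of the Keystore self-test
     * @param allowFallbackToCleartext explicit developer opt-in
     */
    static Decision evaluate(boolean keystoreReliable, boolean allowFallbackToCleartext) {
        if (keystoreReliable || allowFallbackToCleartext) {
            return Decision.PROCEED;
        }
        return Decision.NOT_USING_KEYSTORE;
    }

    /** Cautious default: no fallback unless the developer asks for it. */
    static Decision evaluate(boolean keystoreReliable) {
        return evaluate(keystoreReliable, false);
    }
}
```

The only way to reach `PROCEED` on a device with an unreliable Keystore is to pass `true` explicitly, which is what makes the risky path a deliberate choice rather than an accident.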

We believe that providing options and cautious defaults empowers developers to make the best decisions for their applications, while encouraging the safest practice.

Concluding thoughts on building mobile biometric authentication

Users are becoming more and more comfortable securing their accounts with biometrics and expect it in a wider variety of applications. Biometrics are a quick, trusted feature that reduces friction around authentication.

With this blog series, we hope we’ve helped you understand the many steps involved in building mobile biometrics, the implications of choosing an event- or result-based architecture, and the Android-specific considerations to take regarding key generation and persistence.

If you were to take away just four main lessons on biometrics from this series, we’d want those to be the following:

1. Biometric implementation is complex, but is a worthwhile investment for your app

From choosing an encryption algorithm, to deciding on the appropriateness of fallback authentication, to understanding the options available to you for persisting your secure data, every choice you make impacts the overall security of your biometric implementation. That said, every application will have unique choices to make based on the sensitivity of the data it handles, its value to potential attackers, and the type of user experience that matters most to its customers.

But once you understand these intricacies enough to make informed decisions, it’s difficult to come up with an authentication flow use case where biometrics would not be a good choice for your application.

2. The choice between event- vs. result-based architecture is one of the most impactful decisions you’ll make in your build

One of the most important things we’ve learned in the auth business is how to think through worst-case scenarios. So while superficially event- and result-based architectures may not seem all that different, a closer look at potential breach scenarios revealed to us that result-based was by far and away preferred, even if it took a bit more time to implement. We also felt it was our job as an auth provider to make that added security more easily attainable for our customers, which is why we’ve built it into our mobile SDKs.

3. The mobile OS ecosystem is diverse, and Android in particular includes pitfalls that require some extra vigilance and due diligence

The Android ecosystem is generally diverse and historically unregulated, so whenever building anything for this OS developers need to do some homework on legacy issues.

For biometrics in particular, this means carefully considering your options when generating your biometrically-secure key and the steps you take to persist your secure data to the device. Specifically, we recommend ensuring that your keys are generated with the UserAuthenticationRequired flag, and that you use that key to encrypt/decrypt your secure data. You also need to be aware that some Android devices may not have a reliable Keystore and that, in those instances, the best solution is to not allow biometric registrations at all. Our SDK rejects those registrations by default, but as always, the choice is yours.

4. When in doubt…

When it comes to building any product in auth, whether in-house or, as in Stytch’s case, for customers, we stick to two basic principles:

- Developer friction and user experience should always be weighed against the risk that is specific to your product. There are of course standards and general best practices one must follow, but every app has unique needs for security and UX. Take the time to evaluate your app on its own terms.

- When building for developers, always inform them when they need to make an important choice. There should be no scary default behaviors, risky edge cases or quirks they’re left to discover on their own. Build defaults for the best practice in your view, and inform them of the tradeoffs. That way, you maximize protection while empowering them with choice.

Our goal with this series was to offer visibility into the decisions that went into our own biometric product, whether you’re looking to build or buy. But we’re proud to have built what we’ve found is an easy, safe-by-default option for bringing biometric authentication to applications without having to worry about the nitty-gritty details explored in this blog series.

So if you’re not ready to build mobile biometrics from the ground up, try Stytch’s mobile SDKs for iOS, Android, or React Native, and get started with just a few lines of code.

Check out our careers page if solving problems and reading topics like this interest you!

About Latacora

A big thank you to Latacora for helping us with our research.

Latacora bootstraps security practices for startups. Instead of wasting your time trying to hire a security person who is good at everything from Android security to AWS IAM strategies to SOC2 and apparently has the time to answer all your security questionnaires plus never gets sick or takes a day off, you hire us. We provide a crack team of professionals prepped with processes and power tools, coupling individual security capabilities with strategic program management and tactical project management.

Other awesome resources to check out:

Using BiometricPrompt with CryptoObject: how and why

How secure is your android keystore authentication

How you should secure your Android’s app biometric authentication

A closer look at the security of React Native biometric libraries

Audit: Biometric authentication should always be used with a cryptographic object

Android Biometric API: Getting Started

Android Keystore

BiometricPrompt

CipherObject

Tink

BouncyCastle
