Back to blog

Model Context Protocol (MCP): A comprehensive introduction for developers

Auth & identity

Mar 28, 2025

Author: Reed McGinley-Stempel

Executive summary

Model Context Protocol (MCP) is an open standard that bridges AI models with external data and services, allowing Large Language Models (LLMs) to make structured API calls in a consistent, secure way. This post will introduce MCP, explain why it’s valuable for connecting AI systems, compare it to existing approaches like ChatGPT plugins and manual API integrations, and dive into its recent support for OAuth-based authentication. We’ll also explore a bit of code to see MCP in action.

MCP acts as a universal adapter between AI tools and external services, eliminating the need for custom integration code for each tool or API. Much like USB-C simplifies connectivity across diverse devices, MCP provides a uniform method for AI models to invoke external functions, retrieve data, or use predefined prompts. At its core, MCP offers one interface that connects to many different systems.

What is Model Context Protocol MCP?

MCP is essentially a universal adapter between AI applications and external tools or data sources. It defines a common protocol (built on JSON-RPC 2.0) that lets an AI assistant invoke functions, fetch data, or use predefined prompts from external services in a structured manner. Instead of every LLM app needing custom code for each API or database, MCP provides one standardized “language” for all interactions.

The MCP layer enables AI applications to securely access and interact with external data sources and tools. It serves as a bridge between large language models (LLMs) and various databases, applications, or APIs, facilitating seamless integration and functionality without the need for extensive custom coding.

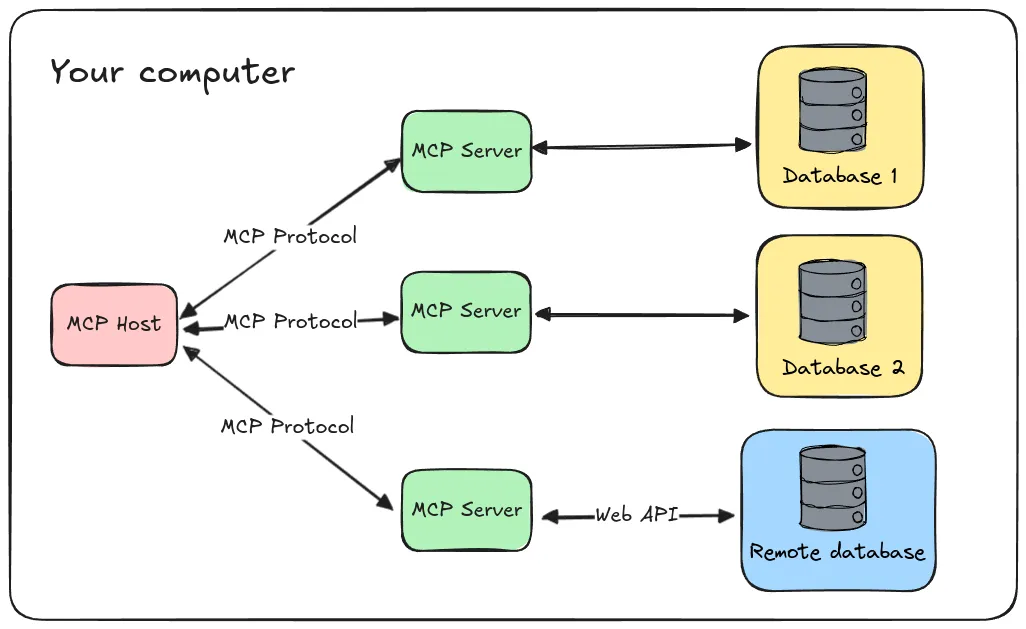

MCP uses a client-server architecture to achieve this. The AI-powered application (e.g. a chatbot, IDE assistant, or agent) acts as the host and runs an MCP client component, while each external integration runs as an MCP server. The server exposes capabilities (like functions, data resources, or prompt templates) over the MCP protocol, and the client connects to it to utilize those capabilities. This separation means the AI model doesn’t talk to APIs directly; instead, it goes through the MCP client/server handshake, which structures the exchange.

Why MCP is Valuable

In traditional setups, connecting an AI model to external data or actions was tedious and ad-hoc. Developers often had to write one-off integrations for each API or database they wanted the model to use, dealing with different auth, data formats, and error handling for each. MCP changes the game by standardizing these interactions. Key benefits include:

- Rapid Tool Integration: With MCP, you can plug in new capabilities without custom-coding each from scratch. If an MCP server exists for, say, Google Drive or a SQL database, any MCP-compatible AI app can connect to it and immediately gain that ability. This is a huge win for automation—AI agents can fetch documents, query databases, or call APIs as needed, just by adding the appropriate server. It’s like having a library of ready-made “plugins” that all speak the same language. MCP servers serve as lightweight programs that expose specific capabilities through a standardized protocol, acting as intermediaries between the Cursor and various external tools or data sources.

- Autonomous Agents: MCP empowers more autonomous AI behavior. Agents are not limited to their built-in knowledge; they can actively retrieve information or perform actions in multi-step workflows. For example, a sophisticated agent might use MCP to gather data from a CRM, then send an email via a communications tool, then log a record in a database – all in one seamless chain. By enabling fluid, context-aware, multi-step interactions, MCP helps AI agents move closer to true autonomous task execution. As one observer noted, MCP turns an AI from an isolated “brain” into a versatile “doer” by giving it standardized access to real-world tools and data.

- Reduced Friction and Setup: Because MCP acts as a universal interface, developers avoid the fragmentation of maintaining separate integrations. Once an application supports MCP, it can connect to any number of services through a single mechanism . This dramatically reduces the manual setup required each time you want your AI to use a new API. Teams can focus on higher-level logic rather than reinventing connection code for the 10th time. As Anthropic put it, MCP replaces fragmented integrations with a simpler, more reliable single protocol for data access.

- Consistency and Interoperability: MCP enforces a consistent request/response format across tools. This means your AI app doesn’t have to handle one HTTP response for Service A, another XML for Service B, etc. The model’s outputs (function calls) and the tool results are all passed in a uniform JSON structure. That consistency makes it easier to debug and scale. It also future-proofs your integration logic – even if you switch underlying model vendors, MCP’s interface to the tools remains the same.

- Two-Way Context: Unlike simple API calls, MCP supports maintaining context and ongoing dialogue between the model and the tool. An MCP server can provide Prompts (predefined prompt templates for certain tasks) and Resources (data context like documents) in addition to tools. This means the AI can not only “call an API” but also ingest reference data or follow complex workflows guided by the server. The protocol was designed to support rich interactions, not just one-off queries. This is especially useful in applications like coding assistants (where an AI might iterate with a development environment via MCP) or complex decision-making tasks that require back-and-forth with various data sources.

In short, MCP brings a scalable, plug-and-play approach to enhancing LLMs. It lets AI systems securely tap into the “live” data and actions they need, without each developer having to reinvent the wheel. Early adopters of MCP have already built servers for tools like Google Drive, Slack, GitHub, databases, and more – showcasing how AI agents can use MCP to work with enterprise content repositories, dev ops tools, and other real-world systems.

The MCP architecture – How it works at-a-glance

Client-Server structure

MCP follows a clear client-server architecture:

- MCP Client: Embedded in AI applications (chatbots, IDE assistants, automation agents).

- MCP Server: Exposes external capabilities such as functions (tools), resources (data), and prompts (templates).

All interactions occur through standardized JSON-RPC messages, maintaining a secure, structured exchange:

Example JSON-RPC Request:

For example, to list available tools, an MCP client sends a request like this:

{

"jsonrpc": "2.0",

"id": 1,

"method": "tools/list",

"params": {}

}The server would reply with a structured JSON listing the tools (each with a name, description, and input schema). For instance, a server might advertise a get_weather tool and describe what inputs it needs.

{

"jsonrpc": "2.0",

"id": 1,

"result": [

{ "name": "get_weather", "description": "Retrieves weather data.", "schema": { "location": "string" } }

]

}Later, when the LLM decides to use a tool, the client invokes a call to do so. The MCP server executes the function and returns the result in a structured JSON response. The MCP client (within the host app) can then feed that result back into the model’s context or response. In practice, the host application mediates this process: it translates the LLM’s intent (often via the model’s function call output) into an MCP request, and then passes the server’s structured result back to the LLM . This two-way exchange is secure and controlled – the model can only call the specific tools the server exposes, and all data passing in/out goes through the defined protocol.

Building and deploying MCP servers

Building and deploying MCP servers is a crucial step in leveraging the Model Context Protocol (MCP) for AI integrations. MCP servers act as intermediaries between AI models and external data sources or tools, enabling seamless communication and data exchange. One of the standout features of MCP is its flexibility in server development. Developers can use any programming language that can print to stdout or serve an HTTP endpoint, allowing them to choose their preferred language and technology stack.

When building an MCP server, it’s essential to consider the architecture and design. MCP follows a client-server architecture, where a host application can connect to multiple servers. This architecture enables scalability and flexibility, allowing developers to design MCP servers that handle various tasks and functions, such as data processing, tool integration, or AI model management.

Deploying MCP servers can be done in various environments, including local development environments, cloud platforms, or on-premises infrastructure. For instance, Cloudflare provides a robust platform for building and deploying remote MCP servers, making it easier to manage and scale MCP deployments. This flexibility ensures that MCP servers can be tailored to meet the specific needs of different applications and environments, whether it’s a local setup for development or a cloud-based solution for production.

By focusing on a well-designed architecture and leveraging the flexibility of MCP, developers can create powerful and scalable MCP servers that enhance the capabilities of AI models, enabling them to interact seamlessly with external data sources and tools.

MCP clients and tools

MCP clients and tools are essential components of the Model Context Protocol ecosystem. MCP clients are applications that connect to MCP servers to access external data sources or tools. These clients can be built using various programming languages and frameworks, such as Python, JavaScript, or Java, providing developers with the flexibility to choose the best tools for their specific needs.

MCP tools, on the other hand, are software components that provide specific capabilities or functions to MCP clients. These tools can be integrated with MCP servers to enable features such as data processing, AI model management, or tool integration. Examples of MCP tools include Claude Desktop, which provides a chat interface for interacting with AI models, and Cursor, which offers a plugin system for extending AI capabilities.

Developers can build custom MCP clients and tools to meet specific use cases or requirements. This flexibility allows for innovation and experimentation in the MCP ecosystem, enabling developers to create new and exciting applications. Whether it’s a specialized tool for data analysis or a client application that integrates with multiple MCP servers, the possibilities are vast.

By leveraging MCP clients and tools, developers can create robust and versatile applications that harness the full potential of AI models, enabling them to interact with a wide range of external data sources and tools seamlessly.

Comparing MCP to other approaches

MCP | Custom Integrations | ChatGPT Plugins | LangChain & Frameworks | |

|---|---|---|---|---|

Integration Speed | ||||

Integration Speed | ✅ Fast, plug-and-play | ❌ Slow, custom code | ⚠️ Medium, proprietary | ⚠️ Medium, custom code |

Authentication | ||||

Authentication | ✅ OAuth standard | ❌ Manual API keys | ⚠️ Plugin-specific OAuth | ❌ Varies by implementation |

Interaction Type | ||||

Interaction Type | ✅ Continuous & context-rich | ❌ Ad-hoc interactions | ❌ Single-shot interactions | ⚠️ Context limited |

Open Standard | ||||

Open Standard | ✅ Yes | ❌ No | ❌ No | ⚠️ Framework-dependent |

Let’s compare a few approaches that existed before or alongside MCP:

- Custom Integrations & API Key Management: The most common traditional approach was to write custom code for each service and supply the LLM with credentials (API keys or tokens) to use those integrations. For example, you might write a Python function to query an API, give the LLM the ability to call that function, and manually handle the API key in your backend. This approach is labor-intensive and doesn’t scale – each new data source requires new code, and each environment must securely manage API keys. It often led to brittle systems, since every integration was unique. By contrast, MCP centralizes and standardizes these interactions: the AI agent just needs to handle the MCP protocol, and any MCP server (for any service) will work in a plug-and-play way. New servers can be added without changing the client’s code at all. Moreover, MCP provides a formal structure for authentication (discussed later) so that handing API keys to the AI isn’t done ad-hoc but through a secure protocol.

- ChatGPT Plugins (OpenAI Plugins): In 2023, OpenAI introduced a plugins system for ChatGPT that allowed models to call external APIs defined by an OpenAPI spec. This was an early step toward standardized tool use, but it has limitations. Each plugin is essentially its own mini-integration (with its own API schema and authentication), and they need to be built/hosted individually. Only certain platforms (like ChatGPT or Bing Chat) can use those plugins, since it was a proprietary approach. Plugins also were mostly one-shot calls – the model would call an API and get info, without a persistent connection or ongoing exchange. MCP differs in that it’s open and universal (not tied to one provider or interface) and it supports rich two-way interactions and continuous context. Think of ChatGPT plugins as specialized tools in a closed toolbox, whereas MCP is an open-standard toolkit that any developer or AI platform can utilize. MCP’s standardized auth (especially OAuth) also means it can handle secure access to user data in a more uniform way than the plugin-by-plugin OAuth flows in ChatGPT’s system. In summary, ChatGPT plugins showed the value of standardizing API access for LLMs, but MCP takes it further by making it an open protocol and by enabling a persistent “conversation” between AI and services.

- LLM Tool Frameworks (LangChain, Agentic libraries): Before MCP, many developers used frameworks like LangChain to give models tools. In these setups, you define a set of tool functions (with descriptions) and the agent’s prompting logic so the LLM can decide to use them. This works, but each tool still requires custom implementation behind the scenes – LangChain ended up with hundreds of tool integrations maintained in its library. Essentially, LangChain provided a developer-facing standard (a Python class interface) for integrating tools into an agent’s codebase, but nothing for the model to dynamically discover new tools at runtime. MCP is complementary to these frameworks, shifting the standardization to be model-facing. With MCP, an agent can discover and use any tool that an MCP server provides, even if the agent’s code didn’t explicitly include that tool ahead of time. In fact, LangChain has added support so it can treat MCP servers as just another tool source – meaning an agent built with LangChain can call MCP tools easily, leveraging the growing ecosystem of MCP servers. The difference is that MCP formalizes the interface over a protocol (JSON-RPC, with metadata, etc.), making it easier to plug into different environments, not just Python frameworks. Similarly, OpenAI’s native function calling feature can be seen as handling the formatting of a function call (the model outputs a JSON function call), whereas MCP handles the execution of that call in a standardized way . OpenAI’s function calling and MCP often work in tandem: the LLM produces a structured call, and the MCP client/server execute it and return the result, which together enables seamless tool use.

In essence, MCP isn’t the first attempt to connect LLMs with external APIs – but it learns from those past approaches (plugins, tool libraries, etc.) and unifies the solution. It provides an open, model-agnostic protocol that simplifies integration and authentication. Particularly around security and auth, MCP’s design (with OAuth support) avoids the patchwork of per-plugin keys or giving raw API keys to the model. Instead, authentication can be handled in a consistent, standardized flow as part of the protocol – a major step up from the status quo.

MCP in action: technical deep dive

Let’s walk through a typical MCP interaction step-by-step to solidify how it works, and look at a bit of code. Imagine we have an AI assistant that wants to use an MCP server providing a set of tools (say, for a hypothetical “Neon” database service). The high-level flow is:

1. Connect to the MCP Server – The host application (AI assistant) initializes an MCP client and establishes a connection to the server. Depending on the server location, this could be via a local process (stdio) or a remote HTTP stream (SSE). Under the hood, the client sends an initialize message to handshake protocol versions and capabilities.

2. Discover Available Tools/Resources – The client then queries what the server offers. As shown earlier, it might send {"method": "tools/list"} and get back a list of tool definitions. The assistant can use this to inform the LLM (for example, by including the tool list in a system prompt or via the model’s function schema, depending on implementation). For instance, using an SDK, this could be one line of code like:

const tools = await mcpClient.request({ method: 'tools/list' }, ListToolsResultSchema);which returns a structured list of tools. Each tool entry has a name, description, and JSON schema for inputs, so the AI knows what it can do.

3. LLM Chooses a Tool – When a user asks the AI something that requires external action, the LLM determines (often via prompt engineering or function calling capabilities) that a certain tool should be used. For example, user asks: “What’s the latest price of AAPL stock?” The LLM sees it should call get_current_stock_price(company="AAPL", format="USD"). The host application captures this intent (e.g., OpenAI’s function calling API would return a function name and arguments in JSON).

4. Invoke the Tool via MCP – The client now sends a tools/call request to the server with the chosen tool name and parameters. We saw an example JSON for this earlier. In code, using an SDK, it might look like:

const result = await mcpClient.request({

method: 'tools/call',

params: { name: toolName, arguments: toolArgs }

}, CallToolResultSchema);This will cause the server to execute the tool’s handler on its side. The server might be calling an external API, performing a database query, or whatever logic that tool encapsulates. The result (could be a simple value or a complex JSON object) is sent back in a result field of the MCP response.

5. Return the Result to the LLM – The MCP client receives the tool’s output. Now the host application can integrate that back into the AI’s response. In many agent setups, the pattern is to inject the result into the conversation and ask the model to continue. For example, the assistant might then present: “The current stock price of AAPL is $173.22 (USD).” If using an automated loop, the result can be given to the model (perhaps appended to the conversation as a system message like “Result of get_current_stock_price: ...”) and the model can continue answering the user’s query with that information in mind.

Here’s a simplified illustration of calling a tool and using the result in a conversation using Anthropic’s Claude (which natively supports tool use):

// 1. Send user prompt to LLM with available tools context

const response = await anthropicClient.complete({

prompt: "User: Can you list my projects?\nAssistant: ",

model: "claude-3.5",

tools: tools // list of tools from MCP server

});

for (const msg of response.messages) {

if (msg.type === 'tool_use') {

// 2. LLM decided to use a tool

const { name, args } = msg;

// 3. Call the tool via MCP

const toolRes = await mcpClient.request({ method: 'tools/call', params: { name, arguments: args } });

// 4. Inject tool result and resume LLM

await anthropicClient.send({ role: 'system', content: `Tool result: ${toolRes.result}` });

} else {

// 5. Handle normal LLM reply (tool result likely integrated)

console.log("Assistant:", msg.content);

}

}

In reality, frameworks handle a lot of this for you, but the above pseudo-code sketches how MCP fits into the loop. The key point is that MCP provides the standardized call/response layer for tool execution, which the AI agent code can hook into. Whether you’re using OpenAI, Anthropic, or another LLM, MCP stays the same – it’s the glue between the model’s intents and the external actions.

By using MCP, developers get a clear, structured pipeline for extending AI capabilities. The code becomes more maintainable (since you’re calling a generic mcpClient.request rather than service-specific code in each place) and the AI becomes more powerful (since it can tap into any MCP-connected service). Debugging is also easier – you can monitor the JSON-RPC messages to see exactly what was requested and returned, rather than parsing model-generated text for clues.

Early limitations (no built-in authentication)

When MCP first emerged (late 2024), it offered the core protocol for tool and data exchange, but it lacked a standardized authentication mechanism for connecting to remote servers. In practice, early MCP demos and implementations often required running the MCP server locally or in a trusted environment, where authentication wasn’t a big concern (since the AI and server ran on the same machine). For example, developers could run an MCP server for Google Drive on their localhost with a pre-obtained token, then point their AI app to it. But using MCP over the internet or with third-party services was tricky without a formal auth flow.

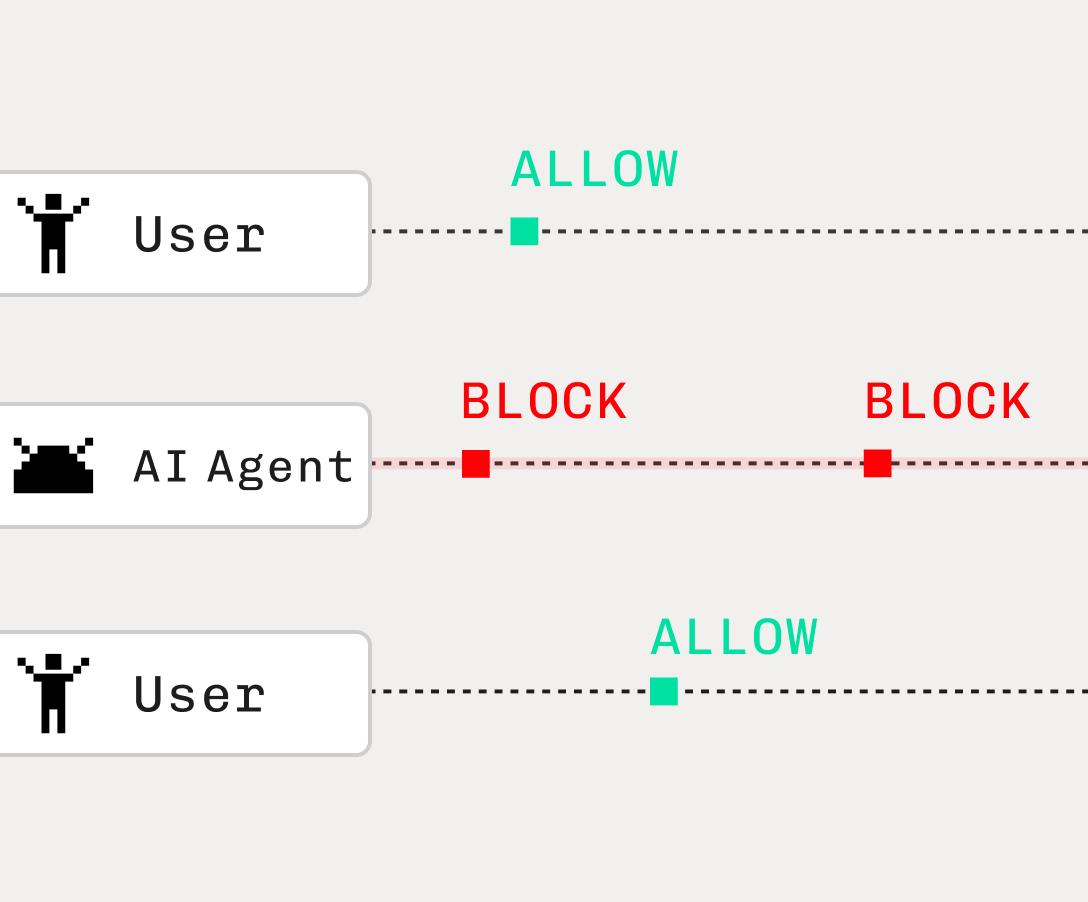

Many initial MCP servers assumed the user would manually provide credentials or API keys to the server at startup. As an example, Anthropic’s quickstart suggested running pre-built servers by supplying your own credentials (API keys, tokens) via config or command-line. That means the server itself had access to your keys and the MCP client just trusted that server. While this works for personal or single-user scenarios, it doesn’t scale well for multi-user applications or cloud-hosted agents. There was no standard handshake for an AI agent to say, “Hey, I’m allowed to access this service on behalf of User X; here are my credentials.”

Essentially, early MCP clients had no way to authenticate to an MCP server except by out-of-band means (like pre-sharing a token or running without auth). This was a notable limitation – MCP was designed to be open and internet-based, but without an auth standard, secure remote use was handicapped.

The lack of authentication standard meant that MCP clients couldn’t safely connect to arbitrary servers on their own. You either hard-coded a client ID/API key into the client (which is not ideal in distributed apps), or you had to run without auth and assume only authorized users could even reach the server (often by keeping it local). Clearly, for MCP to reach its full potential (e.g. connecting an AI agent to a cloud-hosted data source in a secure way), a better approach was needed. The good news is that the community recognized this, and work was done to bake OAuth-based authentication into MCP.

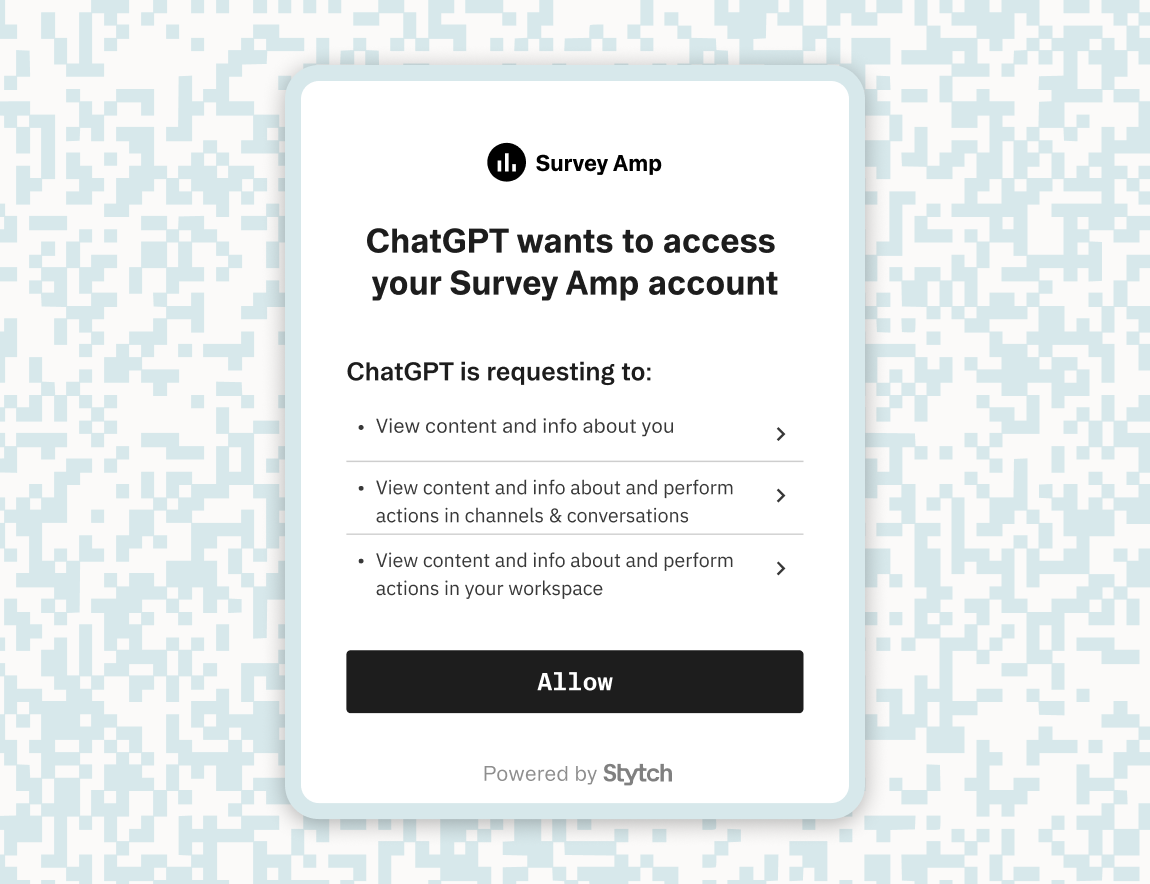

OAuth 2.0 authentication flow

To address the initial authentication limitations and enhance secure connectivity, MCP adopted OAuth 2.0, a widely-recognized and robust authentication standard. OAuth 2.0 provides a secure, scalable framework enabling MCP clients to interact safely with remote servers, cloud-hosted resources, and multi-user environments. The key components and benefits of integrating OAuth 2.0 into MCP include:

- Dynamic Client Registration (DCR): Model Context Protocol supports Dynamic Client Registration, allowing clients to register automatically with OAuth servers. This removes the need for manual client setup or hard-coded credentials, significantly streamlining deployment for developers.

- Automatic Endpoint Discovery: MCP utilizes standardized metadata URLs (following OAuth's discovery protocol) to allow clients to automatically discover OAuth endpoints. This reduces configuration overhead and makes MCP deployments easier and more flexible.

- Secure Authorization and Token Management: Clients securely obtain OAuth tokens tailored precisely to user permissions and access scopes. This ensures that clients access only the resources explicitly permitted by the user, improving security and compliance, especially in multi-user and cloud environments.

- Scalable and Secure Multi-User Support: OAuth 2.0's design inherently supports multiple concurrent users and services, addressing one of MCP's significant early limitations. Applications can now seamlessly handle authorization flows for numerous users simultaneously, critical for widespread cloud adoption.

Debugging and troubleshooting

Debugging and troubleshooting are critical aspects of working with MCP servers and clients. MCP provides various tools and techniques for debugging and troubleshooting, ensuring that developers can identify and resolve issues efficiently. One of the key tools in this process is the MCP Inspector, an interactive debugging tool for MCP servers.

The MCP Inspector allows developers to test and inspect MCP servers, identifying and resolving issues with MCP server integrations. This tool provides a detailed view of the interactions between MCP clients and servers, making it easier to pinpoint problems and understand the underlying causes. Additionally, MCP provides a comprehensive debugging guide that outlines common issues and solutions, helping developers to troubleshoot and resolve problems quickly.

When debugging MCP servers, it’s essential to consider the architecture and design of the system. Developers should identify the specific components or modules that are causing issues and use tools like the MCP Inspector to diagnose and resolve problems. By focusing on a systematic approach to debugging and leveraging the available tools, developers can ensure that their MCP integrations are robust and reliable.

Real-world applications of MCP

The Model Context Protocol (MCP) has various real-world applications across industries and domains. One of the primary use cases for MCP is in AI integrations, where MCP enables seamless communication and data exchange between AI models and external data sources or tools.

MCP can be used in various applications, such as:

- Building AI-powered chatbots: These chatbots can access external data sources or tools, providing users with accurate and up-to-date information.

- Creating AI-driven workflows: MCP enables the integration of AI models with external systems or data sources, automating complex workflows and improving efficiency.

- Developing AI models: These models can interact with external tools or data sources, enhancing their capabilities and providing more accurate and relevant outputs.

- Enabling AI-powered automation: In industries such as finance, healthcare, or manufacturing, MCP can automate tasks and processes, improving productivity and reducing errors.

MCP’s flexibility and adaptability make it an attractive solution for developers and organizations looking to leverage AI and machine learning in their applications. By providing a standardized interface for AI models to interact with data sources and tools, MCP enables innovation and experimentation in the AI ecosystem. This standardized approach not only simplifies the integration process but also ensures that AI models can access the data and tools they need to perform at their best.

In summary, MCP opens up a world of possibilities for AI applications, allowing developers to create more integrated, autonomous, and scalable solutions. Whether it’s enhancing customer service with AI-powered chatbots or automating complex workflows in industrial settings, MCP provides the tools and framework needed to bring these innovations to life.

Conclusion

Model Context Protocol (MCP) is an exciting development in AI development because it allows developers to safely and efficiently connect our increasingly intelligent language models to the extensive world of software and data previously difficult to connect with. By introducing a common protocol, MCP lets us build AI systems that are more integrated, autonomous, and easier to scale. Instead of writing one-off plugins or giving the model brittle instructions for each new tool, we have a coherent framework where AI agents can discover and use tools on the fly, with proper oversight and security.

While the protocol is still evolving (authentication was a recent addition, and more features like standardized server discovery are on the horizon, it’s clear that MCP or something like it will play a key role in the next generation of AI applications. For developers, now is a great time to familiarize yourself with MCP concepts. Whether you’re enhancing a chatbot with company-specific knowledge or building an AI agent that automates workflows, MCP can save you time and headaches by handling the “plumbing” of tool integration. And since it’s an open standard backed by a growing community (and companies like Anthropic), it’s likely to become a foundational piece of AI infrastructure moving forward.

In summary, Model Context Protocol enables a world where AI assistants are not siloed geniuses but well-equipped engineers and assistants – able to interface with many systems, follow procedures, and fetch or create information as needed, all through a unified, secure interface. That’s a powerful vision, and one that is quickly becoming reality with MCP.

At Stytch, we’re focused on easily solving the remote MCP server auth problem for customers, so they can easily stand up MCP servers for their applications to allow end users to provide permissioned access to MCP clients.

MCP auth with Stytch

Use Stytch Connected Apps to build authentication with MCP servers

Authentication & Authorization

Fraud & Risk Prevention

© 2025 Stytch. All rights reserved.