
What does compiler theory have to do with auth?

Engineering

Jul 12, 2023

Author: Joshua Hight

Question: What does compiler theory have to do with authentication?

Beyond the fact that normal people don’t think much about either, there’s another, more fecund connection…

One of the big hidden costs of building and maintaining an API, whether it’s a product like Stytch or a service that’s only used internally, is maintaining SDKs or client libraries, usually in at least a couple of different languages. For an auth API like Stytch, this is made all the more complex by the security and reliability concerns peculiar to libraries tied to a critical piece of security infrastructure. Even with well-factored code and extensive unit tests, client code is still subject to human error, especially given what a tedious chore it is to maintain.

Much as we love humans at Stytch (and our human developers in particular), we’re a teeny bit obsessive about systems that help eliminate those pesky errors, especially where they can cause latency or security risks for our customers. Luckily, at least one person on our team was paying attention in their compiler theory class (thanks Prof. Galles), and realized a solution for maintaining our libraries might just lie in that obscure collegiate experience.

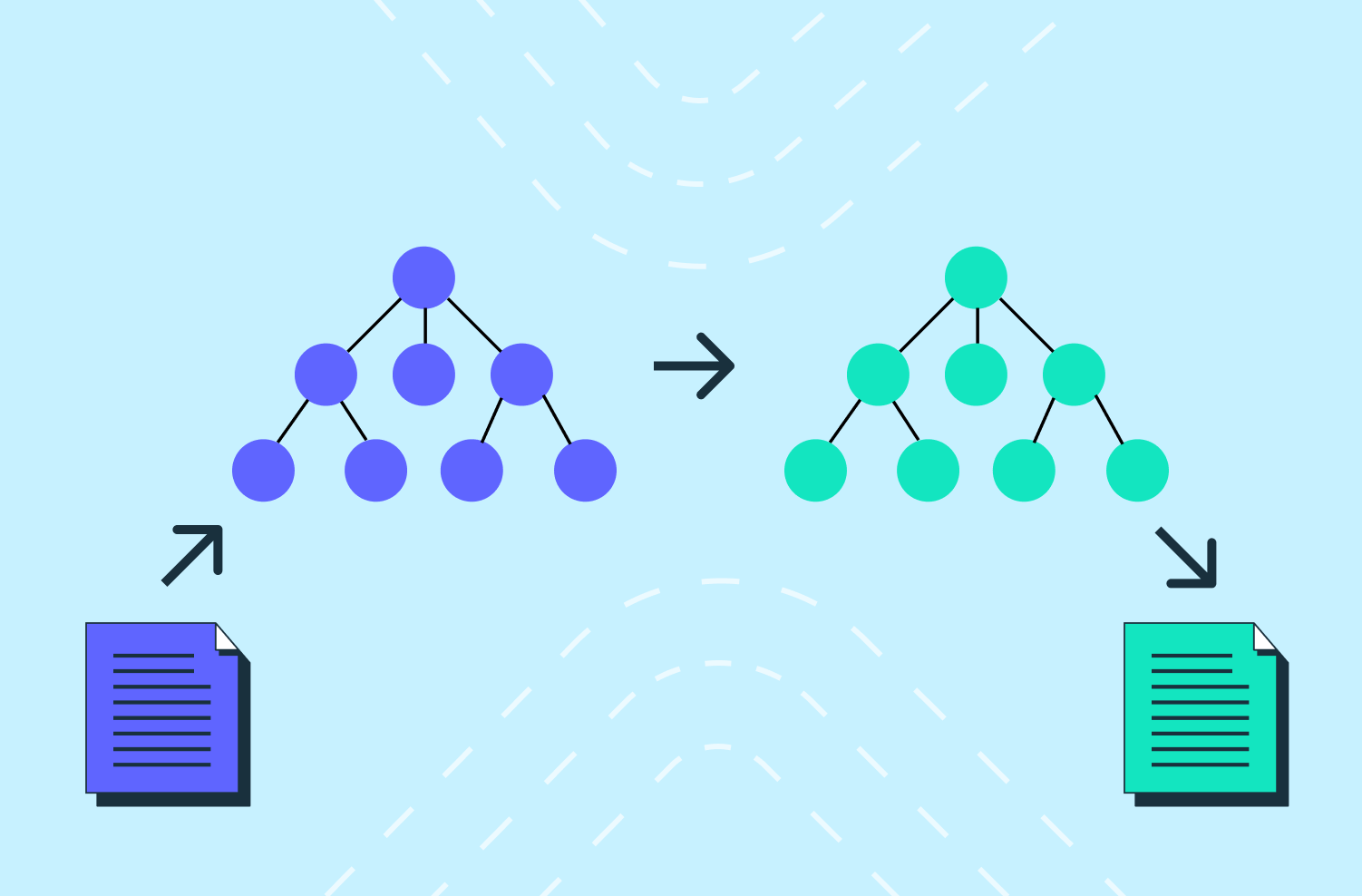

On a hunch, this engineer considered the following question: what if you transpiled your API definition (ideally strongly typed and versioned) into client code?

This article covers the first part of Stytch’s attempt to answer that “What if?” and how we began designing a code generator for our backend SDKs in Go, Python, Ruby, and Node.

Part one | What does compiler theory have to do with auth?

Part two | Generating "humanlike" code for our backend SDKs

Table of contents

The problem: maintaining clients

Step 1: Generating clients from a declarative spec

Step 2: Hacking out a protobuf transpiler in awk

Step 3: Writing a proper AST-based transpiler

Good for you, why should I care?

The problem: maintaining clients

If you’ve dabbled in API schema evolution (and who hasn’t really?) you may know that there are a number of challenges. Depending on your use case, the worst of these can include maintaining strong typing, forward and backward compatibility, and mapping complex data structures across languages. What do those things mean? Well let’s take it one thing at a time.

Strong typing

Strong typing (strictly speaking, static typing) is the ability to assert that a given variable has a particular type at compile time rather than having to check at runtime. Some languages like Python have traditionally made do without this, but many people (your author included) sleep better at night knowing that callers can’t just pass arbitrary types as arguments to a function that was only intended to work with strings.
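As a small illustration in Python, here is a sketch of what that buys you. The function name `normalize_email` is made up for this example and is not part of any Stytch SDK. With type hints, a static checker such as mypy can reject a bad call before the code runs, whereas plain Python only notices once the value is actually used:

```python
def normalize_email(email: str) -> str:
    """Trim whitespace and lowercase an email address."""
    return email.strip().lower()


def call_with_wrong_type() -> str:
    """Show what happens at runtime when the type hint is ignored."""
    try:
        # A static checker such as mypy flags this call; plain Python does not.
        normalize_email(42)  # type: ignore[arg-type]
    except AttributeError as exc:
        # The mistake only surfaces when .strip() is looked up on an int.
        return f"failed at runtime: {exc}"
    return "no error"
```

The hint itself changes nothing at runtime; the value comes from running a checker in CI so the bad call never ships.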

Forward and backward compatibility

Forward and backward compatibility are things that you have to worry about when you start changing your API. You might wind up adding a new field to a request, but does that mean that old versions of the client that don’t populate that field will break? What if you need to remove a field, or use a different type for a field, or heaven forfend modify the response? If you’re using regular JSON or a naïve binary protocol, these simple things can cause breakage any time you make a small change and the server and client aren’t using the exact same version.
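To make that concrete, here is a minimal Python sketch of a "tolerant" decoder. The `CreateUserRequest` shape and its fields are hypothetical, chosen only for illustration. It shows the two behaviours you want: fields the receiver doesn't know about are ignored, and fields the sender didn't populate fall back to defaults.

```python
from dataclasses import dataclass, fields
from typing import Any, Dict, Optional


@dataclass
class CreateUserRequest:
    """Hypothetical request shape, used only for this example."""
    email: str
    # Imagine this was added in a later API version; old clients never send it.
    phone_number: Optional[str] = None

    @classmethod
    def from_dict(cls, payload: Dict[str, Any]) -> "CreateUserRequest":
        known = {f.name for f in fields(cls)}
        # Unknown keys are dropped (forward compatibility); missing optional
        # keys fall back to their defaults (backward compatibility).
        return cls(**{k: v for k, v in payload.items() if k in known})


old_client_payload = {"email": "ada@example.com"}
newer_client_payload = {
    "email": "ada@example.com",
    "phone_number": "+15555550100",
    "locale": "en",  # a field this version of the code has never heard of
}
```

Both payloads decode without error. Hand-rolling this discipline for every message in every language is exactly the kind of chore a schema language is meant to take off your plate.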

Mapping complex data structures

Mapping complex data structures across languages is always very interesting. Much of the value in having lots of different programming languages to work with comes from the different paradigms they offer, which lend themselves to different approaches to solving problems. The problem is that many of those different paradigms have to do with how data is represented. Some languages only have one list/array type and swap out implementations under the covers; some languages have algebraic type systems that let you construct whole worlds for others to shudder at; and some languages just have scalars, lists of scalars, and maps of scalars.

So how do you reason about sending messages between servers and clients written in these different languages? Well, you start by defining your API in a fairly generic way that only uses the very simplest types. But soon you realize that what you thought was simple was in fact totally unworkable in some weird case you have to support, and then you wake up in the bathtub covered in bourbon and cold sweat.

Luckily, there is a great deal of prior art concerning these challenges. One of the better solutions is protobufs (protocol buffers), a really lovely declarative API schema language that’s built from the ground up to support strong typing, forward and backward compatibility, as well as mapping complex types between languages. There are other options as well, like OpenAPI, Thrift, or even Cap’n Proto if you’re feeling zany, but Stytch went with protobufs because of their majestic and expansive open source ecosystem.

There is also a great deal of prior art in how to programmatically generate API clients from protobufs; unfortunately the code they generate usually looks something like this…

```python
def __init__(self, email=None, name=None, attributes=None):  # noqa: E501
    """UserCreate - a model defined in Swagger"""  # noqa: E501
    self._email = None
    self._name = None
    self._attributes = None
    self.discriminator = None
    self.email = email
    if name is not None:
        self.name = name
    if attributes is not None:
        self.attributes = attributes

@attributes.setter
def attributes(self, attributes):
    """Sets the attributes of this UserCreate.

    :param attributes: The attributes of this UserCreate.  # noqa: E501
    :type: Attributes
    """
    self._attributes = attributes

def to_dict(self):
    """Returns the model properties as a dict"""
    result = {}
    for attr, _ in six.iteritems(self.swagger_types):
        value = getattr(self, attr)
        if isinstance(value, list):
            result[attr] = list(map(
                lambda x: x.to_dict() if hasattr(x, "to_dict") else x,
                value
            ))
        elif hasattr(value, "to_dict"):
            result[attr] = value.to_dict()
        elif isinstance(value, dict):
            result[attr] = dict(map(
                lambda item: (item[0], item[1].to_dict())
                if hasattr(item[1], "to_dict") else item,
                value.items()
            ))
        else:
            result[attr] = value
    if issubclass(UserCreate, dict):
        for key, value in self.items():
            result[key] = value
    return result
```

…which is sort of the opposite of a user-friendly API, and still requires a bunch of protobuf-specific boilerplate. In fact, if protobuf 3 isn’t well-supported for all your client library languages, you’ll need to figure out mapping between a StringValue and a nullable string for every language. Then, for every client language you get to spend a significant amount of time writing or finding a good library to manage mapping the protobuf types to something readable and at least vaguely idiomatic to each language.
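For a sense of what that StringValue mapping involves, here is a rough Python sketch. The `StringValue` class below is a stand-in written for this example rather than the real generated protobuf class, and the helper names `to_nullable` and `from_nullable` are made up. The wrapper exists so that "not set" can be told apart from "set to the empty string", which is the distinction a nullable string carries natively.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class StringValue:
    """Stand-in for protobuf's google.protobuf.StringValue wrapper message."""
    value: str = ""


def to_nullable(wrapper: Optional[StringValue]) -> Optional[str]:
    """Unset wrapper -> None; set wrapper -> its string, even if empty."""
    return None if wrapper is None else wrapper.value


def from_nullable(text: Optional[str]) -> Optional[StringValue]:
    """Inverse mapping: None stays unset, any string gets wrapped."""
    return None if text is None else StringValue(value=text)
```

Two tiny functions look harmless, but they have to exist for every wrapper type, in every client language, and they all have to agree.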

Given that our aim was to make auth easier, this clearly wasn’t a viable option. Instead, we decided to maintain our clients manually.

As the reader can likely imagine, this manual endeavor turned out to be tremendously tedious and tiresome toil. Every API change required changes across no fewer than six repositories, five of them effectively identical apart from language syntax. The result was exactly what one would expect: clients in the languages people knew best stayed more up to date than the others, drift crept in between languages as small human errors built up over time, and numerous small oversights, like missing response fields and differences in object mappings between languages, further compounded this tech debt.

Then one day in an effort to change all that, one brave engineer at Stytch undertook a 20% project to template one of these clients.

Step 1: Generating clients from a declarative spec

Web clients range in complexity from simple Create, Read, Update, Delete (CRUD) interfaces to complex, cluster-aware, concurrent computational linguistics interfaces. Luckily, auth is one of the simpler APIs and has very few cases that deviate from simple CRUD mechanics. Auth clients therefore tend to be fairly repetitive, with most of the logic being simple serialization/deserialization (serde). This makes them extremely well suited to templating.

Such was the foundational insight of this intrepid engineer’s efforts and (spoiler alert) it was borne out to spectacular success. This engineer wrote a Python program that would:

- Parse a YAML (YAML Ain’t Markup Language, originally Yet Another Markup Language) specification and

- Render it using Jinja2 templates to create a client interface that was readable, performant, secure, and required significantly less effort to keep up-to-date.
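The two steps above can be sketched roughly as follows. This is not Stytch's generator: to keep the example self-contained it uses the standard library's `string.Template` in place of Jinja2 and a plain dict in place of a parsed YAML file. The endpoint, field names, and the `_post` helper in the generated output are all invented for illustration.

```python
from string import Template

# Stand-in for a parsed YAML spec; the endpoint and field names are made up.
SPEC = {
    "method": "create_user",
    "path": "/v1/users",
    "args": [
        {"name": "email", "arg_type": "str"},
        {"name": "name", "arg_type": "Optional[str]"},
    ],
}

# Stand-in for a Jinja2 template; `_post` is a hypothetical client helper.
METHOD_TEMPLATE = Template(
    "def $method(self, $params) -> dict:\n"
    "    payload = {$payload}\n"
    '    return self._post("$path", payload)\n'
)


def render_method(spec: dict) -> str:
    """Render one client method from a declarative endpoint description."""
    params = ", ".join(f"{a['name']}: {a['arg_type']}" for a in spec["args"])
    payload = ", ".join('"{0}": {0}'.format(a["name"]) for a in spec["args"])
    return METHOD_TEMPLATE.substitute(
        method=spec["method"], params=params, payload=payload, path=spec["path"]
    )


generated = render_method(SPEC)
```

Adding an endpoint means adding an entry to the spec, and fixing a bug in the template fixes it for every method at once.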

Manually writing client changes to this library became a thing of the past. The code templates are occasionally modified to fix bugs but otherwise require very few changes. Moreover, modifying the specifications takes considerably less effort than writing boilerplate code.

So why not stop there? Two reasons:

- The upfront cost of adding a new language to this templating engine involves weeks of tedious work creating the API specification for that language.

- Even after you pay that upfront cost you do then still need to update those specifications for every client after you make an API change.

Until just a couple months ago, such was the state of affairs for our downtrodden iconoclast.

Lucky for this engineer, though, his team had just acquired a new addition, a very particular kind of lunatic who happened to have experience transpiling protobufs: your dear author.

Step 2: Hacking out a protobuf transpiler in awk

In the weeks that followed, this arguably unstable individual (but very reliable narrator) eschewed traditional tools like YACC (Yet Another Compiler Compiler) and ANTLR (ANother Tool for Language Recognition) in favor of awk and sed.

Why would one use general command line text processing languages rather than something purpose built for such a task? One would assume some combination of familiarity and some special flavor of engineering perversion, but it’s hard to say anything definitive about such a brilliant cipher of a man. Fortunately, awk and sed are tremendously adaptable tools that specialize in matching and transforming text, which is all code really is, so with something like this you can in fact lex, parse, and generate code all in one step if you can stomach what’s necessary:

```awk
!/^ *option/ && !inComment {
    split($0, chars, "");
    for (i=1; i <= length($0); i++) {
        dirtyChar=0; # innocent until proven dirty
        if […]
            fieldLine=substr(fieldLine, 1, length(fieldLine)-1);
            split(fieldLine, fieldFields, " ")
            # print "TYPE: " fieldLine
            if (fieldLine ~ /^ *repeated/) {
                # prefer package qualified
                if (typeMap[fieldFields[1]" "package"."fieldFields[2]] != "") {
                    print " - name: "fieldFields[3]
                    print " arg_type: "typeMap[fieldFields[1]" "package"."fieldFields[2]];
                } else {
                    print " - name: "fieldFields[3]
                    print " arg_type: "typeMap[fieldFields[1]" "fieldFields[2]];
                }
            } else {
                # prefer package qualified
                if (typeMap[package"."fieldFields[1]] != "") {
                    print " - name: "fieldFields[2]
                    print " arg_type: "typeMap[package"."fieldFields[1]];
                } else {
                    print " - name: "fieldFields[2]
                    print " arg_type: "typeMap[fieldFields[1]];
                }
            }
            fieldLine="";
            inEolComment=0;
        }
    }
}
```

So after a couple 20% project sessions, with a number of hacky scripts like this in hand, your author went to that original engineer and boldly claimed that this would solve all the maintainability problems with the existing templating solution.

That original pioneer was quite taken with this idea of transpiling protobufs, and willing to indulge your troubled author’s fascination with awk and sed long enough to win a hackathon prize by using this approach to add another couple languages to the templating engine and some CI magic to generate PRs from commits to the protobuf repo.

Luckily for everyone involved, this modern day Teddy Roosevelt refused to rely on your author’s arcane solution for production client generation, and treated it instead as a reference implementation for what was to come next.
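For readers who don't speak awk, the one-pass "match a line, emit some YAML" idea can be sketched in Python roughly like this. It is a loose paraphrase written for this article's readers rather than a port of the real script: the `TYPE_MAP` entries, the regular expression, and the `List[...]` handling of `repeated` fields are all assumptions made for the example.

```python
import re

# Hypothetical mapping from protobuf scalar types to Python type names.
TYPE_MAP = {"string": "str", "int32": "int", "bool": "bool"}

# Matches lines such as `repeated string tags = 2;` inside a message body.
FIELD_RE = re.compile(r"^\s*(repeated\s+)?([\w.]+)\s+(\w+)\s*=\s*\d+\s*;")


def proto_fields_to_yaml(proto_source: str) -> str:
    """Lex, parse, and emit in one pass, in the spirit of the awk script."""
    out = []
    for line in proto_source.splitlines():
        line = line.split("//", 1)[0]  # drop end-of-line comments
        match = FIELD_RE.match(line)
        if not match:
            continue  # skips `message`, `option`, braces, and blank lines
        repeated, proto_type, name = match.groups()
        arg_type = TYPE_MAP.get(proto_type, proto_type)
        if repeated:
            arg_type = f"List[{arg_type}]"
        out.append(f"- name: {name}")
        out.append(f"  arg_type: {arg_type}")
    return "\n".join(out)


EXAMPLE = """
message CreateUserRequest {
  string email = 1;  // required
  repeated string tags = 2;
}
"""
```

Like the awk version, this works line by line with no real grammar behind it, so nested messages, multi-line fields, and block comments would all trip it up. That is a large part of why it makes a better reference implementation than a production tool.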

Step 3: Writing a proper AST-based transpiler

If upon seeing that block of awk you wondered if this was something discovered in Roswell or the Mariana Trench, you’re not alone, or even that far off. It is wildly unsuitable for production usage since the only real way to debug it is to use your terminal as a REPL (Read Eval Print Loop) and add strategic print statements, and that’s assuming you’ve spent several years learning the many quirks of command line text processing.

However, it should come as no surprise that there is a significantly easier way to handle the lexing and parsing of protobufs. What is it? Well you’ll just have to read the next article in this series to find out.

Good for you, why should I care?

If you don’t have any APIs to manage and you don’t like cool computer stuff you probably don’t care and it’s quite strange you made it this far. If, on the other hand, you read through this because you’re an engineer who might have to maintain auth clients someday or you’re building an API product and trying to figure out how to manage building a myriad of different SDKs, then hopefully this walkthrough of our approach was a helpful primer on the problem space.

If solving problems like this and reading about topics like this excites you, check out our careers page!
