
Agent-to-agent OAuth: a guide for secure AI agent connectivity with MCP

Auth & identity

Aug 18, 2025

Author: Stytch Team

For AI agents to act safely and securely on behalf of users, they first need a way to authenticate with each other. New standards like Model Context Protocol (MCP) provide the “plumbing” for AI agents to connect with external applications, but OAuth 2.0 (and its recent evolution, OAuth 2.1) does the heavy lifting to keep those connections secure and permissioned.

In this guide, we’ll look at agent-to-agent authentication for MCP-based connectivity, using a Node.js example to demonstrate permissioned access. We’ll cover the latest in OAuth standards, infrastructure tradeoffs, user consent, and considerations for organization-wide policies. Finally, we'll look at how Stytch’s Connected Apps can simplify implementing this infrastructure and workflow.

What is agent-to-agent OAuth?

Agent-to-agent OAuth refers to using standard OAuth flows to authorize interactions between AI agents and applications’ APIs. In this model, each agent acts as an OAuth client, while the application’s API is the resource server, and its authorization server issues tokens. Put simply, it’s how an AI agent “logs in” to an app on a user’s behalf, without ever handling the user’s password or an API key. The user grants the agent a scoped access token via OAuth, and the agent then uses that token to call the application’s APIs within the approved limits.

This builds directly on familiar OAuth patterns (like when one app requests access to another app’s data), but with AI agents filling the role of third-party apps. Most importantly, the user stays in control. Tokens are scoped, time-limited, revocable, and subject to organization-wide policies set by admins.

Agent‑to‑Agent OAuth in 60 seconds

- Discovery: agent fetches your authorization server metadata (e.g., `/.well-known/openid-configuration`) to learn the auth & token URLs.
- Dynamic Client Registration (optional): it self-registers, receives a `client_id`.
- User consent: you redirect the human user to the consent screen for scopes like `calendar:read`.
- Token exchange: agent swaps the auth-code for a short-lived access token (plus optional refresh token).
- Signed calls: every request carries `Authorization: Bearer <token>`; your backend validates sig+exp+scope on every call.

Agent‑to‑Agent OAuth vs. Embedded (Agent‑as‑Client) Access

Agent-to-agent is often confused with embedded (agent-as-client) access. The main difference is that agent-to-agent uses external AI agents with their own OAuth tokens whereas embedded agents simply ride on the user’s session:

| | Agent-to-Agent OAuth | Embedded / Agent-as-Client |
|---|---|---|
| Where the agent runs | Separate service (cloud AI assistant) that calls your API over the network | Inside the user’s own session (browser extension, mobile SDK, in‑app helper) |
| Auth principal | The agent is an OAuth client; receives its own scoped token | Re‑uses the human user’s session/token; no separate credential |
| Permission granularity | Fine‑grained, revocable per agent (least privilege) | Same privileges as the hosting user |
| User‑consent UX | Explicit OAuth consent screen | No extra consent step; agent actions ride on existing login |
| Revocation / visibility | User or admin can list and revoke each agent token individually | Must revoke the entire user session or disable the feature |
| Security blast radius | If compromised, impact is limited to that agent’s scopes | If compromised, agent can act with full user privileges |

For embedded (browser/mobile) agents, you can follow OAuth for Native Apps (RFC 8252) and the Browser‑Based Apps specification.

Model Context Protocol (MCP)

MCP provides a framework that makes agent-to-app connections possible in a standardized way. MCP is an open standard (built on JSON-RPC 2.0 as the communication layer) that connects AI systems with external applications.

The common analogy is to think of MCP as a “USB-C port” for AI services. It defines a client-server architecture: the AI agent runs an MCP client, and the application exposes an MCP server. The agent can query the server for available “tools” or actions and invoke them.

However, before any sensitive data can be exchanged or actions executed, the agent needs authorization to access the user’s account on that server. That’s where OAuth comes in. In fact, recent updates to the MCP specification explicitly include OAuth 2.0/2.1 support for authentication.

Why OAuth for AI agent connectivity?

So why do we need OAuth at all? Can’t AI agents just use API keys or some new AI-specific auth method?

The reality is that OAuth already solves the core challenge: delegating limited, revocable access to a user’s resources. AI agents that are operating on user data have very similar needs to traditional third-party apps in terms of permissions and security. That includes:

- Delegated access without sharing credentials: Instead of giving an AI agent your account password or a raw API key (which would grant full access indefinitely), OAuth allows the agent to obtain a token with only the permissions you approve. The agent never sees your actual credentials – it just gets a token. This minimizes risk.

- Granular permission scopes: OAuth scopes let us precisely control what the agent can do. For example, you might allow an agent to “read your calendar events” but not “delete events” or “access your contacts.” These granular scopes map to specific API permissions, so the agent gets only what it needs.

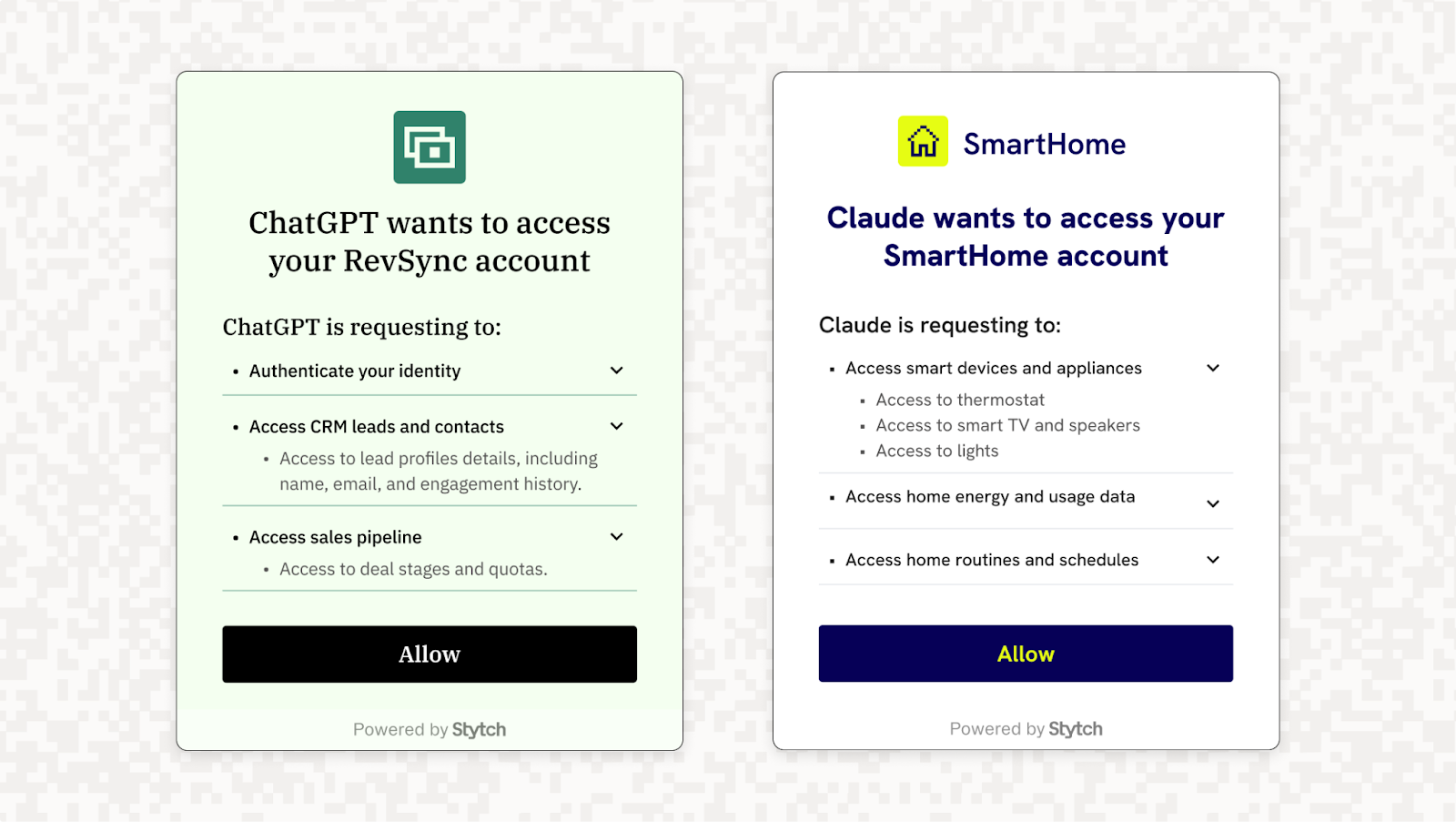

- User consent and visibility: OAuth inherently involves the user in the loop, where the user needs to explicitly grant the agent access to their account from a consent screen. This makes the process transparent, where the user sees what permissions are being requested and can deny anything they’re uncomfortable with. In an agent scenario, this helps maintain trust.

- Limited and revocable tokens: OAuth access tokens typically expire after a short time, and refresh tokens or re-authentication are required to continue access. Even if not expired, tokens can be revoked by the user or the service at any time. This built-in lifecycle means an agent’s access isn’t open-ended, unlike an API key that might remain valid until manually revoked. Using OAuth, an organization can enforce short token lifetimes and easily revoke or scope down an agent’s access.

These are the reasons why OAuth became the industry standard for third-party app integrations, and they apply equally (if not more so) to AI agents.

We’ve already seen partial solutions in the wild. Early AI integrations like ChatGPT plugins often relied on API keys or custom OAuth-like flows, leading to a patchwork of authentication patterns. ChatGPT agent isn’t much better: authentication is handled through a virtual interface, where agents prompt users to log in or approve actions, rather than using standardized OAuth handoffs. MCP changes that. By adopting OAuth 2.0, it frees AI agents from needing a different auth mechanism for every tool; instead they follow one uniform flow to obtain scoped tokens for any MCP-compatible service.

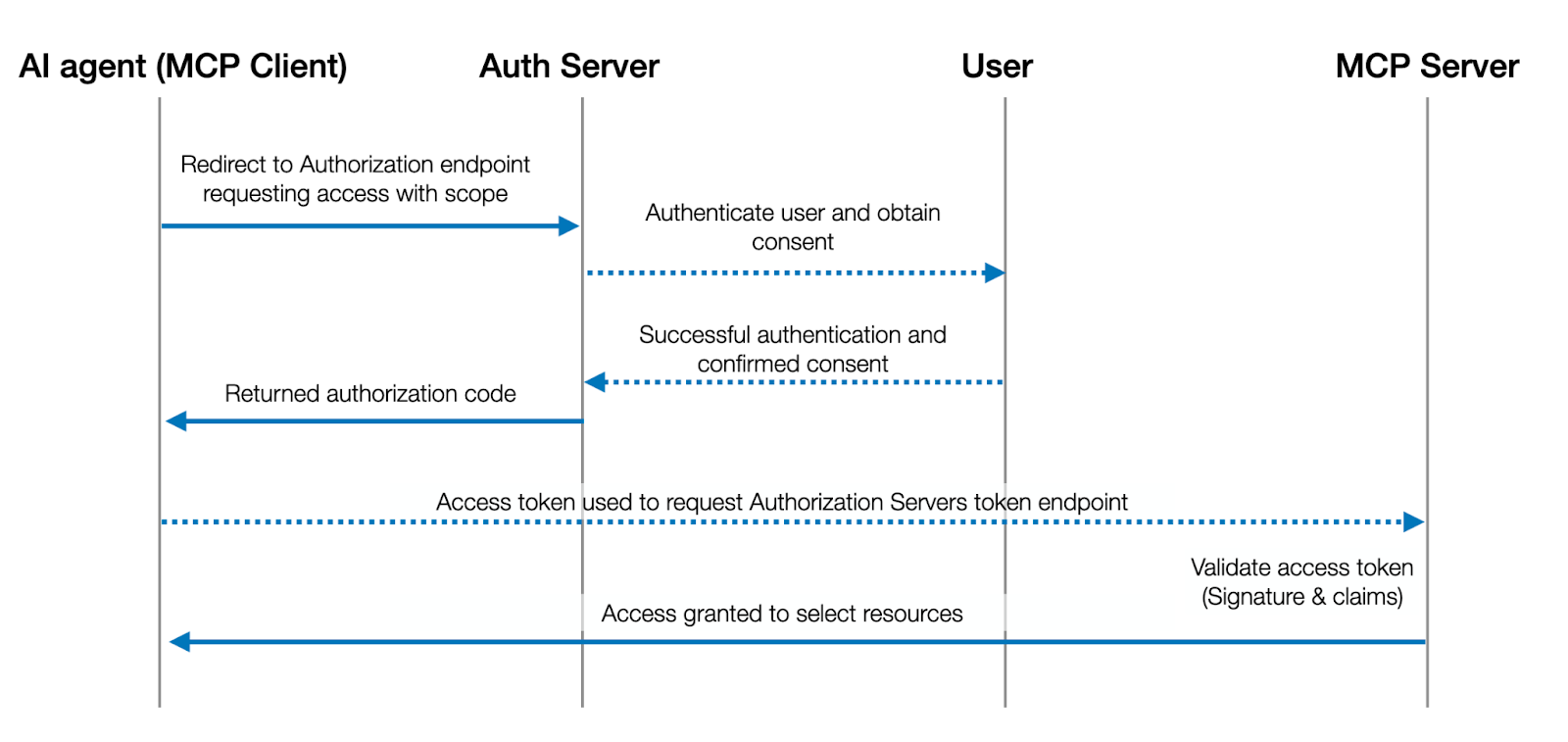

How MCP uses OAuth to connect agents and services

MCP was designed to make it easy for AI agents to discover and use external services. With OAuth now integrated, the flow for an AI agent to connect to an MCP server (an application’s endpoint) looks very much like a standard OAuth 2.0 web application flow, with a few twists to accommodate autonomous clients. Here’s how a typical agent-to-agent OAuth flow via MCP works, step by step:

Discovery of OAuth endpoints

When an AI agent (MCP client) wants to connect to an MCP server, it first needs to know how to authorize. MCP addresses this through an OAuth 2.0 Authorization Server Metadata document (RFC 8414). The MCP server hosts a /.well-known/oauth-authorization-server JSON document advertising the URLs for authorization, token exchange, and dynamic client registration. The agent fetches this document to bootstrap the OAuth flow (similar to how OIDC discovery works).
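
As a rough sketch of this step, an agent might build the metadata URL and pull out the endpoints it needs. The helper names (`metadataUrl`, `pickEndpoints`, `discover`) and the example issuer are assumptions made for illustration; the field names come from RFC 8414 and RFC 7591.

```javascript
// Sketch only: helper names and the example issuer are hypothetical.
// Build the RFC 8414 metadata URL for an issuer that has no path component.
function metadataUrl(issuer) {
  return new URL('/.well-known/oauth-authorization-server', issuer).toString();
}

// Keep only the endpoints the agent needs; fail fast if a required one is missing.
function pickEndpoints(metadata) {
  for (const field of ['issuer', 'authorization_endpoint', 'token_endpoint']) {
    if (!metadata[field]) throw new Error(`metadata is missing ${field}`);
  }
  return {
    issuer: metadata.issuer,
    authorizationEndpoint: metadata.authorization_endpoint,
    tokenEndpoint: metadata.token_endpoint,
    // registration_endpoint is optional: not every server supports DCR
    registrationEndpoint: metadata.registration_endpoint ?? null,
  };
}

// Fetch and check the metadata (Node 18+ provides a global fetch).
async function discover(issuer) {
  const res = await fetch(metadataUrl(issuer));
  if (!res.ok) throw new Error(`discovery failed with HTTP ${res.status}`);
  const metadata = await res.json();
  // RFC 8414 requires the returned issuer to match the one that was queried
  if (metadata.issuer !== issuer) throw new Error('issuer mismatch');
  return pickEndpoints(metadata);
}
```

Calling `discover('https://auth.example.com')` would then give the agent everything it needs for the registration and authorization steps.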

Dynamic Client Registration (DCR)

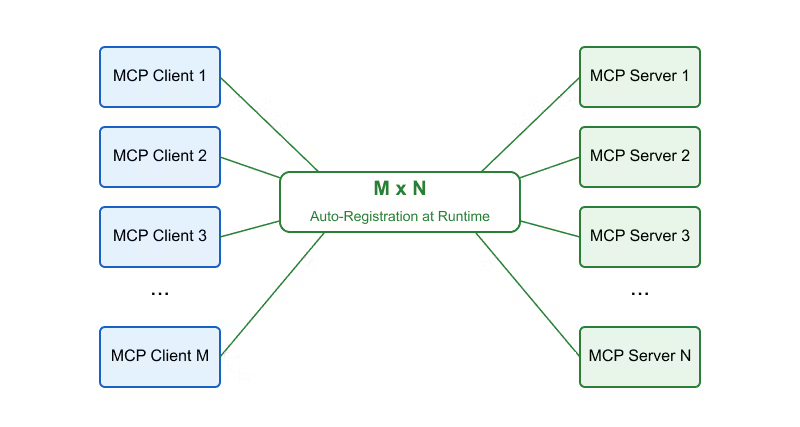

In a world of many, many AI agents, you can’t manually pre-register every one of them with every service. DCR is an extension of OAuth 2.0, allowing an agent to register itself on the fly with the authorization server.

Using the metadata from discovery, the agent sends a registration request providing details like its name and redirect URI. In return, it gets a Client ID (and potentially a client secret if needed). This step is important for scalability. It solves the M×N problem of many agents and many services by making registration self-serve.

As an example, with Stytch Connected Apps, the registration endpoint is exposed at: POST https://${projectDomain}/v1/oauth2/register. Note that PKCE is required for dynamically registered clients. Reading this URL from the discovery document (registration_endpoint) keeps the agent OIDC-compliant and avoids hard-coding API URLs.
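
To make the registration request concrete, here is a minimal sketch of what an agent might send. The field names follow RFC 7591; the function names, client name, and redirect URI are placeholders, and which fields a given provider requires or accepts is something to confirm in its documentation.

```javascript
// Sketch only: the client name and redirect URI passed in are placeholders.
// Builds an RFC 7591 registration request for a public client (no secret).
function buildRegistrationRequest(registrationEndpoint, { name, redirectUri }) {
  return {
    url: registrationEndpoint,
    method: 'POST',
    headers: { 'Content-Type': 'application/json' },
    body: JSON.stringify({
      client_name: name,
      redirect_uris: [redirectUri],
      grant_types: ['authorization_code', 'refresh_token'],
      response_types: ['code'],
      token_endpoint_auth_method: 'none', // public client: relies on PKCE instead
    }),
  };
}

// Send the request and return the issued client_id.
async function registerClient(registrationEndpoint, client) {
  const { url, ...init } = buildRegistrationRequest(registrationEndpoint, client);
  const res = await fetch(url, init);
  if (!res.ok) throw new Error(`registration failed with HTTP ${res.status}`);
  const { client_id } = await res.json();
  return client_id;
}
```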

Authorization (user consent)

Once registered, the agent initiates the OAuth authorization code flow (typically with PKCE, since most agents are public clients without a client secret). This means directing the user to the OAuth authorization endpoint (usually via a browser or an in-app webview).

The user will see a consent screen asking them to approve the agent’s requested scopes on their account. For example, an AI coding assistant might request read/write access to a repository or database on the user’s behalf. The user must authenticate (if not already logged in) and then consent to these specific scopes. Only if the user clicks “Allow” will the process continue.
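
The sketch below shows how an agent might prepare this step: generate a PKCE verifier and challenge, then build the URL the user is sent to. The parameter names are standard OAuth (RFC 6749, RFC 7636); the helper names and the shape of the `p` object are assumptions for illustration.

```javascript
// Sketch only: helper names and the `p` parameter object are hypothetical.
// A dynamic import is used so the snippet runs in both CommonJS and ES modules.

// Derive the S256 code challenge from a verifier (RFC 7636, section 4.2).
async function challengeFor(verifier) {
  const { createHash } = await import('node:crypto');
  return createHash('sha256').update(verifier).digest('base64url');
}

// Create a fresh verifier/challenge pair; the verifier never leaves the agent
// until the token request.
async function createPkcePair() {
  const { randomBytes } = await import('node:crypto');
  const verifier = randomBytes(32).toString('base64url'); // 43 characters
  return { verifier, challenge: await challengeFor(verifier) };
}

// Build the URL the user's browser is sent to for login and consent.
function buildAuthorizationUrl(authorizationEndpoint, p) {
  const url = new URL(authorizationEndpoint);
  url.search = new URLSearchParams({
    response_type: 'code',
    client_id: p.clientId,
    redirect_uri: p.redirectUri,
    scope: p.scopes.join(' '),
    state: p.state, // random value, checked again on the redirect back
    code_challenge: p.challenge,
    code_challenge_method: 'S256',
  }).toString();
  return url.toString();
}
```

The agent keeps `verifier` and `state` in memory (or session storage) until the redirect comes back, since both are needed to complete the exchange.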

Token exchange (issuance)

After the user consents, the authorization server (the app’s IdP service) issues an authorization code to the agent (usually via a redirect). The agent then exchanges this code for tokens by calling the token endpoint. The result is an access token that the agent can use to authenticate to the MCP server, and often a refresh token if long-term access is needed (indicated by scope like offline_access). The access token is typically a JWT or opaque token representing the granted permissions. At this point, the agent has an access token representing the user’s authorization.

For redirect flows, verify the iss parameter from the authorization response (RFC 9207) to prevent mix-up attacks.
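
A minimal sketch of the agent side of this step follows: check the redirect, then trade the code for tokens. The grant parameters are standard OAuth; the helper names and the `expected` and `p` objects are illustrative assumptions. Note that this sketch tolerates a missing iss parameter, whereas RFC 9207 asks clients to reject such responses when the server advertises support for it.

```javascript
// Sketch only: helper names and the `expected` / `p` objects are hypothetical.
// Check the redirect: state must match, and iss (RFC 9207) must be the expected server.
function readAuthorizationResponse(redirectUrl, expected) {
  const params = new URL(redirectUrl).searchParams;
  if (params.get('error')) throw new Error(`authorization failed: ${params.get('error')}`);
  if (params.get('state') !== expected.state) throw new Error('state mismatch');
  const iss = params.get('iss');
  if (iss !== null && iss !== expected.issuer) throw new Error('issuer mismatch');
  return params.get('code');
}

// Form-encoded body for the authorization_code grant with PKCE.
function buildTokenRequestBody(p) {
  return new URLSearchParams({
    grant_type: 'authorization_code',
    code: p.code,
    redirect_uri: p.redirectUri,
    client_id: p.clientId,
    code_verifier: p.verifier,
  });
}

// POST the body to the token endpoint; fetch sets the form content type
// automatically for URLSearchParams bodies.
async function exchangeCode(tokenEndpoint, p) {
  const res = await fetch(tokenEndpoint, { method: 'POST', body: buildTokenRequestBody(p) });
  if (!res.ok) throw new Error(`token request failed with HTTP ${res.status}`);
  return res.json(); // typically { access_token, expires_in, refresh_token?, ... }
}
```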

MCP connection & API calls

Armed with the access token, the AI agent can now establish a connection to the MCP server (e.g., opening a long-lived SSE connection for a remote MCP session). The agent presents the token (usually as a Bearer token in an Authorization header) when connecting. The MCP server verifies the token, and if valid, the agent is authenticated as acting on behalf of that user with the specific scopes. The agent can now invoke the server’s tools/resources within the limits of those scopes – for example, fetching data or performing an action that the user authorized. All such requests will include the token for authentication.

Where supported, bind tokens using DPoP to prevent replay (or mTLS in confidential-client settings).

Throughout this flow, OAuth 2.0 underpins the trust and consent: the app trusts that the token was issued by its authorization server (with the user’s approval), and the agent uses that token to prove it’s allowed to access resources. This standardized process replaces ad-hoc API key sharing or insecure workarounds. As a mental model, think of the access token as a signed visitor badge: it only opens the rooms the user approved, expires after an hour, and security can void it instantly. The AI agent flashes that badge at every door (API call); the building’s turnstiles (your backend) check it each time.

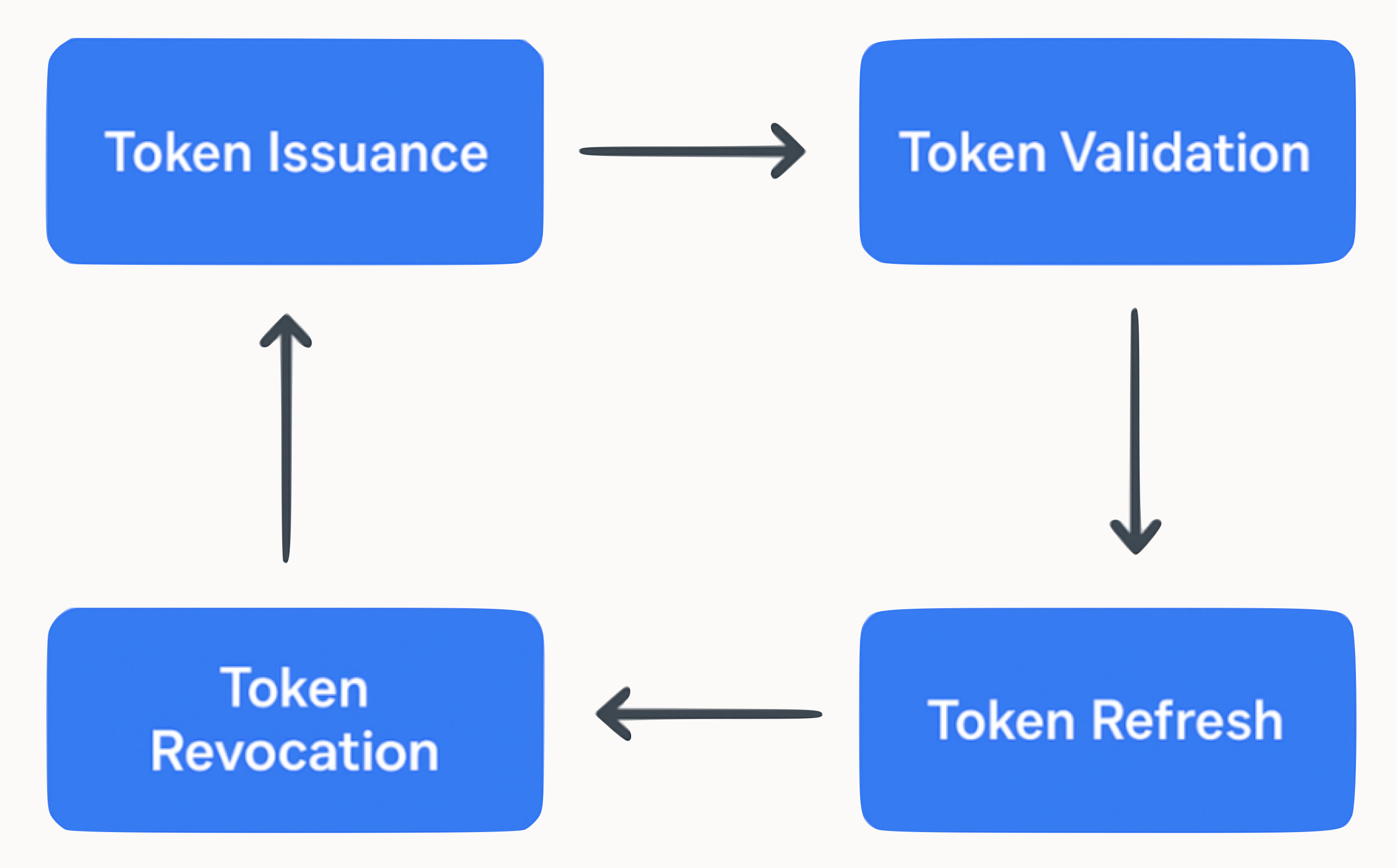

Token lifecycle in agent-to-agent OAuth

Once an AI agent obtains an access token via OAuth, that token follows a typical lifecycle. Understanding this process is key to building secure, reliable agent integrations. The OAuth token lifecycle forms a continuous loop of issuance, validation, refresh, and revocation.

Token issuance

Tokens are issued by the authorization server after user consent. In agent scenarios, access tokens are usually short-lived (e.g., 1 hour) and often JWTs, making them easy to validate. Tokens carry claims such as sub (the user), aud (the intended audience), and scope (the granted permissions) and, if using OpenID Connect, may be accompanied by an ID token. Agents may also receive a refresh token (e.g., when offline_access is requested) to obtain new access tokens without re-prompting the user.

Use Authorization Code + PKCE for all public clients (agents); the implicit grant is deprecated.

Use PAR (Pushed Authorization Requests) and consider JAR to keep request parameters off the front channel and ensure they’re integrity-protected.

Token validation

Every time the AI agent calls the MCP server, the token needs to be validated: its signature, issuer, audience, and expiry must check out, and its scopes must cover the request. This can be done either locally (e.g. verifying JWTs using a JWKS) or remotely (via token introspection).

JWTs are common in agent flows because they allow the MCP server to validate tokens without network calls. With opaque tokens, introspection is required to check validity and scopes. When using introspection, call Stytch’s endpoint at POST https://${projectDomain}/v1/oauth2/introspect. Client authentication uses the Connected App’s client_id and client_secret. Regardless of the method, no agent request should be trusted without validating the token on every call or session.
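
For the introspection path, a request could be assembled roughly as follows. The form-encoded token parameter and the active and scope response fields come from RFC 7662. Sending the client credentials via HTTP Basic is an assumption here (some servers expect them in the body instead), and the helper names are illustrative.

```javascript
// Sketch only: HTTP Basic client authentication is an assumption; check how
// your provider expects client_id and client_secret to be sent.
function buildIntrospectionRequest(introspectionEndpoint, token, clientId, clientSecret) {
  const basic = Buffer.from(`${clientId}:${clientSecret}`).toString('base64');
  return {
    url: introspectionEndpoint,
    method: 'POST',
    headers: { Authorization: `Basic ${basic}` },
    body: new URLSearchParams({ token }),
  };
}

// Decide whether an introspection response authorizes the required scope.
function isAuthorized(introspection, requiredScope) {
  if (introspection.active !== true) return false; // revoked, expired, or unknown
  const scopes = (introspection.scope ?? '').split(' ');
  return scopes.includes(requiredScope);
}
```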

- For redirect flows, verify the `iss` parameter from the authorization response (RFC 9207) to prevent mix-up attacks.
- If using JWT access tokens, follow RFC 9068 (typical `typ` header is `at+jwt`), and validate `iss`, `aud`, `exp`, and signature against the AS JWKS.
- When using introspection, prefer JWT-secured introspection responses (RFC 9701) if supported.

Where supported, bind tokens using DPoP to prevent replay (or mTLS in confidential-client settings).

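```javascript
// Express middleware: validate every agent call.
// Assumes an existing Express `app`; the JWKS_URL, ISSUER, and AUDIENCE
// environment variables are placeholders for your authorization server's values.
import { jwtVerify, createRemoteJWKSet } from 'jose';

const JWKS = createRemoteJWKSet(new URL(process.env.JWKS_URL));

app.use('/mcp', async (req, res, next) => {
  const token = req.header('authorization')?.replace(/^Bearer\s+/i, '');
  if (!token) return res.status(401).send('Missing token');
  try {
    // Verifies signature and exp; iss and aud are enforced through the options
    const { payload } = await jwtVerify(token, JWKS, {
      issuer: process.env.ISSUER,
      audience: process.env.AUDIENCE,
    });
    // scope is a space-delimited string, so compare whole entries, not substrings
    const scopes = (payload.scope || '').split(' ');
    if (!scopes.includes('calendar:read')) return res.status(403).send('Insufficient scope');
    req.userId = payload.sub; // the user the agent is acting for
    next();
  } catch (_) {
    res.status(401).send('Invalid or expired token');
  }
});
```

Token refresh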

For ongoing access, agents use refresh tokens to renew access tokens when they expire. Refresh tokens are issued alongside access tokens (often with the offline_access scope) and must be stored securely. When the access token expires, the agent trades the refresh token for a new one at the token endpoint. Many systems rotate refresh tokens on each use to reduce replay risk. This enables long-running, autonomous agents to stay authenticated without re-engaging the user. Unused refresh tokens should be discarded to minimize risk.
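
The refresh cycle can be sketched as three small helpers: build the request, apply the response (including rotation), and decide when to refresh. The grant parameters are standard OAuth; `store` stands in for whatever secure storage the agent uses, and its shape is an assumption.

```javascript
// Sketch only: `store` is a hypothetical { accessToken, refreshToken, expiresAt } record.
function buildRefreshRequestBody(store, clientId) {
  return new URLSearchParams({
    grant_type: 'refresh_token',
    refresh_token: store.refreshToken,
    client_id: clientId,
  });
}

// Apply a token response: with rotation, the new refresh token replaces the old one.
function applyTokenResponse(store, response, now = Date.now()) {
  return {
    accessToken: response.access_token,
    // If the server did not rotate, keep using the existing refresh token
    refreshToken: response.refresh_token ?? store.refreshToken,
    expiresAt: now + response.expires_in * 1000,
  };
}

// Refresh slightly early so in-flight calls do not race the expiry.
function needsRefresh(store, now = Date.now(), skewMs = 30_000) {
  return now >= store.expiresAt - skewMs;
}
```

Persisting the result of `applyTokenResponse` right away matters when rotation is on: the old refresh token is typically invalid once the new one has been issued.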

Token revocation

Revocation lets users or admins terminate an agent’s access at any time, which is especially important in security incidents or policy changes. OAuth provides a revocation endpoint to invalidate access or refresh tokens. Stytch exposes this at POST https://${projectDomain}/v1/oauth2/revoke (RFC 7009), authenticated with the Connected App’s client_id and client_secret. JWT-based systems may also use blacklists or token version checks.

Revocation is essential in agent integrations. An agent should lose access immediately once its token is revoked. MCP servers can periodically check token status (especially for long-lived connections) to enforce revocation in near real-time. Disabling refresh tokens prevents the agent from renewing access.
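
Here is a small sketch of both halves: the revocation request body (RFC 7009) and a guard an MCP server could use to re-check token status on long-lived connections. The `checkActive` callback is a hypothetical hook, for example an introspection call, and the one-minute interval is an arbitrary choice.

```javascript
// Sketch only: helper names, the checkActive hook, and the interval are assumptions.
// Form-encoded body for an RFC 7009 revocation request.
function buildRevocationBody(token, hint = 'refresh_token') {
  return new URLSearchParams({ token, token_type_hint: hint });
}

// For long-lived connections: re-validate the token at most once per interval.
function createRevocationGuard(checkActive, intervalMs = 60_000) {
  let lastChecked = -Infinity; // forces a check on the first call
  let active = true;
  return async function stillActive(token, now = Date.now()) {
    if (now - lastChecked >= intervalMs) {
      active = await checkActive(token); // e.g. an introspection request
      lastChecked = now;
    }
    return active;
  };
}
```

The interval is the trade-off knob: a shorter one enforces revocation faster at the cost of more calls to the authorization server.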

Discoverability

Advertise your resource server’s capabilities at /.well-known/oauth-protected-resource (RFC 9728) so agents can discover required metadata.
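
As an illustration, a protected resource metadata document might look like the object below. The field names and the `resource_metadata` challenge parameter come from RFC 9728; every URL and scope value is a placeholder.

```javascript
// Sketch only: all URLs and scope names are placeholders.
// Served as JSON from /.well-known/oauth-protected-resource
const protectedResourceMetadata = {
  resource: 'https://api.example.com/mcp',
  authorization_servers: ['https://auth.example.com'],
  scopes_supported: ['calendar:read', 'calendar:write'],
  bearer_methods_supported: ['header'],
};

// On a 401, point the agent at the metadata so it can start discovery.
function wwwAuthenticateHeader(metadataUrl) {
  return `Bearer resource_metadata="${metadataUrl}"`;
}
```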

Managing the full lifecycle can be complex, but many identity platforms handle it for you. For example, Stytch Connected Apps can manage JWT issuance, provide JWKS endpoints, rotate refresh tokens, and allow for instant revocation, so developers can focus on logic while leaving lifecycle enforcement to the identity layer.

Example implementation in Node.js

To solidify these concepts, let’s walk through a simple Node.js example of implementing agent-to-agent OAuth for an MCP server. In this scenario, our application uses Stytch as the OAuth provider (via Connected Apps), and we’ll set up an MCP server that accepts OAuth tokens from agents. We need to do a few things in code: consume OAuth discovery, validate JWT access tokens on incoming requests, and possibly handle client registration (though here it’s mostly handled by the provider). Below is a snippet illustrating key parts of an implementation like this (using Express-style middleware for the server routes):

```javascript
// Import Express and the libraries for JWT verification
import express from 'express';
import { jwtVerify, createRemoteJWKSet } from 'jose';

const app = express();

// Cache discovery and JWK Set
let discovery = null;
let jwks = null;

// Load OIDC discovery metadata on first use, then serve it from the cache
async function getDiscovery() {
  if (!discovery) {
    const url = `https://${process.env.STYTCH_PROJECT_DOMAIN}/.well-known/openid-configuration`;
    discovery = await fetch(url).then(r => r.json());
  }
  return discovery;
}

// JWT validation function: uses Stytch's JWKS to verify token signature and claims
async function validateStytchJWT(token, env) {
  const d = await getDiscovery();
  if (!jwks) {
    jwks = createRemoteJWKSet(new URL(d.jwks_uri));
  }
  const { payload } = await jwtVerify(token, jwks, {
    issuer: d.issuer, // e.g. https://login.myapp.com
    audience: env.STYTCH_PROJECT_ID, // aud contains your project_id
    algorithms: ['RS256'],
  });
  const scopes = (payload.scope || '').split(' ').filter(Boolean);
  return { sub: payload.sub, scopes };
}

// Protected route example: an MCP endpoint that requires a valid access token
app.use('/mcp', async (req, res, next) => {
  const authHeader = req.headers['authorization'] || '';
  const token = authHeader.replace(/^Bearer\s+/i, '');
  if (!token) {
    return res.status(401).send('Missing token');
  }
  try {
    const { sub, scopes } = await validateStytchJWT(token, process.env);
    req.user = sub; // the user's ID (subject claim)
    req.tokenScopes = scopes;
    next();
  } catch (err) {
    return res.status(401).send('Invalid or expired token');
  }
});

// ... define MCP server handlers (tools/resources) under /mcp routes ...
// e.g., endpoints that allow the agent to perform certain actions if authorized
```

In this code snippet there are three primary steps:

- Discovery – Instead of exposing your own `.well-known/oauth-authorization-server` metadata, the server consumes Stytch’s standard OIDC discovery document at your project domain. This provides the correct `issuer`, `authorization_endpoint`, `token_endpoint`, `registration_endpoint`, and `jwks_uri`. Agents should fetch these values from discovery rather than hardcoding.
- Token check – `validateStytchJWT` downloads the signing keys from the discovery-provided `jwks_uri` and verifies each JWT’s signature, `iss`, `aud`, and `exp`. It also parses the `scope` claim (space-delimited string) into an array for downstream enforcement. If anything fails, the request is rejected with `401 Unauthorized`.
- Route guard – Middleware on `/mcp/*` requires `Authorization: Bearer <token>`. On success, it attaches the `sub` and `scopes` to the request object. If validation fails, the connection is rejected.

Once the middleware is in place, each MCP endpoint can simply check req.tokenScopes and act as req.user. All OAuth heavy-lifting (login, consent UI, token minting, refresh, and revocation) is handled by Stytch Connected Apps. Your server’s only jobs are: consume discovery, verify JWTs, and enforce scopes.
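
One way to do that per-endpoint scope check is a small middleware factory, sketched here. `requireScope` and the `listEvents` handler in the usage comment are hypothetical names; the sketch assumes the route guard has already populated `req.tokenScopes`.

```javascript
// Sketch only: assumes an earlier middleware has set req.tokenScopes to an array.
function requireScope(scope) {
  return (req, res, next) => {
    if (!Array.isArray(req.tokenScopes) || !req.tokenScopes.includes(scope)) {
      // 403: the token is valid but does not carry the needed permission
      return res.status(403).send(`Missing scope: ${scope}`);
    }
    next();
  };
}

// Usage (listEvents is a hypothetical handler):
// app.get('/mcp/events', requireScope('calendar:read'), listEvents);
```

Returning 403 rather than 401 here tells the agent that re-authenticating will not help; it needs to request a broader grant.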

The authorization endpoint (consent screen) is hosted by your application and configured in Stytch. Clients discover its URL from OIDC discovery: the agent opens the consent page, the user approves, Stytch redirects back with a code, and the agent swaps it for a token. No extra exchange logic is needed on your Node server.

Six best practices for agent-to-agent OAuth

Designing an agent-to-agent OAuth integration involves more than just the mechanics of OAuth. Here are some important best practices and considerations to keep in mind:

1. Minimal scopes & principle of least privilege

Only grant an AI agent the scopes it truly needs, nothing more. For example, if your app’s API has read and write capabilities, consider offering separate read-only vs read-write scopes, rather than one blanket scope. Agents often perform narrow tasks, so they should have narrowly scoped access. This limits potential damage if an agent malfunctions or oversteps. It also gives users confidence that the agent isn’t getting unfettered access.

Granular scopes are an OAuth strength that maps well to agent permissions. For task-specific, fine-grained permissions, consider Rich Authorization Requests (RAR) (authorization_details) instead of (or in addition to) coarse scope strings.
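
To show what RAR adds over a plain scope string, here is a sketch of an authorization_details value. The `type`, `actions`, and `locations` fields are defined by RFC 9396; the specific values and the helper name are made up for illustration, and the server has to support RAR for this to have any effect.

```javascript
// Sketch only: the type, action, and location values are hypothetical.
const authorizationDetails = [
  {
    type: 'calendar_access', // a type your API would define
    actions: ['read'],
    locations: ['https://api.example.com/calendars/primary'],
  },
];

// Adds the JSON-encoded details to an authorization URL.
function withAuthorizationDetails(authorizationUrl, details) {
  const url = new URL(authorizationUrl);
  url.searchParams.set('authorization_details', JSON.stringify(details));
  return url.toString();
}
```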

2. Awareness and control

Make it easy for users to see and manage their connected AI agents. This could mean providing a UI in your app where they can review which agents have access (much like reviewing authorized apps in your Google or GitHub account settings). Include details like when the agent last accessed data and what scopes it has. And critically, allow users to revoke access with a click. For example, Stytch’s Connected Apps provides a dashboard and APIs for exactly this – central visibility into all connected clients and the ability to revoke tokens instantly.

Even outside of any product, your system should record consents and offer a way to revoke. Remember, trust in AI agents can be tenuous; giving users ongoing control helps maintain that trust.

3. Dynamic client policies

Dynamic registration is powerful, but you might not want to allow any agent to register with your service unchecked. Consider implementing policies. For example, you might auto-approve known agent platforms (like specific well-known agent frameworks), but require manual review or admin approval for unknown clients.

Some IdPs allow whitelisting or restricting DCR (for example, enterprise settings might limit which domains can register clients). At a minimum, you may want to log each registration and prompt the user “Agent X from developer Y is requesting to connect”. The MCP spec and tools don’t enforce this kind of policy. They leave it to the app’s discretion.
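
Such a policy can be as simple as a function that inspects each registration request before it is accepted. In this sketch, the three decision labels and the trusted-host list are assumptions for illustration; a real policy would likely look at more than redirect URIs.

```javascript
// Sketch only: the decision labels and the trusted-host set are hypothetical.
function isLoopback(hostname) {
  return hostname === 'localhost' || hostname === '127.0.0.1';
}

// Returns 'approve', 'review', or 'reject' for an RFC 7591 registration request.
function evaluateRegistration(request, trustedHosts) {
  const uris = (request.redirect_uris ?? []).map((u) => new URL(u));
  if (uris.length === 0) return 'reject';
  // Plain http is only tolerated for loopback redirects (native or local agents)
  if (uris.some((u) => u.protocol !== 'https:' && !isLoopback(u.hostname))) return 'reject';
  // Auto-approve only when every redirect goes to a known platform
  return uris.every((u) => trustedHosts.has(u.hostname)) ? 'approve' : 'review';
}
```

A 'review' result is where the logging and user-facing prompt described above would come in.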

4. Security monitoring and rate limiting

With agents acting on user accounts, you should monitor their activity similar to how you would monitor an integration or even a user. Unusual behavior (e.g. an agent making hundreds of requests per second, or accessing data it normally wouldn’t) could indicate a buggy or malicious agent.

It’s important to add guardrails, such as per-agent rate limits and anomaly detection, and to apply the same business-logic checks a human user faces. Bottom line: treat agent traffic with zero trust. Validate tokens every time, enforce scopes strictly, and watch for abuse.
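
A per-agent rate limit can start out very simple. The fixed-window limiter below is one possible sketch, kept in memory for brevity; the class name is hypothetical, and keying by the token's client identifier is an assumption about how you identify agents.

```javascript
// Sketch only: an in-memory fixed-window limiter; a multi-instance deployment
// would need shared storage instead of a local Map.
class AgentRateLimiter {
  constructor(limit, windowMs) {
    this.limit = limit;
    this.windowMs = windowMs;
    this.windows = new Map(); // agentId -> { start, count }
  }

  // Returns true if the call is allowed, false if the agent is over its limit.
  allow(agentId, now = Date.now()) {
    const current = this.windows.get(agentId);
    if (!current || now - current.start >= this.windowMs) {
      this.windows.set(agentId, { start: now, count: 1 });
      return true;
    }
    current.count += 1;
    return current.count <= this.limit;
  }
}
```

A rejected call would normally be answered with HTTP 429 so that well-behaved agents back off.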

5. Compliance and privacy

If your application deals with sensitive data (health info, financial info, etc.), make sure that allowing AI agents access via OAuth complies with any regulations. The user’s consent should clearly explain what data the agent may access or actions it may take. Maintain audit logs of agent actions performed under a user’s token. This can be quite important for compliance audits or investigating issues. OAuth gives you a framework to tie actions to a token (and thus a user+agent), which is better than an API key that might not be user-specific.

6. Consistency with human users

Try to align your agent access patterns with normal user access patterns. For example, if certain data is only accessible to users of a certain role or subscription tier, an agent acting for that user should be bound by the same rule.

OAuth scopes can encapsulate some of this, but your application logic should also check the user’s permissions. The agent is essentially another client acting as the user. It shouldn’t magically bypass checks. One way to enforce this is to include the user’s roles/permissions in the token claims (a common practice with JWTs and custom claims), so that when the agent calls the API, your authorization layer sees “user is admin or not, user has premium subscription or not,” etc., and can allow or deny actions accordingly.
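
A sketch of that combined check follows: an action is allowed only if the token carries the right scope and the user holds a suitable role. The `roles` claim name, the policy table, and the action names are all assumptions for illustration.

```javascript
// Sketch only: the policy table, action names, and the `roles` custom claim are hypothetical.
const POLICY = new Map([
  ['events:list', { scope: 'calendar:read', roles: ['member', 'admin'] }],
  ['events:delete', { scope: 'calendar:write', roles: ['admin'] }],
]);

// Both conditions must hold: the agent was granted the scope,
// and the user it acts for is allowed to perform the action.
function canPerform(action, claims) {
  const rule = POLICY.get(action);
  if (!rule) return false; // unknown actions are denied by default
  const scopes = (claims.scope ?? '').split(' ');
  const roles = claims.roles ?? [];
  return scopes.includes(rule.scope) && roles.some((r) => rule.roles.includes(r));
}
```

With this shape, an agent holding calendar:write on behalf of a non-admin user still cannot delete events, which is the behavior a human user in the same role would see.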

Conclusion

Agent-to-agent OAuth is quickly becoming the foundation for how AI agents securely integrate with modern applications. By combining OAuth 2.0 with protocols like MCP, we get both seamless connectivity and a battle-tested security model — enabling AI assistants to operate across users’ digital lives with consent and control.

This article outlined how agents use OAuth to obtain access tokens, manage their lifecycle, and securely interact with APIs. Agent-to-agent OAuth isn’t a reinvention, but a smart extension of existing standards into the AI space. Tools like Stytch Connected Apps and other identity platforms now make it easier than ever to support these patterns — handling token issuance, JWKS publishing, introspection, and revocation endpoints in compliance with RFCs (7662, 7009).

As AI agents grow in capability, building agent-ready APIs will be a key differentiator. With Authorization Code + PKCE as the default (implicit flows deprecated), the option to use RAR for fine-grained permissions, DPoP or mTLS for token binding, and .well-known/oauth-protected-resource metadata for discoverability, developers have a clear and secure path forward.

With OAuth as the foundation, MCP as the transport, and Stytch Connected Apps providing the identity layer, you can build the next generation of connected, intelligent apps with both developer simplicity and enterprise-grade security.
