
Top techniques for effective API rate limiting

Auth & identity

Oct 24, 2024

Author: Alex Lawrence

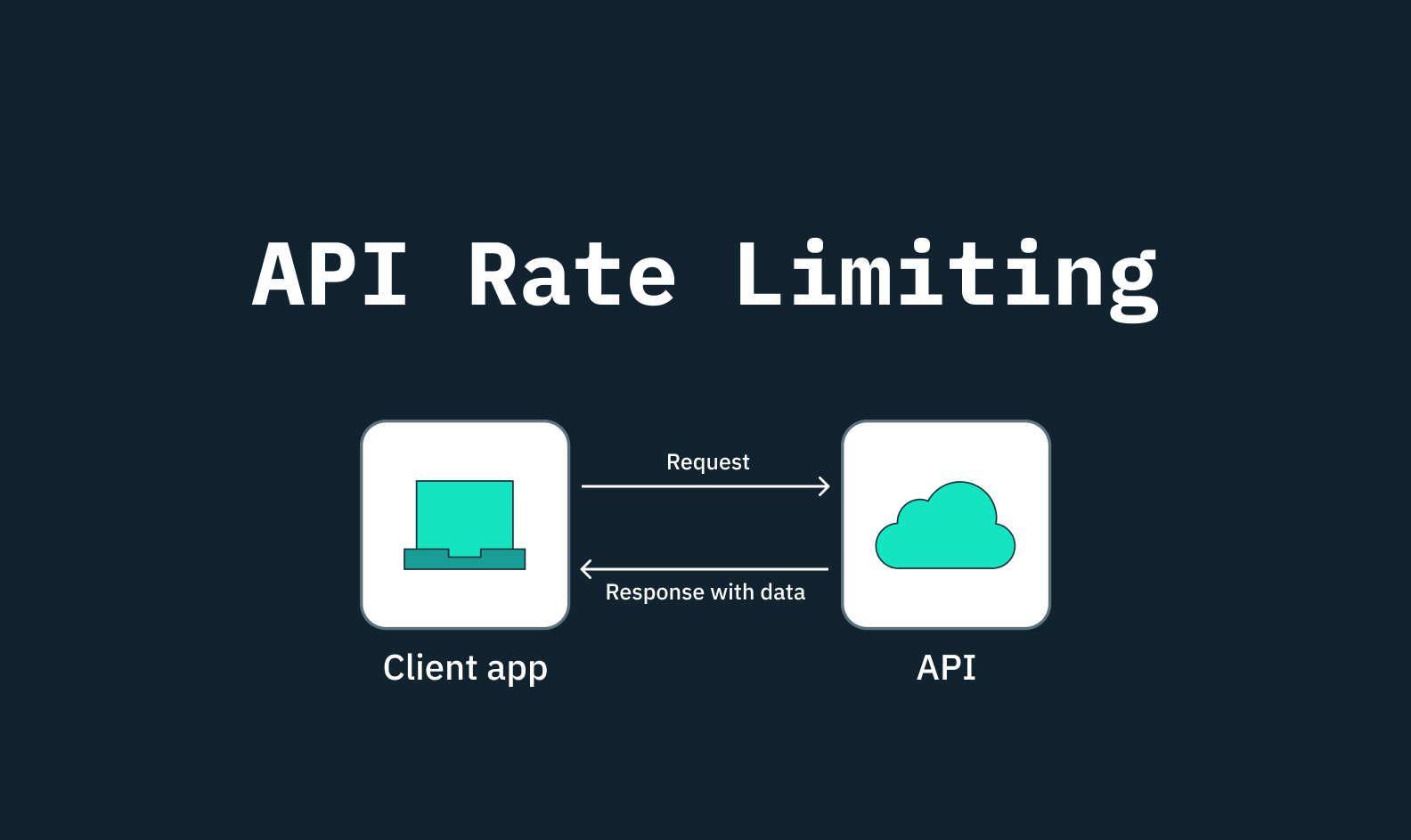

API rate limiting is a critical mechanism that governs the number of requests a client can make to an API within a specific time frame. For developers, it acts as a safeguard against certain types of attacks and excessive use of resources, preventing potential server overloads that can lead to degraded performance or downtime.

This article will walk you through what API rate limiting is, its importance in protecting against attacks, and some best practices to effectively implement rate limiting strategies.

Understanding API rate limiting

API rate limiting is a set of rules or logic that limits how often a user or application can interact with an API. This practice serves multiple purposes, including preventing server overload, safeguarding the API, ensuring stability, security, and fairness, and ultimately improving user experience. API rate limits are typically expressed as the maximum number of requests allowed within a defined time frame.

Rate limiting supports fair usage by preventing resource monopolization and giving all users equal access. When limits are enforced, no single user or application can consume all the resources, which keeps the API service balanced and efficient for everyone.

Why does API rate limiting exist?

API rate limiting as a concept did not have a single inventor but rather evolved over time as the need for more reliable and scalable web services grew. Rate limiting techniques began to be more formally defined and implemented by major companies during the mid-2000s. One of the early adopters was Twitter, which introduced rate limiting to manage the massive influx of requests from third-party developers building on its API. Google and other tech giants soon followed, realizing that without such measures, their APIs could be overwhelmed, leading to service outages or degraded performance for all users.

Protecting against bot attacks

One of the most significant benefits of API rate limiting is its ability to serve as a frontline defense against various forms of abuse, particularly denial-of-service (DoS) and distributed denial-of-service (DDoS) attacks. In a DDoS attack, an attacker attempts to overwhelm a server with a massive volume of requests sent from a network of bots and machines, with the goal of exhausting the server's resources and rendering the service unavailable to legitimate users.

Beyond DDoS attacks, bad actors also use bots for other malicious activities, such as scraping data, attempting brute-force logins, or overusing limited resources. This makes bots a persistent threat to any API.

API rate limiting is a crucial protective measure that helps mitigate such attacks by controlling the volume of requests that can reach the server within a given time period.

Prevent free account abuse

Implementing rate limits is crucial to ensure that legitimate users can access the API without disruptions caused by abusive or excessive requests. A common example of abuse occurs with free-tier accounts, where users may create multiple accounts or use automated scripts to exceed the allotted limits, exploiting the system for unauthorized gains.

By preventing the misuse of API resources, rate limiting allows legitimate users to utilize the services without facing interruptions. This ensures a fair distribution of resources, preventing any single user from monopolizing the API.

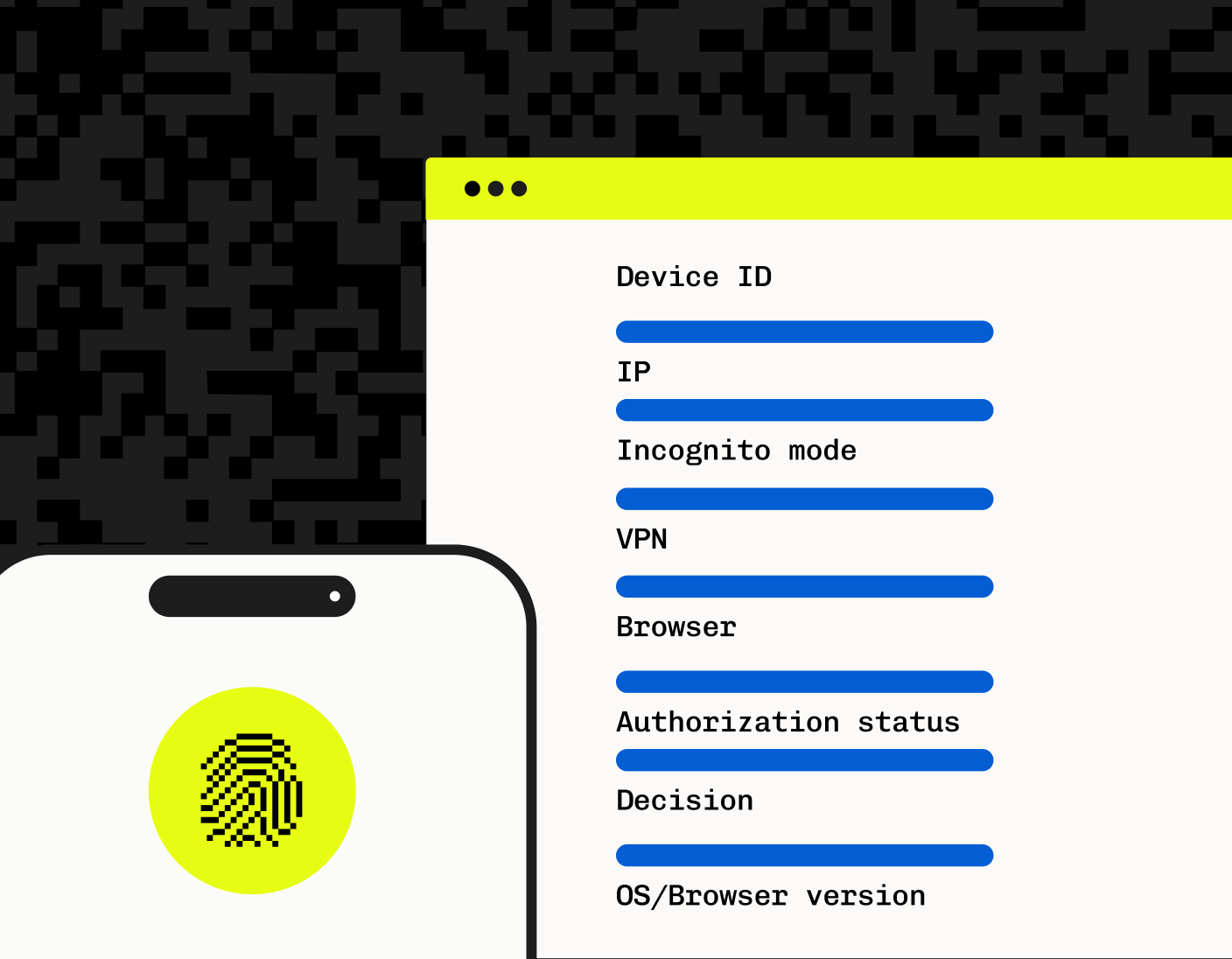

Device fingerprinting (DFP) technology further enhances rate limiting by uniquely identifying devices, which allows for intelligent and dynamic rate limiting strategies. By accurately identifying individual devices, DFP helps enforce fair usage and improve overall API performance and security. More on Stytch DFP below.

Stopping accidental misuse

Not all high-traffic scenarios are the result of malicious intent. Sometimes, well-meaning developers accidentally misuse an API by sending too many requests, perhaps because of a bug in their code (such as a retry loop with no delay) or a misunderstanding of the API's expected usage patterns. Rate limiting protects your infrastructure from these accidental bursts of traffic. By setting clear limits, you keep your API stable even when clients inadvertently exceed intended usage levels.
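On the client side, one way to avoid hammering an API by accident is to back off whenever the server signals that a limit has been hit. The Python sketch below assumes the API answers with HTTP 429 (Too Many Requests) and may include a `Retry-After` header given in seconds. The `call_with_backoff` helper, its `send` callback, and the `(status, headers)` response tuple are hypothetical names chosen for illustration; a real HTTP client would supply its own response type.

```python
import random
import time
from typing import Callable, Mapping, Tuple

# Hypothetical response shape for this sketch: (status_code, headers).
Response = Tuple[int, Mapping[str, str]]


def call_with_backoff(
    send: Callable[[], Response],
    max_retries: int = 5,
    base_delay: float = 0.5,
    max_delay: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
) -> Response:
    """Call `send`, retrying on HTTP 429 until it succeeds or retries run out."""
    attempt = 0
    while True:
        status, headers = send()
        if status != 429 or attempt >= max_retries:
            return status, headers
        retry_after = headers.get("Retry-After")
        if retry_after is not None and retry_after.isdigit():
            # The server said how long to wait. This sketch only handles the
            # delay-in-seconds form of Retry-After, not the HTTP-date form.
            delay = float(retry_after)
        else:
            # Exponential backoff with "full jitter": a random wait between 0
            # and base_delay * 2**attempt, capped at max_delay.
            delay = random.uniform(0, min(max_delay, base_delay * 2 ** attempt))
        sleep(delay)
        attempt += 1


# Usage with a fake server that rate limits twice before succeeding.
responses = iter([(429, {"Retry-After": "2"}), (429, {}), (200, {})])
waits = []
status, _ = call_with_backoff(lambda: next(responses), sleep=waits.append)
print(status, waits[0])  # 200 2.0
```

When the server gives no `Retry-After` hint, the random jitter spreads retries out so that many clients recovering at the same time do not all hit the API in the same instant.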

Improving API performance

Rate limiting is essential for balancing customer access and server stability. Regulating incoming requests keeps the system responsive, keeps network traffic manageable, and improves customer satisfaction. Think of it like traffic control on a highway during rush hour: without it, traffic would come to a standstill.

Managing the flow of API requests prevents slowdowns and ensures that high request volumes do not overwhelm the system. The result is a more resource-efficient API service, which benefits both API providers and users.

Rate limiting in practice

Real-world examples of how major platforms implement API rate limiting offer valuable insight into best practices and common rate limiting challenges. We’ll look at GitHub, LinkedIn, and Google Maps, each of which takes its own approach to managing API traffic.

GitHub

GitHub’s API rate limits allow up to 5,000 requests per hour for each user access token or OAuth-authorized application. Users can check their current rate limit status by sending a GET request to the /rate_limit endpoint. The response reports the limit, the number of requests used, the number remaining, and the reset time, grouped by resource category (for example, core, search, and graphql).
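As a rough illustration, the Python sketch below requests the /rate_limit endpoint with the standard library and prints one line per resource category. It assumes the documented response shape: a `resources` object whose entries carry `limit`, `used`, `remaining`, and a Unix-timestamp `reset`. The helper names are hypothetical, and the sample payload uses made-up numbers rather than real GitHub output.

```python
import json
import urllib.request
from datetime import datetime, timezone
from typing import Any, Dict, List, Optional

RATE_LIMIT_URL = "https://api.github.com/rate_limit"


def fetch_rate_limit(token: Optional[str] = None) -> Dict[str, Any]:
    """GET /rate_limit and return the parsed JSON body (needs network access)."""
    headers = {"Accept": "application/vnd.github+json"}
    if token:
        headers["Authorization"] = f"Bearer {token}"
    request = urllib.request.Request(RATE_LIMIT_URL, headers=headers)
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.load(response)


def summarize(payload: Dict[str, Any]) -> List[str]:
    """Return one human-readable line per resource category."""
    lines = []
    for name, info in sorted(payload["resources"].items()):
        # `reset` is a Unix timestamp (seconds) for when the quota refills.
        reset = datetime.fromtimestamp(info["reset"], tz=timezone.utc)
        lines.append(
            f"{name}: {info['used']}/{info['limit']} used, "
            f"{info['remaining']} remaining, resets at {reset:%H:%M:%S} UTC"
        )
    return lines


# Illustrative payload with made-up numbers, shaped like a /rate_limit response.
sample = {
    "resources": {
        "core": {"limit": 5000, "used": 1, "remaining": 4999, "reset": 1700000000},
        "search": {"limit": 30, "used": 12, "remaining": 18, "reset": 1700000000},
    }
}
for line in summarize(sample):
    print(line)

# With network access: print("\n".join(summarize(fetch_rate_limit(token="..."))))
```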

This approach ensures that developers can monitor their API usage and avoid disruptions by staying within their rate limits.

LinkedIn

LinkedIn employs a tiered rate limiting strategy that varies based on the type of API and the user’s access level. For example, LinkedIn’s API applies different rate limits to different endpoints, such as profile requests, company lookups, and messaging. The platform provides developers with detailed rate limit headers in API responses, which include information on current usage and the time until the rate limit resets. This approach helps LinkedIn balance access to vast amounts of professional data with the need to maintain system stability and prevent misuse. LinkedIn may also impose stricter rate limits or quotas on specific high-impact endpoints to protect user privacy and data integrity.

Google Maps

Google Maps uses a combination of rate limiting and quota management to ensure fair and efficient usage of its services. Developers can track their API usage through Google Cloud’s console, which provides detailed usage reports and alerts when usage approaches predefined limits. Rate limiting helps manage server load, particularly during peak times or when multiple applications access high-demand services like geocoding or directions.

Google Maps also offers flexible pricing plans that adjust rate limits based on the developer’s chosen tier, allowing for scalability and optimized performance according to the application's needs.

Best techniques for implementing rate limiting

Effective rate limiting requires a balance between enforcing limits to prevent abuse and ensuring that legitimate users can access your services without unnecessary disruption.

API throttling, or throttle control, temporarily restricts a client’s requests once it crosses a threshold, and it is typically more aggressive than rate limiting, which focuses on the overall request count over a time window. While throttling is effective in preventing immediate overloads, it can be too blunt an instrument for balancing customer access with server stability.

For a more balanced approach, rate limiting algorithms such as Token Bucket, Fixed Window Counter, and Sliding Window are often used to manage API request traffic in different ways, helping keep API services stable and responsive under varying load conditions.

Token Bucket Algorithm

The Token Bucket Algorithm controls API requests with tokens. Tokens are added to a bucket at a fixed rate, up to a maximum capacity, and each request consumes one token. If the bucket holds a token, the request is processed; otherwise, it is denied.

Because the bucket can fill up to its capacity, a client that has been idle can spend its saved-up tokens on a burst of requests, while the fixed refill rate ensures that the long-run average rate never exceeds the predefined limit. This approach is highly effective for managing variable traffic patterns, such as those you might see in e-commerce platforms during flash sales, social media sites experiencing viral content spikes, or streaming services during peak viewing times.
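A minimal, single-process sketch of the idea in Python follows. It assumes one in-memory bucket per client and a refill rate expressed in tokens per second; the class and parameter names are illustrative, and production systems usually keep this state in a shared store so that every server sees the same bucket. The clock is injectable so the behavior can be demonstrated without waiting.

```python
import time
from typing import Callable


class TokenBucket:
    """In-memory token bucket for a single client (sketch; not thread-safe)."""

    def __init__(self, capacity: int, refill_rate: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity        # max tokens held, i.e. the largest burst
        self.refill_rate = refill_rate  # tokens added per second
        self.clock = clock
        self.tokens = float(capacity)   # start full
        self.last_refill = clock()

    def _refill(self) -> None:
        now = self.clock()
        elapsed = now - self.last_refill
        # Credit tokens for the time that has passed, never beyond capacity.
        self.tokens = min(float(self.capacity), self.tokens + elapsed * self.refill_rate)
        self.last_refill = now

    def allow(self) -> bool:
        """Spend one token and return True, or return False if the bucket is empty."""
        self._refill()
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class FakeClock:
    """Manually advanced clock so the demo is deterministic."""

    def __init__(self) -> None:
        self.now = 0.0

    def __call__(self) -> float:
        return self.now


clock = FakeClock()
bucket = TokenBucket(capacity=5, refill_rate=1.0, clock=clock)
print([bucket.allow() for _ in range(6)])  # [True, True, True, True, True, False]
clock.now += 2.0  # two seconds pass: two tokens are refilled
print([bucket.allow() for _ in range(3)])  # [True, True, False]
```

`capacity` controls how large a burst is tolerated, while `refill_rate` sets the sustained rate, so the two can be tuned independently.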

Fixed Window Counter

The Fixed Window Counter method uses a counter to track requests within fixed time intervals, such as each calendar minute. Every request in the current window increments the counter; once the counter reaches the limit, additional requests are rejected until the window ends and the counter resets.

While simple to implement, the Fixed Window Counter method can allow spikes in traffic at window boundaries: a client can send its full quota at the very end of one window and another full quota at the start of the next, briefly pushing through up to twice the intended rate. Even so, it remains a popular choice for its straightforward implementation and its effectiveness in managing request rates within fixed intervals. It is commonly used where predictable, evenly distributed traffic is expected, such as scheduled data synchronizations, batch processing tasks, or APIs with steady, low-variability workloads.
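The following Python sketch shows a per-client fixed window counter and reproduces the boundary spike described above. It is an in-memory illustration under simplifying assumptions (a single process, a hypothetical `FixedWindowLimiter` class, and client IDs as plain strings), not a production implementation.

```python
import time
from typing import Callable, Dict, Tuple


class FixedWindowLimiter:
    """Per-client fixed window counter (in-memory sketch, single process)."""

    def __init__(self, limit: int, window_seconds: int,
                 clock: Callable[[], float] = time.time) -> None:
        self.limit = limit
        self.window_seconds = window_seconds
        self.clock = clock
        # client_id -> (index of the window being counted, requests seen in it)
        self.state: Dict[str, Tuple[int, int]] = {}

    def allow(self, client_id: str) -> bool:
        # All timestamps in the same window share one integer index.
        window = int(self.clock() // self.window_seconds)
        stored_window, count = self.state.get(client_id, (window, 0))
        if stored_window != window:
            count = 0  # a new window has started, so the counter resets
        if count >= self.limit:
            return False  # over the limit until the next window begins
        self.state[client_id] = (window, count + 1)
        return True


# Demo of the boundary problem: 5 requests per 60-second window.
now = [0.0]
limiter = FixedWindowLimiter(limit=5, window_seconds=60, clock=lambda: now[0])
now[0] = 59.0  # last second of the first window
before = sum(limiter.allow("client-a") for _ in range(6))
now[0] = 60.0  # first second of the next window
after = sum(limiter.allow("client-a") for _ in range(6))
print(before, after)  # 5 5: ten requests accepted within about one second
```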

Sliding Window Algorithm

The Sliding Window Algorithm evaluates request limits over a continuously shifting time frame rather than fixed intervals. Unlike the Fixed Window Counter, it considers the requests received during the window length immediately preceding each new request (for example, the last 60 seconds). A common variant, the sliding window counter, keeps a counter for the current window and weights the previous window’s count by how much of it still overlaps the sliding window, which smooths out traffic bursts at window boundaries.

This mechanism protects against rapid bursts that could overwhelm the system by managing the request pace, which suits APIs handling real-time data streams, online gaming platforms, or financial services where transactions occur frequently and unpredictably. Requests that exceed the limit are typically rejected, although some implementations queue them to maintain orderly processing. Because there is no single reset moment, this approach also avoids the stampede of requests that can hit a fixed window as soon as it resets, ensuring a more stable API service.
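Here is a Python sketch of the sliding window counter variant, in which the previous window’s count is weighted by how much of it still overlaps the sliding window. It tracks a single client in memory and rejects requests over the limit instead of queuing them; the class name and parameters are illustrative assumptions.

```python
import time
from typing import Callable


class SlidingWindowCounter:
    """Sliding window counter for a single client (in-memory sketch)."""

    def __init__(self, limit: int, window_seconds: float,
                 clock: Callable[[], float] = time.time) -> None:
        self.limit = limit
        self.window = window_seconds
        self.clock = clock
        self.current_index = int(clock() // window_seconds)  # current fixed window
        self.current_count = 0
        self.previous_count = 0

    def allow(self) -> bool:
        now = self.clock()
        index = int(now // self.window)
        if index != self.current_index:
            # Roll forward. The old count only matters if it belongs to the
            # window immediately before the new one; otherwise it is stale.
            adjacent = index == self.current_index + 1
            self.previous_count = self.current_count if adjacent else 0
            self.current_count = 0
            self.current_index = index
        # Share of the previous window still inside the sliding window.
        elapsed = now - index * self.window
        overlap = 1.0 - elapsed / self.window
        estimated = self.previous_count * overlap + self.current_count
        if estimated + 1 > self.limit:
            return False  # this sketch rejects; some systems queue instead
        self.current_count += 1
        return True


now = [0.0]
limiter = SlidingWindowCounter(limit=5, window_seconds=60, clock=lambda: now[0])
now[0] = 59.0
print(sum(limiter.allow() for _ in range(6)))  # 5
now[0] = 60.0
print(sum(limiter.allow() for _ in range(6)))  # 0
now[0] = 90.0
print(sum(limiter.allow() for _ in range(6)))  # 2
```

In this run, a full quota sent just before a window boundary still counts almost fully just after it, so the second burst is rejected; half a window later, roughly half of that earlier traffic has aged out and two more requests fit.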

Stytch Device Fingerprinting: Intelligent API rate limiting

Despite the many best practices available today, optimizing API rate limiting remains a challenge without the incorporation of highly granular device intelligence.

This is where Stytch Device Fingerprinting (DFP) comes into play. By leveraging Stytch DFP, you can confidently identify every device within your web traffic, allowing you to create smarter, more effective, and dynamic API rate limits. Stytch's fingerprinting technology offers industry-leading accuracy in bot detection, device intelligence, and fraud prevention.

```json
{
  "created_at": "2024-01-01T00:00:00Z",
  "expires_at": "2034-01-01T00:00:00Z",
  "external_metadata": {
    "external_id": "user-123",
    "organization_id": "organization-123",
    "user_action": "LOGIN"
  },
  "fingerprints": {
    "browser_fingerprint": "browser-fingerprint-0b535ab5-ecff-4bc9-b845-48bf90098945",
    "browser_id": "browser-id-99cffb93-6378-48a5-aa90-d680232a7979",
    "hardware_fingerprint": "hardware-fingerprint-4af7a05d-cf77-4ff7-834f-0622452bb092",
    "network_fingerprint": "network-fingerprint-b5060259-40e6-3f29-8215-45ae2da3caa1",
    "visitor_fingerprint": "visitor-fingerprint-6ecf5792-1157-41ad-9ad6-052d31160cee",
    "visitor_id": "visitor-6139cbcc-4dda-4b1f-b1c0-13c08ec64d72"
  },
  "status_code": 200,
  "telemetry_id": "026ac93b-8cdf-4fcb-bfa6-36a31cfecac1",
  "verdict": {
    "action": "ALLOW",
    "detected_device_type": "...",
    "is_authentic_device": true,
    "reasons": [...]
  }
}
```

Device Fingerprinting (DFP) technology empowers you to go beyond traditional rate limiting by tailoring limits to specific individual devices. This approach enhances both the performance and security of your API by allowing more nuanced control over traffic. Rather than applying blanket limits across all users, Stytch DFP enables you to adjust rate limits dynamically, based on the unique characteristics and patterns of each device like the hardware, browser, TLS and network.

Stytch’s Device Fingerprinting product offers the capability to precisely identify and track devices, ensuring that API resources are allocated fairly and abuse is minimized. By implementing DFP, you can protect your API from malicious activities while optimizing the user experience for legitimate users. This advanced level of device intelligence allows for the creation of adaptive rate limits that respond to real-time traffic conditions, providing a more robust and resilient API infrastructure.
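To make the idea concrete, the Python sketch below keys a simple fixed window counter on the device’s `visitor_id` rather than on an account or IP address, and applies a tighter limit when the verdict `action` is anything other than `ALLOW`. It assumes you already have a parsed fingerprint lookup result containing the `fingerprints.visitor_id` and `verdict.action` fields shown in the sample response; how that result is fetched from Stytch is outside the scope of this sketch, and the class name and limit values are hypothetical.

```python
import time
from typing import Any, Callable, Dict, Tuple


class DeviceRateLimiter:
    """Fixed window limiter keyed by device visitor_id (hypothetical sketch).

    `lookup` is an already-parsed fingerprint lookup result with
    lookup["fingerprints"]["visitor_id"] and lookup["verdict"]["action"].
    """

    def __init__(self, limit_allowed: int = 100, limit_other: int = 10,
                 window_seconds: int = 60,
                 clock: Callable[[], float] = time.time) -> None:
        self.limit_allowed = limit_allowed  # hypothetical limit for ALLOW verdicts
        self.limit_other = limit_other      # hypothetical stricter limit otherwise
        self.window_seconds = window_seconds
        self.clock = clock
        self.state: Dict[str, Tuple[int, int]] = {}  # visitor_id -> (window, count)

    def allow(self, lookup: Dict[str, Any]) -> bool:
        device = lookup["fingerprints"]["visitor_id"]
        trusted = lookup["verdict"]["action"] == "ALLOW"
        limit = self.limit_allowed if trusted else self.limit_other
        window = int(self.clock() // self.window_seconds)
        stored_window, count = self.state.get(device, (window, 0))
        if stored_window != window:
            count = 0  # new window for this device
        if count >= limit:
            return False
        self.state[device] = (window, count + 1)
        return True


# Two different accounts on the same device share one budget.
limiter = DeviceRateLimiter(limit_allowed=3, clock=lambda: 0.0)  # frozen clock for the demo
device = {"visitor_id": "visitor-6139cbcc-4dda-4b1f-b1c0-13c08ec64d72"}
account_a = {"external_metadata": {"external_id": "user-123"},
             "fingerprints": device, "verdict": {"action": "ALLOW"}}
account_b = {"external_metadata": {"external_id": "user-456"},
             "fingerprints": device, "verdict": {"action": "ALLOW"}}
print([limiter.allow(a) for a in (account_a, account_b, account_a, account_b)])
# [True, True, True, False]
```

Because both lookups share a `visitor_id`, the two accounts draw from the same budget, which is how device-level keys can help with the multi-account free-tier abuse described earlier.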


© 2025 Stytch. All rights reserved.