
Application security in the age of artificial intelligence: adapting to new challenges and opportunities

Auth & identity

Jun 25, 2024

Author: Reed McGinley-Stempel

Introduction

As AI continues to transform the digital landscape, organizations must remain vigilant and adapt their application security strategies to address new threats and opportunities. By understanding the implications of AI on identity and access management (IAM) and taking proactive measures, businesses can stay ahead of the curve and protect their digital assets in an increasingly interconnected world.

AI-fueled risks include more sophisticated bot traffic, more believable phishing attacks, and the rise of legitimate AI agents accessing customers’ online accounts on behalf of users. In effect, applications will need to gain confidence in their ability to do the following:

- Accurately discern human vs. bot traffic (made more difficult in a world where CAPTCHA and JA3 for TLS fingerprinting are effectively broken)

- Detect whether bot traffic is malicious or user-directed via an autonomous AI agent

In this article we’ll take a look at the main threats to cybersecurity posed by recent advances in AI, and provide concrete actions companies can take today to protect their applications and customers.

Table of contents

AI today - what’s so different now?

Threat I - Reverse engineering

Threat II - More sophisticated phishing attacks

Threat III - Outdated access management models

What you can do

AI today - what’s so different now?

Even if you’ve heard of or tried ChatGPT, it can be hard to sift through the media chatter to understand the real risks and real promises of this technology. At a CIAM company like Stytch, we’re far less concerned about a possible HAL or Blade Runner scenario, and much more concerned about what happens as the advances of AI become available to human beings already in the business of fraud and hacking.

While there’s quite a bit of nuance to how different kinds of AI tech are improving today, the crux of the issue from a cybersecurity perspective comes down to developments in sophistication and availability.

Sophistication

If you’ve caught the pope sporting some pretty pricey threads or gotten a strange voicemail from a close friend or relative, this larger point may be self-evident. The past decade has given AI scientists both the time and resources to sharpen their deep learning models, paired with vastly greater computing power. As a result, AI has gotten, well, impressively good. While there are of course areas where AI still has room to improve, the gap between what a computer and a human can generate has gotten much smaller.

Availability

AI technology is more widely and openly available than ever before. This is true on two fronts: there are more consumer AI products on the market (think ChatGPT) that anyone can try, and advances in the technology have led to a proliferation of startups and companies that rely on or advertise AI as a core differentiator. As of 2023, the number of AI companies in the world has doubled.

As with other technological developments, if you see legitimate industry leaders getting excited about a new development, you can be sure hackers and fraudsters are paying attention too.

Let’s look closer at what precisely the risks are, and why they’re so urgent to address.

Threat I - Reverse engineering

While a lot of the initial discussion around AI and cybersecurity focused on how hackers may use AI tools to better imitate human behavior, many are also interested in the sheer computing power exposed by legitimate AI applications that make their resources publicly available.

How this works

Historically, whenever a resource has been made available online, cybercriminals have sought ways to exploit it if the financial incentives were sufficient. Namely, hackers reverse engineer sites to piggyback on open resources, allowing them to automate and scale the reach of their attacks or fraudulent activity quickly and easily.

Past resources hackers have exploited include:

- Credit Card + Bank Credentials

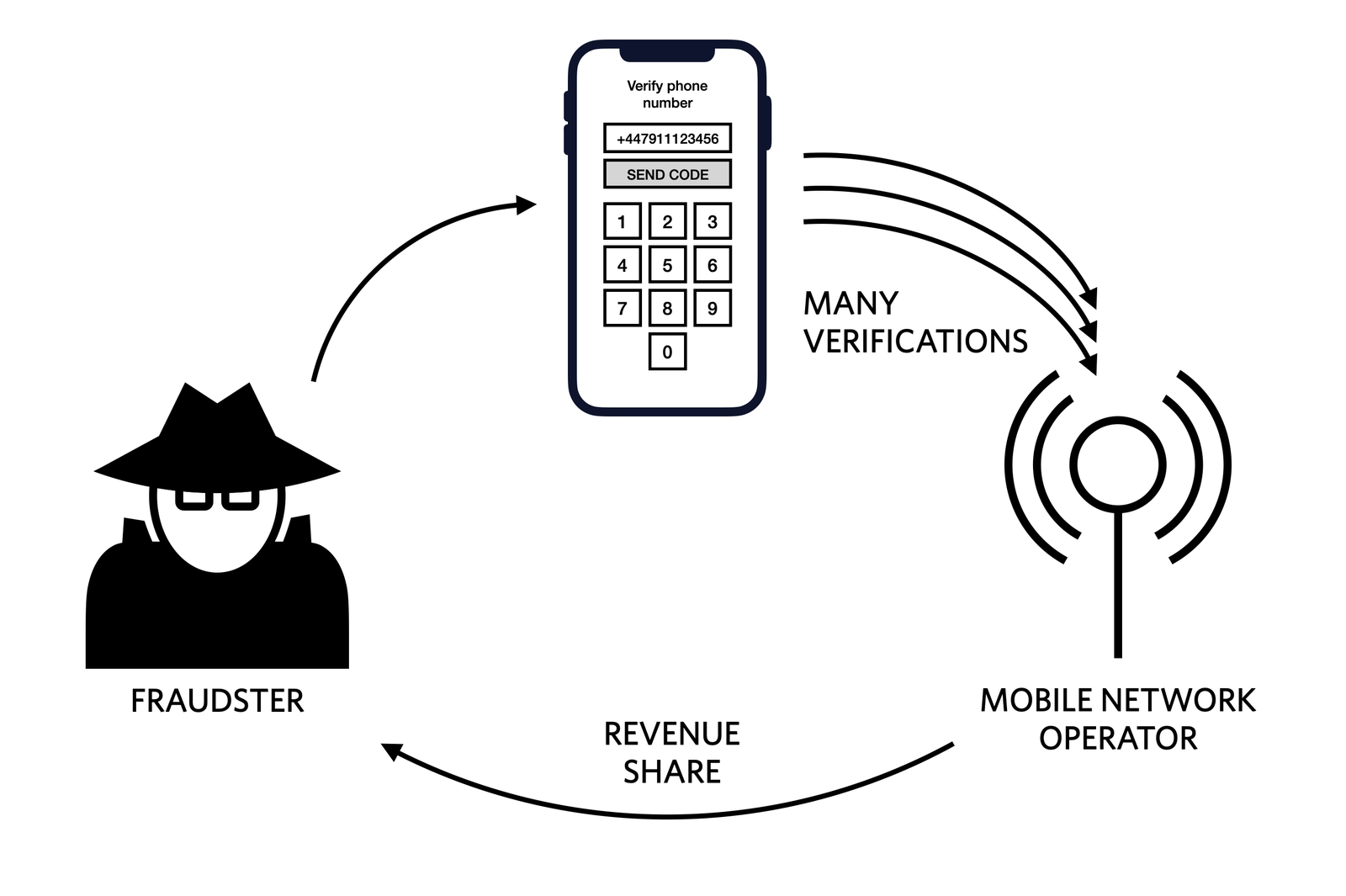

Stripe and Plaid offer API endpoints that validate credit card numbers and bank account details, which are then targeted by attackers to conduct account validation attacks so that the accounts can ultimately be taken over and drained of their funds. - SMS messaging

In Twilio’s case, cybercriminals engage in SMS toll fraud to send costly SMS traffic via partner mobile network operators (MNOs) internationally and split the earnings with them. Customers using Twilio’s services outside of North America are at particularly high risk of seeing an eye-popping bill when this takes place, and Twilio goes so far as providing docs on how customers should protect their apps. - Compute resources

Companies like Replit, AWS, Github, Railway, and Heroku deliberately expose valuable compute resources as part of their product offerings to developers, but this also makes them prime targets for attackers looking to steal free computational power. To quote the Railway CEO, it’s “frankly outrageous number of bad actors trying to spam free compute from our service.”

A Twilio diagram describing the fraud ring involved in SMS toll fraud (aka “sms pumping”).

Increased risk for AI

With the rise of open AI resources over the past couple of years, this last kind of compute resource attack has become especially profitable. In this model, bots piggyback on an application’s AI resources using a man-in-the-middle approach. Attackers then sell access to the API outputs to a third party while leveraging a legitimate application’s API pathways to avoid paying for the AI APIs.

There are four main aspects to AI specifically that make this compute resource exploitation so attractive:

- Cost of resource: AI APIs are costly, making them valuable targets for attackers seeking to exploit their output.

- Fungible output: The output from AI API requests is both fungible and accessible client-side, allowing attackers to use reverse-engineered AI endpoints to complete custom tasks and collect responses from the abused application. This differs from other APIs like SMS messaging or financial credential validation.

- Rolling availability: The latest large language model (LLM) APIs are released on a rolling basis, incentivizing reverse engineering for early access. For example, GPT-4 API access is limited, encouraging those without access to reverse engineer it via a company granted early access by OpenAI or other providers of similar LLM APIs.

- Higher monetization potential: AI endpoints have a higher total monetization potential for fraudsters compared to compute, financial validation APIs, or SMS messaging. They share a unique combination of valuable elements from each API attack surface: as fungible as compute, soon-to-be exposed by as many apps as SMS endpoints or payment APIs, and as defenseless as SMS endpoints (since AI APIs are integrated directly via the backend, lacking large company client-side protection against bots).

In other words, the same computing power that makes a search engine like You.com impressive or a productivity tool like Tome so helpful for a non-malicious user is equally available and perhaps more attractive for illicit purposes.

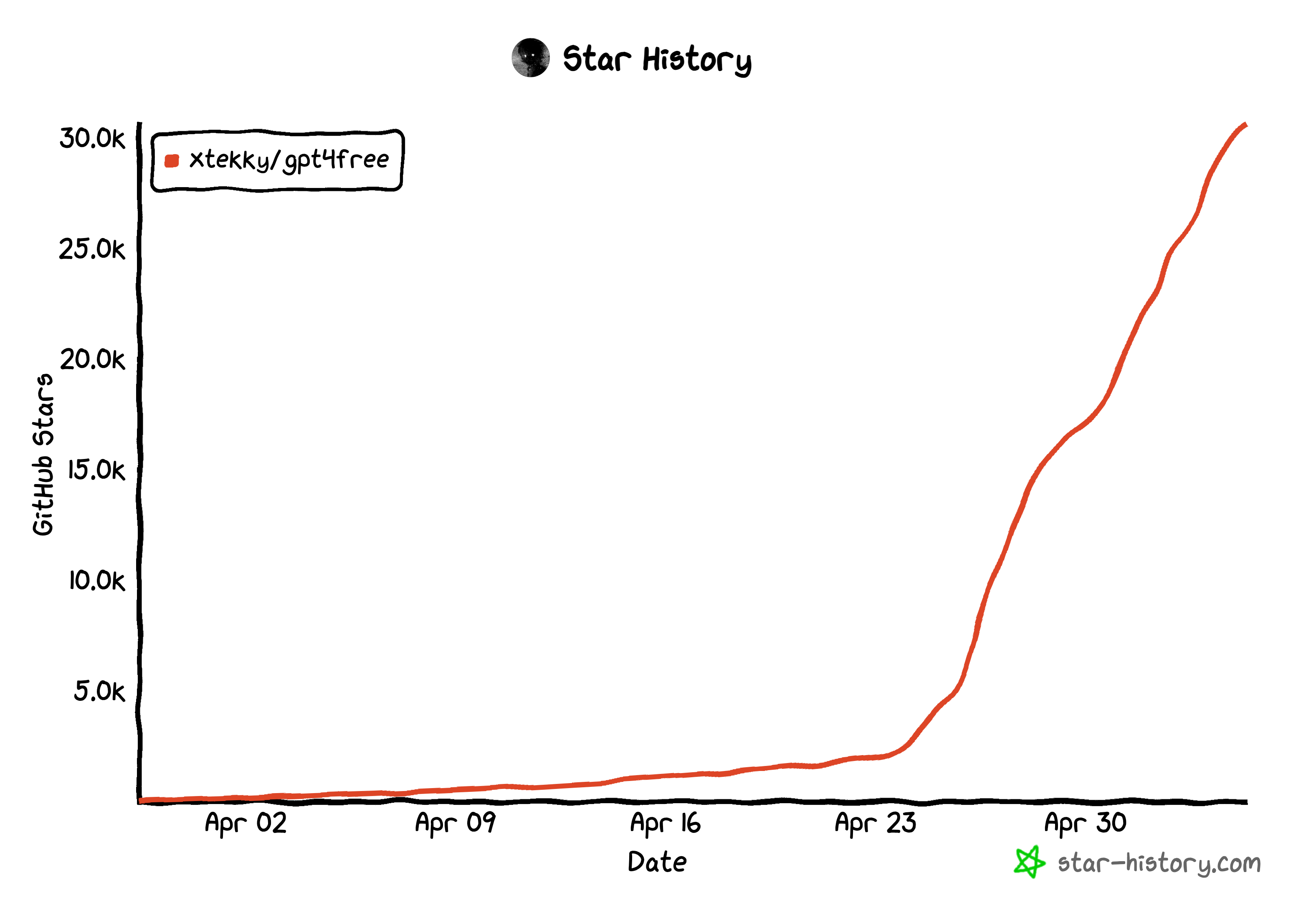

If you doubt the temptation of open AI resources, look at GPT4Free. Just last week, this GitHub project dedicated to reverse engineering sites to piggyback on GPT resources accumulated more than 15,000 stars in a few days. If over 15,000 developers starred this repository that quickly, it’s daunting to imagine how many fraudsters might attempt to use it over the coming months or year.

GPT4Free is an open-source project that reverse engineers LLM APIs in order to provide users with free access to AI capabilities by piggybacking on sites that pay for their usage.

Threat II - More sophisticated phishing attacks

If you find it uncanny how human-like AI can seem, either with audio, visuals, or text, you can be sure those imitative capabilities have also caught the eye of hackers. Namely, AI today accelerates three major “developments” along the phishing attack vector: believability, efficiency, and scale.

1. Complexity and believability

Aided by AI, phishing is already becoming much more complex and believable when it comes to the language and visual interfaces attackers generate to deceive users. Cybersecurity firm Darktrace saw the linguistic complexity of phishing emails jump nearly 20% in Q1 2023. Additionally, 37% of organizations reported deepfake voice fraud attacks and 29% reported deepfake videos being used for phishing.

As the cost of building a fake landing page and login portal plummets ever lower, security experts expect attackers’ sophistication in spinning up convincing sites to continue to rise.

2. Efficiency and affordability

Fraudsters are continuing to chase efficiency and scalability in their attacks. In the case of phishing, this means turning to programmatic ways to bypass MFA defenses.

In addition to generating phishing campaigns and sending them programmatically, attackers are also employing bots more frequently to automate the actual validation of phished 2FA codes (e.g. OTP, TOTP) in real-time. To achieve this, attackers operate low-volume bots that appear normal traffic-wise to applications (after all, there’s a real user on the other end) and submit the stolen codes to the phished application. Doing so eliminates the need for fraudsters to employ humans to perform this action and ensures stolen credentials aren’t stale.
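To see why a relayed code works at all, here is a minimal sketch of time-based one-time passwords as specified in RFC 6238, using only the Python standard library. The timeline (a victim typing a code at t=40 and a bot relaying it at t=55) and the `server_accepts` helper are illustrative assumptions, not any particular product's verification logic.

```python
import hashlib
import hmac
import struct


def totp(secret: bytes, unix_time: int, step: int = 30, digits: int = 6) -> str:
    """Compute an RFC 6238 TOTP code (HMAC-SHA1) for the given time."""
    counter = unix_time // step
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation, as in RFC 4226
    value = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(value % 10 ** digits).zfill(digits)


def server_accepts(secret: bytes, submitted: str, now: int, step: int = 30) -> bool:
    """Hypothetical server check: only the code and the clock matter, not the sender."""
    return hmac.compare_digest(totp(secret, now, step), submitted)


# Test secret from the RFC, not a real credential.
SECRET = b"12345678901234567890"

# The victim types the code into a phishing page at t=40 ...
victim_code = totp(SECRET, 40)
# ... and a bot relays it to the real application 15 seconds later.
relayed_in_time = server_accepts(SECRET, victim_code, now=55)   # same 30-second step
relayed_too_late = server_accepts(SECRET, victim_code, now=130)  # step has rolled over
```

Nothing in the check ties the code to the device or site where it was typed, so a bot that relays within the same time step is indistinguishable from the real user. Many real deployments also accept adjacent time steps to tolerate clock drift, which widens the relay window further.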


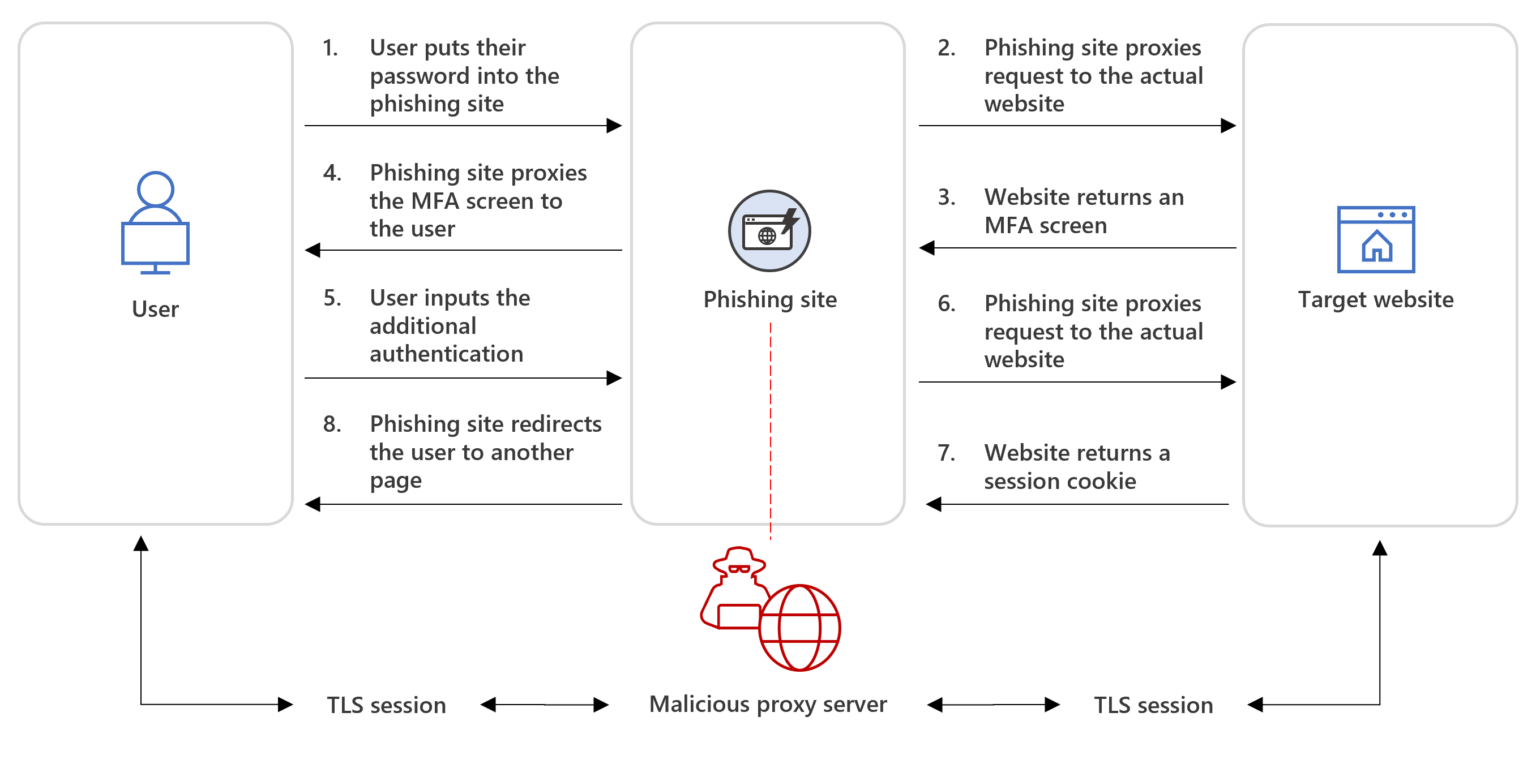

As an example, Microsoft Threat Intelligence researchers recently discovered a phishing kit that is behind more than 1 million malicious emails daily and can bypass multi-factor authentication (MFA) systems using time-based one-time passwords (TOTPs). The kit, which sells for $300 (standard) or $1,000 (VIP), includes advanced features for deploying phishing campaigns and evading anti-phishing defenses. The technique, known as Adversary in the Middle (AitM), places a phishing site between the targeted user and the site they are attempting to log in to, capturing TOTPs in real time. The phishing kit also uses features such as CAPTCHA and URL redirection to bypass automated defenses and block lists.

Diagram courtesy of Microsoft's Threat Intelligence team and their research on phishing kits

Security experts have long lauded defenses like OTP-based MFA and CAPTCHA as important prophylactics against phishing attacks, so the ability of fraudsters to get around these blockers shows that greater protection will soon be needed on a global scale.

3. Scale and ROI

As phishing attacks become both trivial to generate with AI and much more believable, this also changes the cost-benefit analysis behind how attackers choose who to target.

We often mock the errors found in phishing schemes because they seem obvious to the discerning user. However, these typos have traditionally been a feature (not a bug) for attackers – they’re intentional and designed to weed out more intelligent targets so that attackers are more likely to hear back from only the most gullible of victims.

This design choice derives from phishing’s historically manual, labor-intensive architecture: hackers have needed real people to continue the conversation with potential victims and convince them to forward their password or MFA passcode. With this very real labor constraint, attackers have been forced to find clever ways to increase their probability of success and to focus their efforts only on targets that offer both a high probability of success and a sufficient reward.

Unfortunately, conversational-level AI like ChatGPT changes this cost-benefit analysis drastically. Without human labor as a constraint, we can expect a sharp uptick in the number of phishing attempts and the sophistication of those conversations, as fraudsters may abandon the typo strategy and generally target a much wider audience with far more believable attacks.
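The shift can be made concrete with a back-of-the-envelope calculation. The sketch below computes the success rate at which engaging one more target breaks even; every dollar figure is a made-up assumption chosen for illustration, not measured data.

```python
def break_even_success_rate(cost_per_conversation: float, payout_per_success: float) -> float:
    """Minimum success probability at which engaging one more target pays off."""
    return cost_per_conversation / payout_per_success


# Hypothetical figures, for illustration only.
PAYOUT = 500.0  # assumed value of one compromised account

human_operated = break_even_success_rate(5.00, PAYOUT)  # a person handles each conversation
ai_operated = break_even_success_rate(0.05, PAYOUT)     # a language model handles it
```

Under these assumed numbers, a human-run operation needs roughly a 1% success rate per conversation to break even, while an AI-run one needs about 0.01%. Once the threshold drops that far, filtering for gullible targets stops being worth the lost reach.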

Threat III - Outdated access management models

The last major change we’re seeing from AI is the most nascent but also potentially the most fundamental shift for every application’s authentication strategies: the emergence of AI agents.

AI agents are designed to browse the web and perform tasks on behalf of users. In terms of benefits, these agents promise to eliminate tedious tasks in users’ personal and professional lives. In some ways, they’re like a more intelligent form of robotic process automation (RPA). But instead of requiring specific instructions, users can provide general directions, and the AI agent can problem-solve to fulfill the request.

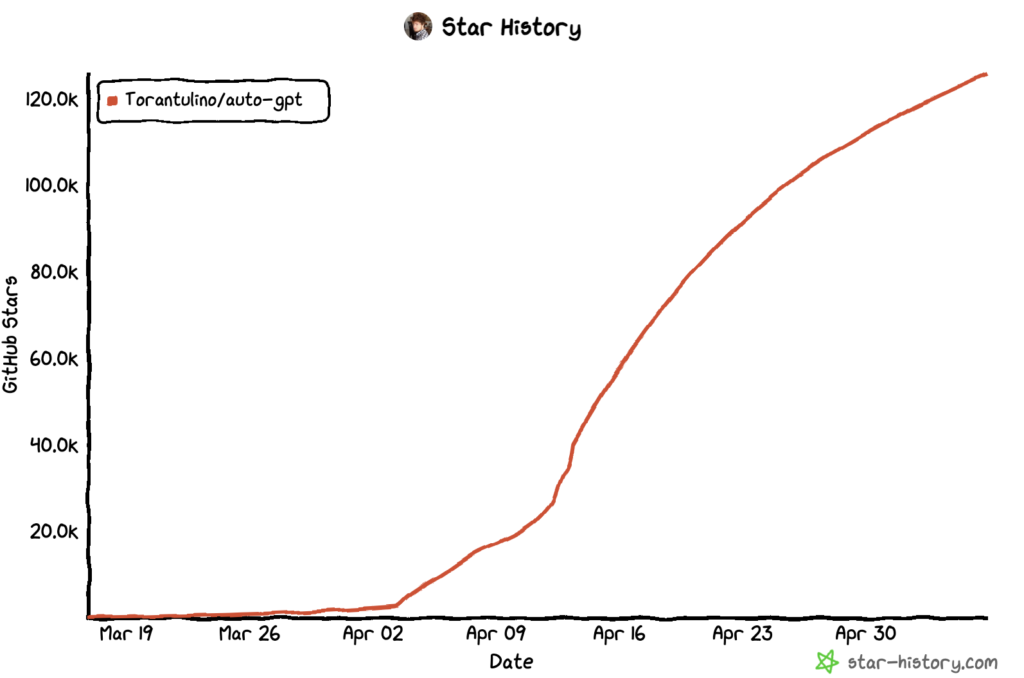

This not only increases efficiency for end users but also brings significant advantages for businesses. AI agents can become adept at navigating unfamiliar user interfaces, reduce the customer support load for apps, and as a result, increase the likelihood of consumer or organizational adoption because users don’t have to learn the nooks and crannies of every app’s UI that they want to use. It’s no wonder agents like AutoGPT have been growing in popularity over the past couple of months.

The AutoGPT project is one of the fastest GitHub projects to reach 100k+ stars in history, and it has the potential to dramatically change how users and businesses interact with applications.

At the same time, AI agents also introduce an entirely new set of risks for applications to manage. Most consumers and businesses may not want these agents to have unrestricted access to all their application settings due to their autonomous nature. An AI agent could mistakenly delete crucial profile information, sign up for a more expensive subscription than intended, expose sensitive account access data, or take any other number of self-directed actions that harm the user, the application, or both parties. As of today, companies’ authentication and authorization models still have to catch up to these new kinds of divisions of labor.

ChatGPT now has a version with browsing capabilities in alpha access

What you can do

With the pace of AI advancement showing no signs of slowing down, it may feel daunting to think about the implications for your individual product.

Fortunately, there are already tools out there that can help combat fraudulent use of AI – devs just have to know what capabilities to prioritize. To prepare their application’s security model in the age of artificial intelligence, engineers should consider the following:

- Invest in advanced fraud and bot mitigation tools that can detect reverse engineering to protect AI APIs from unauthorized access and abuse.

- Prioritize phishing-resistant authentication methods, such as biometrics or YubiKeys, to safeguard against more sophisticated and believable phishing attacks.

- Modernize their authorization logic and data models to accommodate the rise of AI agents, differentiating between actions that require human intervention and those that can be executed autonomously.

1. Invest in advanced fraud and bot mitigation

To mitigate these risks, organizations should invest in advanced fraud and bot mitigation tools to prevent reverse engineering of AI APIs. Common anti-bot methods like CAPTCHA, rate limiting, and JA3 (a form of TLS fingerprinting) can be valuable in defeating run-of-the-mill bots that have little incentive to invest in evading these protections. But against more sophisticated bot problems like those facing AI endpoints, attackers with even moderate sophistication can defeat each of these methods:

- Defeating CAPTCHA: Attackers use services like https://anti-captcha.com/ to cheaply outsource the solving of CAPTCHA challenges via a mechanical turk style system.

- Defeating rate limits: Attackers route traffic through residential IPs via services like Bright Data, churn their user agents, and leverage less detectable headless browsing techniques.

- Defeating TLS fingerprinting (JA3): Chrome’s recent changes, which randomize the order of TLS extensions in the ClientHello so that the JA3 hash varies between connections, make this significantly less valuable for defending your app against bots.
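The rate-limit bypass in the list above is easy to reproduce in miniature. The following sketch uses a simple sliding-window limiter and a hypothetical bot that sends 100 requests in 10 seconds, each from a different IP address. The `fingerprint` field stands in for a device identifier that is assumed to be available; how such an identifier is produced is outside the scope of this example.

```python
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most `limit` requests per `window` seconds for each key."""

    def __init__(self, limit: int, window: float) -> None:
        self.limit = limit
        self.window = window
        self._hits = defaultdict(deque)  # key -> timestamps of allowed requests

    def allow(self, key: str, now: float) -> bool:
        hits = self._hits[key]
        while hits and now - hits[0] >= self.window:
            hits.popleft()  # drop requests that have aged out of the window
        if len(hits) >= self.limit:
            return False
        hits.append(now)
        return True


def count_allowed(limiter: SlidingWindowLimiter, requests: list, key_field: str) -> int:
    """Count how many requests pass when the limiter is keyed on `key_field`."""
    return sum(limiter.allow(req[key_field], req["t"]) for req in requests)


# Hypothetical bot traffic: one machine, a fresh documentation-range IP per request.
bot_requests = [
    {"t": i * 0.1, "ip": f"203.0.113.{i}", "fingerprint": "device-abc"}
    for i in range(100)
]

by_ip = count_allowed(SlidingWindowLimiter(limit=5, window=60), bot_requests, "ip")
by_fingerprint = count_allowed(
    SlidingWindowLimiter(limit=5, window=60), bot_requests, "fingerprint"
)
```

Keyed on IP address, the limiter lets all 100 requests through because no single address ever reaches the limit. Keyed on a stable device identifier, it stops the same traffic after 5 requests. The limiter is only as good as the key it counts against, which is why the quality of the fingerprint matters so much.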

Protecting against reverse engineering requires more sophisticated tooling. More advanced options include custom CAPTCHAs via Arkose or JavaScript fingerprinting tools like FingerprintJS.

There are of course many device fingerprinting solutions to choose from, but there are a few differentiators we’ve invested in at Stytch that are particularly effective at mitigating AI-driven attacks:

- Our DF solution combines hardware, network, and browser-level fingerprinting with the massive volumes of authentication data we have visibility into as a CIAM platform.

- While other fingerprinting tools use native JavaScript, which is itself more susceptible to being reverse engineered and defeated by those abusing your site, we use WebAssembly for a best-in-class approach to prevent reverse engineering.

- We use full at-rest encryption internally with on-the-fly runtime decryption on payload execution for all critical collection logic. We have also built a significant amount of tamper-resistant and tamper-evident features into our solution via cryptographic signing, which ensures that if our payload internals are tampered with, the results won’t be accepted by Stytch servers for access.

- Our tool was built from the ground up by professional reverse-engineers who used to build and sell commercial reverse-engineering tools in their previous roles. They’ve designed our product to be painful and vexing in ways they know from prior experience would have made them throw their hands up had they encountered it in a reverse engineering attempt.
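The tamper-evidence idea mentioned in the list above can be illustrated generically. This is not Stytch's implementation: it is a minimal sketch that swaps in an HMAC over the collected report, with a hypothetical per-session key and made-up report fields, to show how a server can reject results that were altered after collection.

```python
import hashlib
import hmac
import json

# Assumption: a per-session key known to both the collection payload and the server.
SESSION_KEY = b"hypothetical-per-session-key"


def sign_report(report: dict, key: bytes) -> dict:
    """Serialize the report deterministically and attach an HMAC-SHA256 tag."""
    body = json.dumps(report, sort_keys=True, separators=(",", ":"))
    tag = hmac.new(key, body.encode(), hashlib.sha256).hexdigest()
    return {"body": body, "tag": tag}


def verify_report(envelope: dict, key: bytes) -> bool:
    """Server side: recompute the tag and compare in constant time."""
    expected = hmac.new(key, envelope["body"].encode(), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, envelope["tag"])


# The payload honestly reports a headless browser ...
envelope = sign_report({"headless": True, "screen": "1920x1080"}, SESSION_KEY)
# ... and the attacker edits the body in transit to look like a normal browser.
tampered = dict(envelope, body=envelope["body"].replace("true", "false"))

untouched_ok = verify_report(envelope, SESSION_KEY)
tampered_ok = verify_report(tampered, SESSION_KEY)
```

The untouched report verifies and the edited one does not. A scheme like this is only as strong as the secrecy of the key on the client, which is where measures such as encrypting and obfuscating the collection logic come in.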

Whether you invest in Stytch’s product or decide on a competitor, the most important takeaway here is that organizations that prioritize detecting and stopping reverse engineering will be better equipped to protect their AI-powered applications against malicious actors.

2. Prioritize phishing-resistant authentication methods

The best way to counter these types of account takeover attacks is to invest in phishing-resistant MFA. Unlike one-time and time-based passcodes, phishing-resistant methods like WebAuthn can’t be accidentally forwarded along to an attacker, making them a valuable security measure in the face of AI-generated phishing emails and websites. WebAuthn includes two distinct categories of device-based authentication factors that help confirm a user’s identity:

- Device-based biometrics, such as Touch ID and Face ID, belong to the “something-you-are” category.

- External hardware keys, like YubiKey, belong to the “something-you-have” category. These authentication factors involve a physical security key that is connected to the user’s device for verification purposes.
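Part of what makes WebAuthn phishing-resistant is that the browser, not the page, records the origin that requested the login, and the server checks it. The sketch below covers only that slice of assertion verification (the `type`, `challenge`, and `origin` fields of `clientDataJSON`). The origins and the `browser_client_data` stand-in are hypothetical, and a real verifier must also validate the authenticator data and signature, typically through a maintained WebAuthn library.

```python
import base64
import hmac
import json

EXPECTED_ORIGIN = "https://app.example.com"  # hypothetical relying-party origin


def b64url_decode(data: str) -> bytes:
    """Decode unpadded base64url, the encoding WebAuthn uses for the challenge."""
    return base64.urlsafe_b64decode(data + "=" * (-len(data) % 4))


def check_client_data(client_data_json: bytes, expected_challenge: bytes) -> bool:
    """Partial WebAuthn assertion check: type, challenge, and origin only."""
    data = json.loads(client_data_json)
    return (
        data.get("type") == "webauthn.get"
        and hmac.compare_digest(b64url_decode(data.get("challenge", "")), expected_challenge)
        and data.get("origin") == EXPECTED_ORIGIN
    )


def browser_client_data(origin: str, challenge: bytes) -> bytes:
    """Stand-in for the browser, which fills in `origin` itself; the page cannot choose it."""
    encoded = base64.urlsafe_b64encode(challenge).rstrip(b"=").decode()
    return json.dumps({"type": "webauthn.get", "challenge": encoded, "origin": origin}).encode()


# Fixed value for the demo; a real server issues a fresh random challenge per login.
challenge = b"server-issued-random-challenge"

legit = check_client_data(browser_client_data("https://app.example.com", challenge), challenge)
phished = check_client_data(
    browser_client_data("https://app-example.login-help.net", challenge), challenge
)
```

A login relayed through a look-alike domain carries that domain's origin and fails the check. Because the authenticator's signature covers a hash of this data, a proxy can't simply rewrite the origin on the way through, which is the property that OTP and TOTP codes lack.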

With the increasing sophistication of phishing attacks, organizations should prioritize integrating phishing-resistant authentication methods. And if your organization isn’t yet ready to adopt these more advanced MFA methods, the best defense is a fine-grained bot detection layer. In the above diagram, steps 2 and 6 require fraudsters to proxy user inputs from a fake application to the phished application and can be thwarted by investing in a fingerprinting approach (like Stytch’s) that can detect proxied traffic that otherwise appears to be normal.

3. Modernize authorization logic and data models

To address these challenges, companies must adapt their permission and access control models to accommodate these well-intentioned bots. It’s crucial to establish fine-grained role-based access controls (RBAC) to mitigate security concerns arising from autonomous AI conducting tasks unsupervised on behalf of real users. Businesses may need to require human-in-the-loop MFA for certain actions initiated by AI agents or restrict some access entirely to strike the right balance between convenience and security.
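As a rough illustration of what such a model could look like, the sketch below separates human and agent actors and maps each agent-initiated action to one of three outcomes: allow, require a human to complete MFA, or deny. The action names and the policy table are hypothetical; a real system would derive them from its own roles and resources.

```python
from dataclasses import dataclass
from enum import Enum


class Actor(Enum):
    HUMAN = "human"
    AGENT = "agent"


class Decision(Enum):
    ALLOW = "allow"
    REQUIRE_HUMAN_MFA = "require_human_mfa"
    DENY = "deny"


# Hypothetical policy: what happens when an AI agent initiates each action.
AGENT_POLICY = {
    "read_profile": Decision.ALLOW,
    "update_display_name": Decision.ALLOW,
    "change_subscription": Decision.REQUIRE_HUMAN_MFA,
    "delete_profile": Decision.REQUIRE_HUMAN_MFA,
    "export_credentials": Decision.DENY,
}


@dataclass(frozen=True)
class Request:
    actor: Actor
    action: str


def authorize(request: Request) -> Decision:
    """Decide how to handle a request based on who, or what, initiated it."""
    if request.actor is Actor.HUMAN:
        # Assumes the human's ordinary role checks are enforced elsewhere.
        return Decision.ALLOW
    # Agents fail closed: any action missing from the policy is denied.
    return AGENT_POLICY.get(request.action, Decision.DENY)


agent_reads = authorize(Request(Actor.AGENT, "read_profile"))
agent_upgrades = authorize(Request(Actor.AGENT, "change_subscription"))
agent_unknown = authorize(Request(Actor.AGENT, "transfer_ownership"))
```

Denying unlisted actions by default means a newly shipped feature is not exposed to agents until someone decides it should be. That requires the application to know reliably whether a session belongs to a human or an agent, which ties back to the bot detection discussed earlier.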

Want to chat about how AI might affect your customers?

Whether you’re building an AI company or reevaluating AI-driven attack vectors that might affect your application, we’d love to chat. Let’s talk about how Stytch can secure your product.


© 2025 Stytch. All rights reserved.