
Beating back bot fraud in 2024

Auth & identity

Dec 14, 2023

Author: Alex Lawrence

The rise of bot fraud has been astounding in the past few years and is estimated to cost businesses billions of dollars annually. According to Sift’s Q2 2023 Digital Trust & Safety Index, account takeover (ATO) bot attacks spiked by a staggering 427% in Q1 2023, compared to all of 2022.

Bot fraud involves the use of automated software programs, known as bots, to conduct illicit activities online. These activities range from unauthorized access to e-commerce and bank accounts, to the inflation or falsification of online reviews, to increasingly sophisticated financial fraud.

In this article, we’ll delve into the different types of bot fraud and the measures that can be taken to combat them.

Understanding bot fraud

Bot fraud is characterized by the deployment of bots to mimic human behavior and interact with our digital systems, often with malicious intent. These bots can automate tasks at superhuman scale and speed, making them a potent tool for fraudsters.

Bot fraud can take many forms. Below are 10 of the many suits commonly worn by bots:

- Click fraud: In this type of fraud, bots simulate clicks on online advertisements, artificially inflating the number of clicks and leading to a waste of advertising budgets.

- Account takeover (ATO): Bots try to access user accounts, often bank accounts, by guessing or stealing login credentials, then use those credentials to carry out various fraudulent activities.

- Spam messaging: Bots can be used to send spam emails, messages, and comments on social media platforms, often promoting illegal products or services.

- Impersonation: Some bots are designed to impersonate humans on online platforms, creating fake profiles and generating fake engagement such as likes and follows.

- Inventory hoarding: In this type of fraud, bots buy up large quantities of limited-edition products or concert tickets, which are then resold at inflated prices.

- Web scraping: Bots can be programmed to automatically gather data from websites, often for competitive intelligence or price comparison purposes.

- Fake reviews and ratings: Bots can manipulate online reviews and ratings, creating false positive or negative feedback to influence consumer decisions.

- Credit card and bank credential fraud: Bots attempt ATO attacks to gain control over user accounts for financial exploitation. Services like Stripe and Plaid, which offer API endpoints for credit card and bank account verification, are frequent targets of these types of attacks.

- SMS toll fraud: Bad bots trigger large volumes of costly SMS messages, such as one-time passcodes, routed through international mobile operators, leaving the business that sends them to foot the bill.

- Compute resource fraud: Platforms offering compute resources, such as Replit, AWS, GitHub, Railway, and Heroku, attract bad bots seeking free computational power. The open availability of these valuable resources makes these platforms prime targets.

The evolution of bot fraud

In their early days, bots were simple scripts used for repetitive tasks like data scraping or auto-filling online forms. Over time, bots have advanced alongside the rest of our technology and now wield sophisticated tools capable of executing complex fraud attacks. ELIZA sure has come a long way.

Modern-day bots can easily bypass traditional security measures, forcing their human counterparts to scramble, invent, and scale increasingly complex, intricate prevention measures. The rise of artificial intelligence and machine learning has only compounded this challenge. Bots now learn from user behavior and adapt their tactics accordingly, making it harder to detect and stop them. As a result, bot fraud has become a lucrative business for cybercriminals.

To leapfrog the next bot-based scheme, start with a quick study of known bot attack methods; it can help prepare you for battle with these persistent digital foes.

Malicious bots: methods behind the mayhem

Malicious bots have become a prevalent tool for cybercriminals and can be used to commit identity theft, launch DDoS attacks, steal sensitive data, and spread malware. While we can learn from the past, there’s always a new bot attack method brewing — but today’s prevalent methods give some clues as to where an organization should focus its prevention efforts.

Bot attacks on user accounts

Existing accounts, particularly those linked to financial institutions, are prime targets for bot attacks. These attacks represent a significant portion of online fraud and identity theft incidents. Malicious bots use various methods to access user accounts, including credential stuffing, brute force attacks, and phishing.

- Credential stuffing: This popular attack takes username and password pairs leaked in data breaches and replays them against login pages on other websites, exploiting password reuse. Bots essentially ‘stuff’ stolen credentials into login attempts, one after another, until some succeed.

- Brute force attacks: This is carried out by repeatedly trying different combinations of usernames and passwords until the correct combination is discovered.

- Phishing attacks: These common attacks trick users into providing their login credentials through fake websites or malicious links.
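Brute force and credential stuffing leave different footprints in login logs: brute force piles many failures onto one account, while credential stuffing spreads failures across many usernames from the same source. A minimal sketch of a sliding-window monitor that tells the two apart, assuming you can observe each failed login's username and source IP. The class name and thresholds are hypothetical and would need tuning against real traffic.

```python
import time
from collections import defaultdict, deque


class LoginFailureMonitor:
    """Tracks failed logins in a sliding time window (hypothetical thresholds)."""

    def __init__(self, window_seconds=300, max_failures_per_account=5,
                 max_usernames_per_ip=20):
        self.window = window_seconds
        self.max_failures_per_account = max_failures_per_account
        self.max_usernames_per_ip = max_usernames_per_ip
        self.failures_by_account = defaultdict(deque)  # username -> timestamps
        self.failures_by_ip = defaultdict(deque)       # ip -> (timestamp, username)

    def _prune(self, events, now, key=lambda e: e):
        # Drop events older than the window; deques are in time order.
        while events and key(events[0]) < now - self.window:
            events.popleft()

    def record_failure(self, username, ip, now=None):
        now = time.time() if now is None else now
        self.failures_by_account[username].append(now)
        self.failures_by_ip[ip].append((now, username))

    def assess(self, username, ip, now=None):
        """Return reasons to block or challenge this attempt (empty list = none)."""
        now = time.time() if now is None else now
        account_events = self.failures_by_account[username]
        ip_events = self.failures_by_ip[ip]
        self._prune(account_events, now)
        self._prune(ip_events, now, key=lambda e: e[0])

        reasons = []
        # Brute force: many failed guesses against a single account.
        if len(account_events) >= self.max_failures_per_account:
            reasons.append("brute_force")
        # Credential stuffing: one source cycling through many different usernames.
        if len({user for _, user in ip_events}) >= self.max_usernames_per_ip:
            reasons.append("credential_stuffing")
        return reasons
```

For example, after 25 failures for 25 different usernames from one IP, `assess("user0@example.com", "203.0.113.7")` reports `credential_stuffing` but not `brute_force`, since that particular account failed only once. Real attackers rotate IPs through proxies, so per-IP counts are one signal among several rather than a complete defense.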

Techniques of automated bot attacks

Automated bots can do the work of thousands of humans in one sweep, achieving an incredible scale of impact and finding ‘weak links’ in traditional authentication or security gateways.

One example of an automated bot attack is the use of “headless” browsers. These are browser-like applications that do not have a user interface but can perform web-based tasks automatically. Attackers use headless browsers to carry out malicious activities, such as account takeover attempts or click fraud, without being detected by traditional security measures.
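To illustrate why headless browsers slip past traditional checks, here is a sketch of the kind of naive signals a server might look at. It assumes a `HeadlessChrome` token in the User-Agent (which default-configured headless Chrome sends), a missing `Accept-Language` header, and a hypothetical `webdriver` field that your own client-side script copies from `navigator.webdriver`, which the W3C WebDriver spec sets to true under automation. Every one of these can be spoofed by a determined attacker, which is exactly the article's point.

```python
def headless_signals(headers, client_signals=None):
    """Collect weak hints that a request may come from an automated headless browser."""
    client_signals = client_signals or {}
    # HTTP header names are case-insensitive, so normalize them first.
    h = {k.lower(): v for k, v in headers.items()}
    signals = []

    ua = h.get("user-agent", "")
    if not ua:
        signals.append("missing_user_agent")
    elif "HeadlessChrome" in ua:
        signals.append("headless_user_agent")

    # Real browsers almost always send an Accept-Language header.
    if "accept-language" not in h:
        signals.append("missing_accept_language")

    # Hypothetical field reported by your own client script from navigator.webdriver.
    if client_signals.get("webdriver") is True:
        signals.append("webdriver_flag")
    return signals
```

A bot that sets a normal User-Agent, adds the usual headers, and patches `navigator.webdriver` produces an empty list here, which is why such checks work best as inputs to a broader risk score.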

Another technique used in automated bot attacks is API abuse. APIs (application programming interfaces) allow different software systems to communicate with each other. Attackers can manipulate APIs to gain unauthorized access to user accounts or steal sensitive information.
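A common first line of defense against API abuse is rate limiting each caller so that a bot cannot hammer an endpoint at machine speed. The sketch below is a standard token bucket keyed by API key; the class names, the default rate, and the burst capacity are illustrative assumptions, and in production this state would typically live in a shared store rather than in process memory.

```python
import time


class TokenBucket:
    """Allows short bursts up to `capacity` while capping the sustained rate."""

    def __init__(self, rate_per_sec, capacity):
        self.rate = rate_per_sec
        self.capacity = capacity
        self.tokens = float(capacity)
        self.last = None

    def allow(self, now=None):
        now = time.monotonic() if now is None else now
        if self.last is not None:
            # Refill in proportion to elapsed time, never beyond capacity.
            self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class ApiKeyLimiter:
    """Keeps one independent bucket per API key (hypothetical defaults)."""

    def __init__(self, rate_per_sec=5, capacity=10):
        self.rate = rate_per_sec
        self.capacity = capacity
        self.buckets = {}

    def allow(self, api_key, now=None):
        bucket = self.buckets.get(api_key)
        if bucket is None:
            bucket = self.buckets[api_key] = TokenBucket(self.rate, self.capacity)
        return bucket.allow(now)
```

With `ApiKeyLimiter(rate_per_sec=1, capacity=3)`, five instantaneous calls for the same key return three `True` values followed by two `False` values, and one more call a second later is allowed again. A token bucket is chosen over a fixed window because it tolerates legitimate bursts without letting a client exceed the average rate.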

The automated nature of such attacks allows for rapid, efficient execution of complex tasks, using advanced algorithms that mimic human behavior to bypass CAPTCHAs and exploit system vulnerabilities. It all raises the question: could the problem be cut off at the source if bad bot traffic could be spotted more easily?

Stopping bot attacks in their tracks

To answer that, it’s important to note that not all bots are malicious; some perform legitimate tasks like indexing for search engines. However, telling good bots from bad ones ain’t always black and white, and plenty of bad bots masquerade rather surreptitiously as benign internet creatures.

There’s a simple (generalized) rule of thumb that sets good and bad bots apart: good bots typically follow set rules and patterns, whereas bad or malicious bots often exhibit erratic or suspicious behavior.
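One concrete way good bots "follow set rules" is that reputable crawlers can be verified. Google, for example, documents forward-confirmed reverse DNS for Googlebot: look up the hostname for the visiting IP, check that it belongs to the crawler's domain, then resolve that hostname and confirm it points back to the same IP. A bad bot that merely copies Googlebot's User-Agent fails this check. This is a minimal sketch; the suffix list is an assumption you should take from each crawler operator's own documentation, and `socket.gethostbyname_ex` only returns IPv4 addresses.

```python
import socket


def verify_crawler(ip, allowed_suffixes=(".googlebot.com", ".google.com"),
                   reverse_lookup=socket.gethostbyaddr,
                   forward_lookup=socket.gethostbyname_ex):
    """Forward-confirmed reverse DNS check for a self-declared crawler IP.

    The lookup functions are injectable so the logic can be tested offline.
    """
    try:
        hostname = reverse_lookup(ip)[0]  # PTR record for the IP
    except OSError:
        return False  # no reverse record, so it cannot be verified
    if not hostname.endswith(allowed_suffixes):
        return False
    try:
        forward_ips = forward_lookup(hostname)[2]  # addresses the hostname resolves to
    except OSError:
        return False
    # Whoever controls an IP range can set any PTR name, so the name must resolve back.
    return ip in forward_ips
```

Results are worth caching per IP, since doing two DNS lookups on every request would be slow.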

A combination of prevention techniques such as behavioral analysis, machine learning, modern authentication methods and network monitoring can help identify malicious bots, and slow or even stop bot attacks. These methods can help identify abnormal patterns or behaviors that indicate bad bot activity. However, attackers are also constantly evolving their tactics, making it an ongoing battle that will likely never see an ‘end.’
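As a toy stand-in for behavioral analysis, the sketch below flags clients whose request rate is far above everyone else's. It uses the median-based "modified z-score" rather than a plain mean and standard deviation, because a single extreme bot would otherwise inflate the standard deviation and hide itself. The threshold of 3.5 is a conventional default for this statistic, and the `min_mad` floor is an assumption added so the score stays defined when most clients have identical rates. Real systems combine many behavioral features, often with machine learning, rather than one counter.

```python
import statistics


def rate_anomalies(requests_per_minute, threshold=3.5, min_mad=1.0):
    """Return client ids whose request rate is an outlier on the high side.

    requests_per_minute: dict mapping client id -> requests seen in the last minute.
    """
    rates = list(requests_per_minute.values())
    if len(rates) < 3:
        return []  # too little data to define "normal"
    median = statistics.median(rates)
    # Median absolute deviation: a spread estimate that outliers barely move.
    mad = statistics.median([abs(r - median) for r in rates])
    mad = max(mad, min_mad)  # floor avoids dividing by zero
    return sorted(
        client for client, rate in requests_per_minute.items()
        if 0.6745 * (rate - median) / mad > threshold
    )
```

For rates of 9 to 12 requests per minute across five clients plus one client at 400, only the last is flagged.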

How Stytch can help

Strong, bot-resistant authentication for systems and devices is one of the most reliable methods for keeping bad bots out. Stytch can help developers and organizations monitor and prevent bot attacks using both physical and API-powered device security measures.

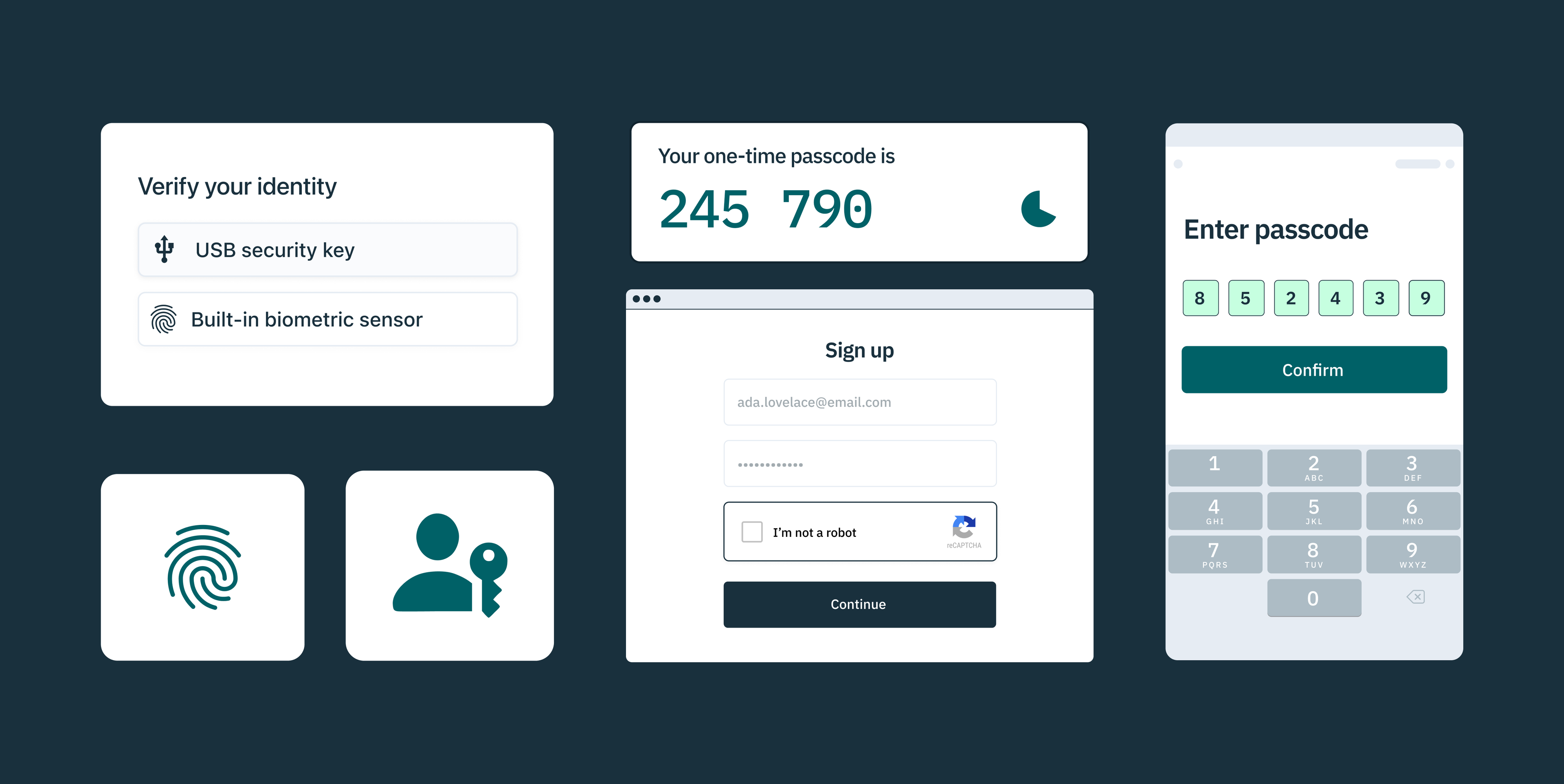

Device fingerprinting identifies unique characteristics of a user’s device, such as the operating system, browser version, and screen resolution, along with network signals like IP address, helping safeguard against bot attacks and other potential breaches.
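The core idea of fingerprinting can be sketched in a few lines: canonicalize the collected attributes and hash them, so the same device configuration always yields the same identifier. This is an illustration only, not how Stytch computes its fingerprints. The attribute names and the three-accounts-per-device limit are hypothetical. The sketch hashes whatever attributes you pass in; many designs keep the IP address out of the hash and evaluate it separately, because it changes whenever a user switches networks.

```python
import hashlib
import json


def device_fingerprint(attributes):
    """Hash device attributes into a stable hex identifier.

    sort_keys makes the result independent of the order attributes were collected in.
    """
    canonical = json.dumps(attributes, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def too_many_accounts(seen, account_id, attributes, max_accounts=3):
    """Record a signup and report whether this fingerprint now exceeds the limit.

    seen: dict mapping fingerprint -> set of account ids (hypothetical storage).
    """
    accounts = seen.setdefault(device_fingerprint(attributes), set())
    accounts.add(account_id)
    return len(accounts) > max_accounts
```

A bot farm that creates many accounts from one emulated device configuration will trip `too_many_accounts` on the fourth signup, while changing even one attribute produces an entirely different hash. That brittleness is why production fingerprinting usually matches devices by similarity rather than exact hash.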

A step beyond traditional CAPTCHA, Stytch’s Strong CAPTCHA uses complex challenges that are easy for humans but difficult for bots to solve, effectively distinguishing between legitimate users and automated systems.

Step-up authentication applies more rigorous checks, such as additional verification steps, when a user logs in from a new location or attempts a high-risk transaction. By leveraging real-time risk assessment, step-up authentication ensures that stronger security measures kick in only when they’re most needed, without adding unnecessary friction or compromising on user experience.
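The decision logic behind step-up authentication can be sketched as a function that returns the reasons for an extra verification step, or an empty list when the request can proceed with no added friction. This covers only the two triggers named above. The field names, the `HIGH_RISK_ACTIONS` set, and the 1,000 amount threshold are hypothetical, and a real risk engine would weigh many more signals than this.

```python
from dataclasses import dataclass

# Hypothetical action names treated as high risk regardless of amount.
HIGH_RISK_ACTIONS = frozenset({"add_payee", "change_email"})


@dataclass
class RequestContext:
    country: str        # where the request appears to originate
    action: str         # what the user is trying to do
    amount: float = 0.0 # transaction value, if any


def needs_step_up(ctx, known_countries, amount_threshold=1000.0):
    """Return the reasons to require extra verification (empty list = no step-up)."""
    reasons = []
    if ctx.country not in known_countries:
        reasons.append("new_location")
    if ctx.action in HIGH_RISK_ACTIONS or ctx.amount >= amount_threshold:
        reasons.append("high_risk_transaction")
    return reasons
```

A routine balance check from a user's usual country returns `[]`, while adding a payee from an unfamiliar country returns both reasons, which the application could map to a stronger challenge such as a passkey prompt.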

Stytch also offers ‘unphishable’ multi-factor authentication (MFA), which requires users to verify their identities using multiple factors drawn from something they know (like a password), something they have (like a hardware token or a registered device), and something they are (like a fingerprint or other biometric data). This makes it challenging for bots and cybercriminals to gain access.
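A detail that is easy to get wrong when implementing MFA is that it counts distinct factor categories, not verification steps: a password plus a security question is still a single "something you know" factor. The sketch below encodes that rule. The method names in `FACTOR_OF` are hypothetical, and the sketch says nothing about phishing resistance, which depends on the factor type (for example, WebAuthn/FIDO2 hardware keys bind each login to the site's origin).

```python
from enum import Enum


class Factor(Enum):
    KNOWLEDGE = "something you know"   # e.g. password, PIN
    POSSESSION = "something you have"  # e.g. hardware key, registered device
    INHERENCE = "something you are"    # e.g. fingerprint, face


# Hypothetical mapping from verified authentication methods to factor categories.
FACTOR_OF = {
    "password": Factor.KNOWLEDGE,
    "pin": Factor.KNOWLEDGE,
    "security_question": Factor.KNOWLEDGE,
    "hardware_key": Factor.POSSESSION,
    "registered_device": Factor.POSSESSION,
    "fingerprint": Factor.INHERENCE,
}


def satisfies_mfa(verified_methods, required_categories=2):
    """True if the verified methods span at least `required_categories` distinct factors."""
    categories = {FACTOR_OF[m] for m in verified_methods if m in FACTOR_OF}
    return len(categories) >= required_categories
```

So `satisfies_mfa(["password", "hardware_key"])` is `True`, while `satisfies_mfa(["password", "security_question"])` is `False` even though the user completed two steps.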

To learn more about how these or other solutions work, reach out to an auth expert to start a conversation, or get started on our platform for free today.

© 2025 Stytch. All rights reserved.