
Bot mitigation for identity and access management

Auth & identity

Feb 6, 2023

Author: Gedney Barclay

A crucial but sometimes overlooked aspect of customer identity and access management (CIAM) is the need to discern whether something interacting with your application is a real human user or a bot. This is also called bot mitigation.

Most CIAM work understandably focuses on how to correctly validate identities and provide the appropriate access to those users, but bot detection and mitigation are essential tools for building signup and login flows that protect both your application and your users.

When done correctly, bot mitigation stops bots at the perimeter of your application, before attacks like phishing, credential stuffing, or account exploitation can take place.

What is a bot? How are malicious bots different?

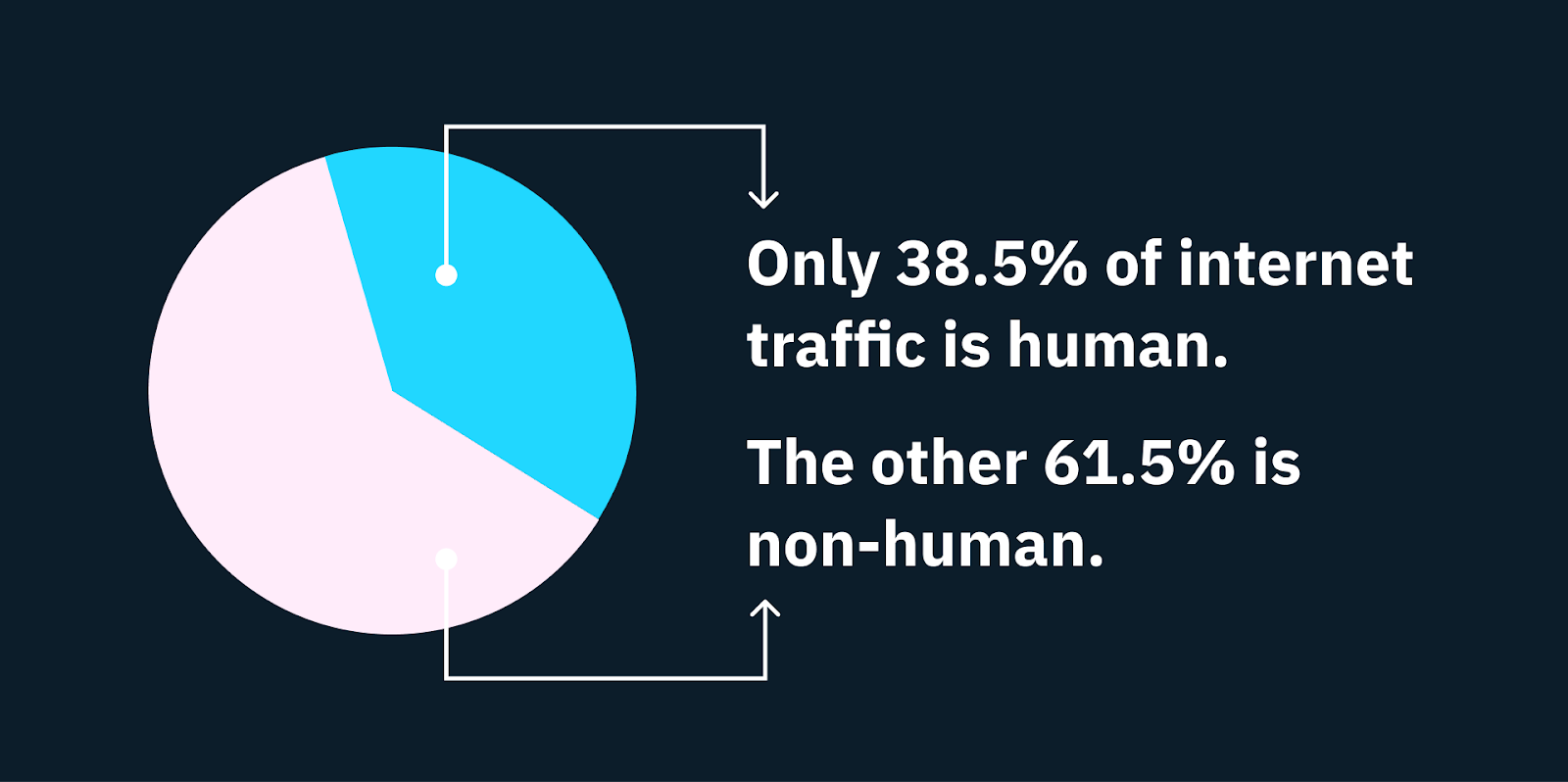

Human beings make up less than 40% of all internet traffic. The other 60+%? Bots. And over half of that traffic is malicious bots.

Bots are software that's been programmed to perform specific, repetitive, automated tasks online. They can be used benevolently — like to answer common customer questions in an ecommerce chat box — but they can also be used by hackers to automate and amplify cyber attacks.

For example, bots can crawl the internet intercepting usernames and passwords, which they then mobilize to breach user accounts on different apps and websites. With bot attacks on the rise, it's no wonder that bot mitigation solutions are increasingly a part of conversations about broader CIAM and fraud and risk prevention.

Common types of bad bots

When it comes to CIAM, malicious bots enter the equation in a couple of different ways and are a common weapon in both first-party and third-party fraud. Before we dive into the specific ways bad bots are used in each fraud vector, let’s define the two fraud types.

First-party fraud

This is when the true account owner is the perpetrator (rather than the victim) of the fraud. There are a number of reasons an attacker would want to create a fraudulent first-party account with your application. Here are some of the most common examples we see:

Abusing signup rewards

In this attack, the intent is to create numerous fake accounts to take advantage of a limited offer (e.g. $10 in cashback for taking a certain onboarding action).

Stockpiling items for resale

Instead of signup, some bots target checkout flows to purchase limited resources only to resell at a higher price on the secondary market. This is particularly common with ticket sales (think Taylor Swift) and sneaker drops.

Creating fintech accounts

Bad bots target certain digital investing, banking, and payment apps by taking advantage of policies that let users spend money before a deposit actually settles in the account. For example, if a user can buy crypto before their ACH payment settles, a fraudster can exploit that delay by spending funds that never arrive.

Third-party fraud

This is when the account owner is the victim of the fraud. The user owns a legitimate account with an application, and that account is stolen by an attacker. This type of attack is called an account takeover or ATO, and the purpose is to exploit the underlying value of the account, which may hold money or sensitive data that the attacker can monetize. Here are a few of the attacks that can lead to account takeover via this vector:

Credential stuffing

In these cases, bots try to log in with large numbers of credentials stolen in other data breaches. If a set of credentials is validated during this automated attack, the attacker knows those credentials can be used to breach the account.
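As a rough illustration of how this pattern can show up in login logs, the sketch below flags sources that try many different usernames and fail almost every time. The function name, the tuple layout, and both thresholds are hypothetical choices for this example, not part of any particular product.

```python
from collections import defaultdict


def flag_stuffing_sources(attempts, min_usernames=20, min_failure_rate=0.9):
    """Return the set of source IPs whose login pattern resembles credential stuffing.

    attempts: iterable of (source_ip, username, succeeded) tuples.
    Thresholds are illustrative assumptions and would need tuning on real traffic.
    """
    usernames = defaultdict(set)
    totals = defaultdict(int)
    failures = defaultdict(int)

    for source_ip, username, succeeded in attempts:
        usernames[source_ip].add(username)
        totals[source_ip] += 1
        if not succeeded:
            failures[source_ip] += 1

    flagged = set()
    for source_ip, total in totals.items():
        failure_rate = failures[source_ip] / total
        # Many distinct usernames plus a very high failure rate is the signal.
        if len(usernames[source_ip]) >= min_usernames and failure_rate >= min_failure_rate:
            flagged.add(source_ip)
    return flagged
```

A real user who mistypes a password a few times touches only one username, so they stay well below the distinct-username threshold. A botnet spreads attempts across many IPs, which is one reason a per-IP check like this is usually only one signal among several.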

Phishing

This can be perpetrated by real humans or bots. The intent is to trick a user into unwittingly providing their credentials (password, one-time passcode, etc.) to an attacker. Users often believe they’re providing their credentials to a secure site or to a bona fide support representative at the targeted application.

What are botnets? Is that the same as first-party fraud?

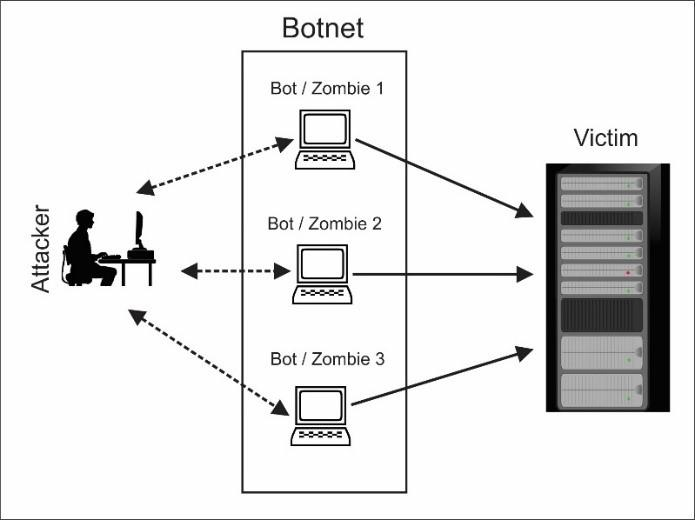

A botnet (short for “robot network”) is a network of machines infected with malware that carry out tasks on behalf of an attacker, called the bot herder.

Botnets are typically established when the bot herder gains unauthorized access to other people’s machines and installs the bot on them. They do this through file sharing, emails, or other channels in which people are tricked into downloading and opening a malicious file, without ever knowing anything has happened. Once the bot is installed on a victim’s machine, the bot herder can control its actions remotely.

A diagram of how botnets leverage other machines to perpetrate attacks.

Botnets can be used for both first-party and third-party fraud. Botnets can infect millions of machines at a given time, giving the hacker controlling them a great deal of computing power. For attacks of scale that are best carried out by a large number of bots performing the same action (credential stuffing, stockpiling the latest Nike drop, etc.), they can be incredibly effective – and harmful.

Because botnets can be used for both first- and third-party fraud, the bot mitigation solutions for botnets vs. a single bot are usually the same.


Which bot attacks should I worry about?

Many companies face both types of fraud, but which attack surface (signup vs. login) is more frequently and aggressively targeted depends on which vector provides the most potential monetization value to attackers.

Put another way, the incentives are different for bots attacking signup and checkout forms vs. login flows. Most first-party fraud focuses on the account creation flow, and most third-party fraud focuses on the login flow. First-party fraud abuses your system by creating fake accounts, while third-party fraud abuses your system by stealing existing ones.

So, if you’re an ecommerce site or marketplace with limited-time rewards and deals, or a fintech company that lets customers spend before their deposits go through, your signup and account creation flows are more likely to be a target for bot attacks than your login flow for existing customers. That’s likely where you want to focus your anti-fraud protections the most.

If, on the other hand, your customer or employee accounts have access to large amounts of sensitive data, there’s a higher incentive for attackers to target your login flow through third-party fraud tactics like credential stuffing and phishing. In that case, you’d likely want to invest in bot mitigation as well as unphishable authentication methods, since those tactics are your most probable attack vectors.

Bot mitigation – telling bots from humans

To understand the field of bot mitigation, it can be helpful to first think about where and when online users provide signal as to who they are and what they’re trying to do – signals beyond their username and password.

There are several junctures in the signup or login process where bots may give away their, well, bot-ness (some of these are also general indications of fraud, whether from a human or a bot):

Speed

Bots can perform tasks on the web much faster than humans – this is one of their biggest advantages as a tool both for good and for ill. So if a user is creating an account or logging in at a super-human speed, it’s quite possible it’s bot traffic.
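A minimal sketch of a speed check, assuming the application records when a form was rendered and when it was submitted. The function name and the two-second floor are illustrative assumptions, not a recommended value.

```python
def looks_superhuman(form_rendered_at, form_submitted_at, min_seconds=2.0):
    """Return True if a form was completed faster than a person plausibly could.

    Both timestamps are seconds (for example, Unix time) recorded server-side.
    min_seconds is a hypothetical floor; a real value depends on the form.
    """
    elapsed = form_submitted_at - form_rendered_at
    # A negative elapsed time (submitted before rendered) is also suspicious,
    # and is covered by the same comparison.
    return elapsed < min_seconds
```

Sophisticated bots can add artificial delays, so a check like this mostly filters out unsophisticated automation.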

Volume

Similarly, most people don’t try to log in to their account thousands of times in an hour. They’ll usually try a few times and then call customer service or initiate a password reset. If a “user” is attempting certain repetitive tasks at a volume no typical user would benefit from, it might be a bot.
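One common way to act on volume is a sliding-window counter per account or per source. The sketch below is an in-memory illustration; the class name, the limits, and the choice of key are assumptions, and a production system would typically keep this state in a shared store.

```python
from collections import defaultdict, deque


class AttemptCounter:
    """Track attempts per key and report when a key exceeds a limit within a window."""

    def __init__(self, max_attempts=10, window_seconds=3600):
        self.max_attempts = max_attempts
        self.window_seconds = window_seconds
        self._attempts = defaultdict(deque)

    def record(self, key, now):
        """Record an attempt at time `now` (seconds); return True if over the limit."""
        window = self._attempts[key]
        window.append(now)
        # Drop attempts that have aged out of the window.
        while window and window[0] <= now - self.window_seconds:
            window.popleft()
        return len(window) > self.max_attempts
```

For example, with `AttemptCounter(max_attempts=3, window_seconds=60)`, the fourth attempt for the same key inside a minute returns `True`, and the count resets once earlier attempts age out.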

Intelligence

Bots are usually designed to perform a very limited number of tasks, and thus don’t have much flexibility to perform tasks outside of what they were programmed to do.

Consistency

Like human fraudsters, bots may also give themselves away through behavior or characteristics that are inconsistent with a user’s typical behavior. If a user usually logs in from the same IP address, zip code, or device, and is suddenly halfway across the world on a new machine, there’s a strong possibility the “user” is a bot, fraudulent, or both.
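The consistency idea can be sketched as a comparison between the current login and what has been seen before for that account. The `LoginContext` fields and signal names here are hypothetical, chosen only to mirror the examples in the paragraph above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LoginContext:
    ip_address: str
    country: str
    device_id: str


def inconsistency_signals(current, history):
    """List the ways `current` differs from every previously seen login context."""
    if not history:
        return []  # First login: nothing to compare against.

    signals = []
    if all(current.device_id != past.device_id for past in history):
        signals.append("new_device")
    if all(current.country != past.country for past in history):
        signals.append("new_country")
    if all(current.ip_address != past.ip_address for past in history):
        signals.append("new_ip")
    return signals
```

A single signal such as a new IP address is common for legitimate users (travel, mobile networks), so an application would more plausibly act when several signals appear together.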

There are of course more technical nuances to these categories of bot detection methods, but they give a general overview of what bot mitigation tools use to identify and stop bot traffic.

Bot mitigation products

Today, two of the most common tools to thwart malicious bot traffic are CAPTCHA and device fingerprinting.

CAPTCHA

CAPTCHA, or Completely Automated Public Turing test to tell Computers and Humans Apart, is a bot mitigation solution that uses a computer-generated puzzle or question to stump most bots. Bots are programmed to carry out specific, repetitive tasks within a narrowly defined scope. CAPTCHA puzzles are designed to give bots tasks outside of that scope, like identifying objects in a picture, solving basic math problems, or transcribing audio.

Different types of CAPTCHA

It’s worth noting, though, that not all CAPTCHA products are created equal, either for security or customer experience. Invisible reCAPTCHA from Google is a more recent kind of CAPTCHA that evaluates traffic without ever disrupting the user experience (hence the invisible part). But there are also hackers who use something called CAPTCHA fraud. The companies behind it, also called CAPTCHA farms, hire real humans to complete CAPTCHA puzzles on behalf of attackers, using a loophole in CAPTCHA’s source code.

This is the landing page of one of the largest CAPTCHA solving services. Its website highlights why you should choose it over competitors: it’s cheap and reliable.

Bot mitigation solutions like Stytch’s Strong CAPTCHA have protections built against CAPTCHA fraud, but not all CAPTCHA services do. Depending on the sophistication of your company’s typical attacker, you may want to consider more thorough or ironclad CAPTCHA services. Because CAPTCHA puzzles introduce a bit of friction into the user flow, they’re best reserved for situations that are either highly sensitive or in which fraud is suspected for other reasons.

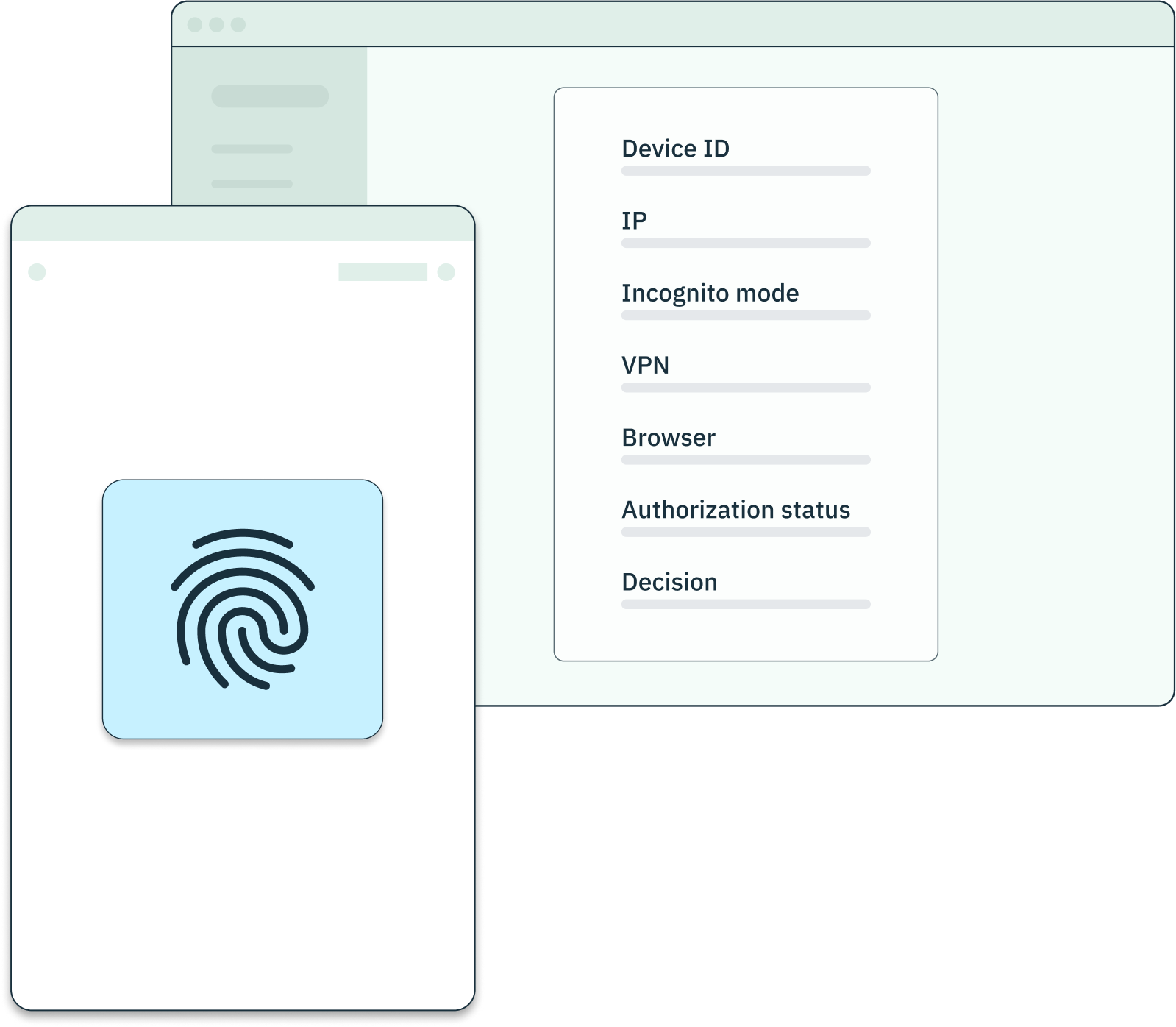

Device fingerprinting

Device fingerprinting is a bot mitigation solution that identifies the devices accessing a website or application, then stores those devices and associates them with different user accounts. A device’s identity can be composed of a number of attributes that an application detects when the user accesses the site or app, which are then associated with a unique ID. These attributes range from things like an IP address or browser type to things like graphics card model, browser default language, etc.
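To make the idea of deriving a unique ID from a set of attributes concrete, here is a deliberately naive sketch that hashes a dictionary of attributes. The function name and attribute keys are hypothetical, and commercial fingerprinting products use far more signals and more robust matching than an exact hash.

```python
import hashlib
import json


def device_fingerprint(attributes):
    """Derive a stable ID from a dict of device attributes.

    Keys are sorted before hashing so the same attributes always produce
    the same ID, regardless of the order in which they were collected.
    """
    canonical = json.dumps(attributes, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()
```

Because this is an exact hash, changing any single attribute (a browser update, for instance) yields a completely different ID, which is one reason real systems tend to rely on fuzzier matching.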

Different types of device fingerprinting

As with CAPTCHA, not all device fingerprinting services are created equal.

In part, this is because as device fingerprinting has become more common, hackers have designed more sophisticated bots that appear more like humans. For instance, many hackers throttle their bots so that they move and perform actions at a speed closer to a person’s. They can also use VPNs to mask IP addresses, or incognito browsers to shed cookies and other stored identifiers.

So when looking at device fingerprinting vendors, it’s important to ask which attributes the fingerprint collects, and what other kinds of bot mitigation or authentication are stacked with it. Some solutions, like Stytch’s Device Fingerprinting, use highly unique combinations of device properties, such as operating system, browser version, and IP address, whereas others just use a combination of browser make and model and time zone.

Remember: the more unique the fingerprint, the stronger the solution at stopping bots in their tracks.
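One way to put a number on "more unique" is Shannon entropy: the more evenly a fingerprint splits a population of devices into distinct values, the more bits of identifying information it carries. The helper below is a small worked illustration with made-up data, not a description of how any vendor scores fingerprints.

```python
import math
from collections import Counter


def entropy_bits(values):
    """Shannon entropy, in bits, of the observed distribution of fingerprint values."""
    counts = Counter(values)
    total = sum(counts.values())
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


# Hypothetical population of four devices: (browser, time zone).
devices = [("Chrome", "UTC"), ("Chrome", "UTC"), ("Firefox", "UTC"), ("Chrome", "PST")]
browser_only = [browser for browser, _ in devices]

# Adding the time zone separates devices that the browser alone lumps together.
assert entropy_bits(browser_only) < entropy_bits(devices)
```

In this toy population, the browser alone leaves three of four devices indistinguishable, while the combined fingerprint leaves only two sharing a value.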

Unlike CAPTCHA, device fingerprinting is completely unobtrusive to the user experience unless fraud is suspected. So while CAPTCHA solutions may only be introduced on a case-by-case basis, device fingerprinting is a broader bot mitigation solution that’s easier to implement without disrupting a user’s normal signup or login flow.

Which bot mitigation solutions are best for me?

This kind of arms race between cybersecurity professionals and hackers may seem overwhelming, especially if you’re just trying to sell shoes or provide a B2B data analytics tool.

But as in other areas of authentication, not every hacker on the internet is using the most sophisticated techniques to commit fraud. In fact, like legitimate businesspeople, they’re usually going to invest only as much as they think they need to succeed, in order to maximize their ROI.

When evaluating different bot mitigation services, you need to understand what those hackers are trying to do, and how hard they’re willing to try to evade detection. The more valuable and sensitive the information they’re after, the more sophisticated their techniques will likely get.

Choose bot mitigation based on risk and sophistication

At Stytch, we believe that authentication and fraud prevention should only introduce friction where it’s absolutely critical. When talking with customers, we usually scale our recommendations for fraud prevention based on the amount of risk and the sophistication of the hacker, to minimize any unnecessary disruption to the user flow.

- For the least sophisticated attackers + spammers, invisible CAPTCHA will likely do the trick.

- For medium-to-highly sophisticated attackers, device fingerprinting is recommended to pierce the various deceptions they may be attempting.

- For extremely advanced attackers, it’s best to layer CAPTCHA on top of device fingerprinting. If hackers are investing a lot of money to look like a legitimate device + browser, they’re also likely willing to invest in something like a CAPTCHA farm, so it’s worth also making them perform a Turing test in case their device deception is highly effective.

The other important layer to consider, beyond hacker sophistication, is your company’s level of risk and the sensitivity and/or value of the data hackers are after. If you’re curious about how bot mitigation tools could help protect your product and customers, talk with an auth expert at Stytch today.
