Back to blog

Top strategies to prevent web scraping and protect your data

Auth & identity

Oct 2, 2024

Author: Alex Lawrence

Top strategies to prevent web scraping and protect your data

Web scraping is the automated process of collecting and extracting data from websites. This technique typically involves the use of software tools or scripts, bots, or headless browsers that navigate web pages and gather information, which can then be stored and analyzed for various purposes, such as market research, content aggregation, or competitive analysis.

As with many useful tools and techniques, fraudulent actors often take advantage of web scraping to expose private data by scraping it from various websites. As a result, more businesses and organizations are taking measures to prevent web scraping and protect their data.

In this article, we'll go over what web scraping is, its legitimate uses, as well as how it's used for fraud and the top strategies you can implement to safeguard your data from these automated threats.

Understanding web scraping

Web scraping is automated web data extraction from websites, a process that has become increasingly sophisticated over time. Initially, web scrapers used simple bots to extract data, but modern techniques now include the use of AI-driven tools that employ machine learning to interpret and adapt to dynamic web environments. This evolution makes the task of distinguishing between legitimate users and malicious bots more challenging than ever.

Scripts, bots and browsers

The core of web scraping involves programming bots to mimic user interactions with websites. These bots use technologies such as automated scripts, headless browsers, and HTTP requests to extract data by impersonating legitimate (human) users. For instance, a web scraper might simulate a user browsing through pages and clicking on links to gather information. This can be particularly problematic for websites that rely on user engagement and interaction for their business models as such undesirable traffic not only threatens security but heavily skews engagement metrics

To the credit of how the technology has evolved, web scrapers are often indistinguishable from real users. They can load the initial HTML web page, parse the content, and extract data such as product prices and stock levels to user reviews and contact information with ease. The scraped data is then stored and used for various purposes such as useful competitive analysis or malicious data theft. We'll cover the former benign utility of web scraping first to better understand how the technique came to be so prevalent .

Legitimate uses of web scraping

Web scraping is a powerful tool employed by developers for various legitimate purposes across multiple industries, such as:

- QA testing for web apps: Developers can use web scraping to automate testing processes, ensuring that web applications perform consistently across different environments and updates. Scraping tools can simulate user interactions, helping to identify bugs or issues in user-facing features.

- API development and verification: Web scraping helps developers ensure that APIs are working correctly by extracting data and verifying responses. This is particularly useful when testing integrations between web applications and external services or APIs.

- Data pipeline creation: Developers use web scraping to gather and structure large datasets from different sources as part of data pipeline creation. Scraped data can be used for machine learning models, analytics, or other data-driven applications.

- Continuous integration/continuous deployment (CI/CD) verification: During CI/CD processes, developers can use web scraping to verify that updates to a web application do not break existing functionality. Automated scripts can scrape content after deployments to confirm that changes are successfully integrated.

- Automated workflows and form submissions: Scraping tools can automate repetitive tasks such as filling out forms or navigating through workflows, which is particularly useful for internal testing or operational automation. Developers use this technique to streamline processes like user onboarding or data entry validation.

Malicious Web Scraping

Web scraping's darker side is perpetrated by individuals and organizations who misuse the technology for harmful purposes. Here are some of the most common forms of malicious web scraping:

- Data harvesting: Collecting personal information such as emails, phone numbers, and social profiles to sell to third parties, infringing on user privacy.

- Click fraud: Automated bots click on online advertisements to generate false revenue, leading to financial losses for advertisers.

- Price scraping: Competitors scrape pricing data to undercut legitimate businesses, disrupting fair market practices.

- Content theft: Copying content from websites without permission, potentially damaging the original creator's brand and search engine rankings.

- API Abuse/Resource Abuse: Automated scraping tools excessively call APIs or abuse server resources, overwhelming infrastructure and leading to increased costs or degraded performance for legitimate users. This abuse can result in higher operational costs and potential service outages for businesses.

A strong awareness of the above methods can help organizations, developers and their teams implement effective strategies to combat harmful scraping activities. Historically, this has been a difficult challenge, requiring strong bot and automated browser detection alongside refined, well-rehearsed mitigation techniques.

Technology and techniques to prevent web scraping

Preventing web scraping is challenging due to the broad targeting capabilities of scrapers, which can access any public-facing application within a domain. Scrapers often mimic real user behavior, such as browsing pages and interacting with forms, and most scraping attacks use simple HTTP GET requests that are hard to differentiate from normal traffic, complicating detection.

Despite these challenges, implementing a combination of best practices and advanced techniques is crucial to protect valuable data, maintain website performance, and ensure fair use of online resources. These methods, while not foolproof, provide a solid foundation for protecting your data.

IP Blocking

IP blocking restricts access based on a user’s IP address to prevent web scraping, effectively blocking known malicious IPs. While useful, it has limitations, as scrapers often rotate IP addresses and use proxy servers or VPNs, complicating detection. Geographic restrictions can enhance its effectiveness, but IP blocking should be combined with other security measures to ensure comprehensive protection.

CAPTCHA

CAPTCHA challenges help distinguish between humans and bots by requiring users to complete puzzles or tasks. Adaptive CAPTCHAs adjust their difficulty based on user behavior, making them effective against scrapers. However, they can frustrate users and be bypassed by sophisticated scripts. Despite these drawbacks, CAPTCHAs provide an additional layer of security when combined with other methods like IP blocking and firewalls.

Firewalls

Firewalls, especially Web Application Firewalls (WAF), filter traffic and block requests that exhibit scraping behavior. They can identify patterns like repeated access from the same IP or traffic spikes and prevent scrapers from extracting data. Proper configuration and regular updates are essential to avoid blocking legitimate users, and firewalls should be part of a multi-layered defense strategy alongside IP blocking and CAPTCHA.

Rate limiting and request throttling

Rate limiting controls the number of requests a single IP or user agent can make within a specific timeframe, slowing down scrapers and protecting server resources. Request throttling complements this by controlling the speed at which requests can be made, reducing the overall traffic pace without fully blocking access. Together, these methods can temporarily block or slow down IPs that exceed set thresholds, making them effective in mitigating the impact of high-volume scrapers.

Obfuscation and dynamic content loading

Obfuscation and dynamic content loading are advanced techniques that make data extraction harder for scrapers. Obfuscation involves altering HTML structures, IDs, and classes, or using JavaScript to dynamically load content, complicating scraper efforts. Dynamic content loading, such as lazy loading or AJAX requests, delays content display, making it harder for basic HTML parsers to access data. However, these techniques can also hinder real users and complicate legitimate access, so balancing them with user experience is essential.

Stytch Device Fingerprinting

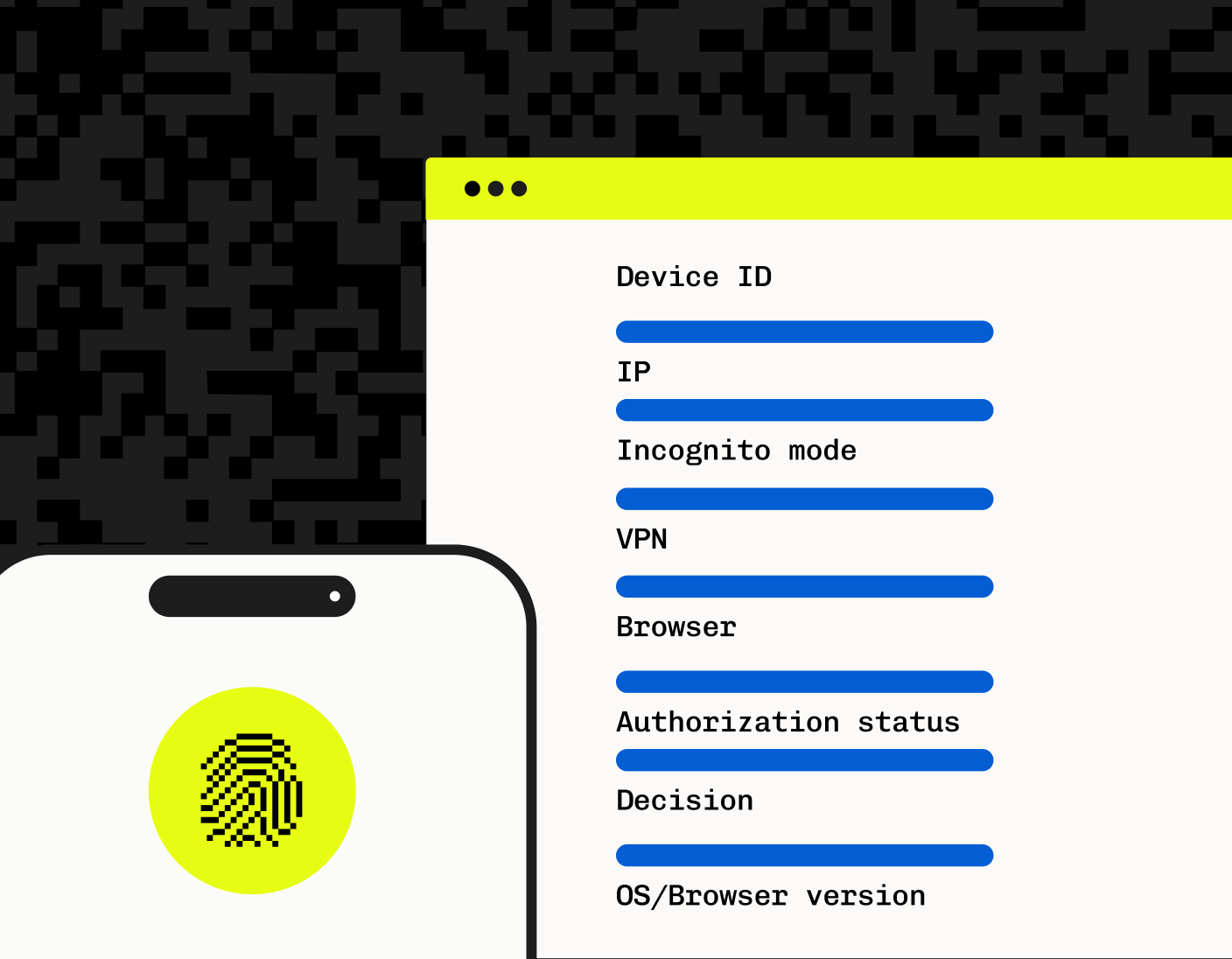

Stytch's Fraud & Risk Prevention solution offers major improvements over all traditional anti-web scraping technologies by leveraging Device Fingerprinting (DFP), which uniquely identifies devices accessing a website by collecting various attributes from user devices. This includes details like network, screen resolution, operating system, and active plugins, which are combined to create a unique identifier for each device. Accurately identifying devices through DFP aids in the precise detection of scraping activities.

With device fingerprinting, Stytch's Fraud & Risk solution enables developers to use features such as:

- Invisible CAPTCHAs: Deliver stronger, invisible CAPTCHAs that use Stytch fingerprinting to ensure the device solving them is the same one that received them, not a CAPTCHA farm or an AI agent.

- Intelligent Rate Limiting: Dynamically rate limit anomalous traffic based on analysis of device, user & traffic sub-signals.

- Security Rules Engine: Programmatically define access rules for different types of traffic visiting your app

- ML-Powered Device Detection: Gain zero-day threat detection and protect your app with Stytch's supervised ML model to detect and categorize new threats in real time.

Stytch Device Fingerprinting creates unique identifiers and leverages advanced techniques to combine various device attributes, such as browser type, screen size, and operating system, to create a robust and tamper-resistant fingerprint. The solution provides clear action recommendations, such as ALLOW, BLOCK, and CHALLENGE, based on the detected device’s behavior.

By employing Stytch Device Fingerprinting, businesses can enhance their detection capabilities against web scraping attempts, ensuring comprehensive data protection and maintaining the integrity of their online resources.

To learn more about Stytch DFP and it’s powerful ability to identify devices across your web traffic to prevent web scraping, get in touch with an auth expert, or get started using Stytch, today.

Build Fraud & Risk Prevention

Pricing that scales with you • No feature gating • All of the auth solutions you need plus fraud & risk

Authentication & Authorization

Fraud & Risk Prevention

© 2025 Stytch. All rights reserved.