What is a bot and how does it work?

Auth & identity

Nov 21, 2023

Author: Alex Lawrence

What is a bot, exactly?

A bot, short for robot, refers to computer software programmed to perform automated tasks. Bots are everywhere, from chatbots on customer service platforms that can simulate human conversation, to search engine bots that index web pages.

Bots operate under a wide umbrella of functions, with some designed to help human users navigate the vastness of the internet. Others may serve more dubious purposes, and we’ll cover the mechanics of both in this article.

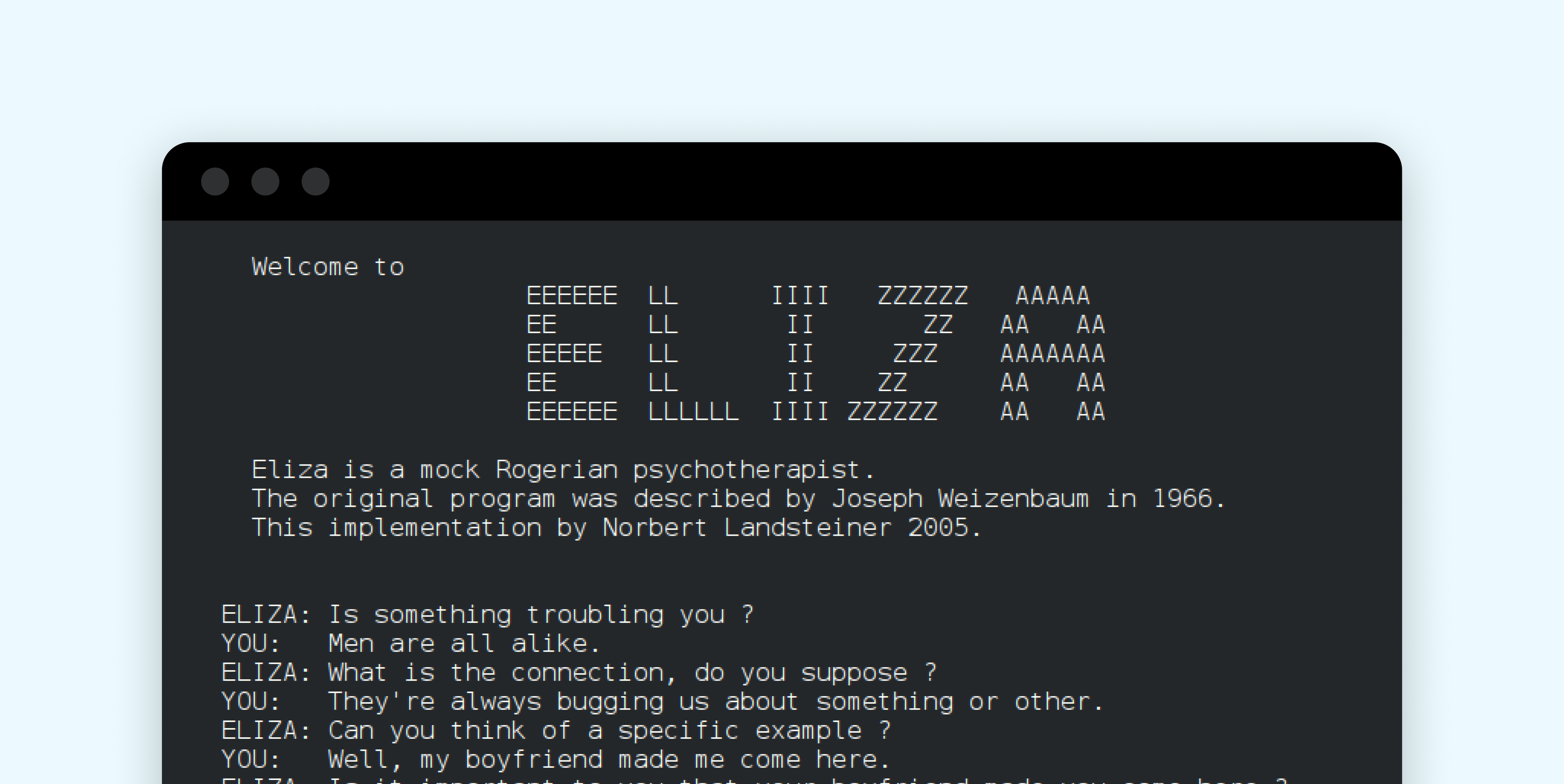

ELIZA: the birth of a bot

Bots have been around since the early days of computing, with the first one being developed in 1966 by MIT Professor Joseph Weizenbaum. Named ELIZA, this chatbot was programmed to simulate a psychotherapist by responding with scripted phrases based on keywords from the user’s input. It sparked widespread interest and set off a wave of innovation in the field of artificial intelligence, leading to some of the great innovations we’re seeing today.

Early experiments such as ELIZA laid the groundwork for the expansion of bots into our digital lives. These rudimentary systems relied primarily on pattern matching and substitution to simulate conversation. Over the decades since, advances in Machine Learning (ML), Artificial Intelligence (AI), and Natural Language Processing (NLP) have revolutionized what bots can do.
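
To make pattern matching and substitution concrete, here is a minimal sketch in Python. The rules, reflections, and fallback phrase are hypothetical examples written for this post, not ELIZA's original script, and the real program was considerably more elaborate.

```python
import re

# Hypothetical rules for illustration; these are NOT ELIZA's original script.
# Each rule pairs a keyword pattern with a response template.
RULES = [
    (re.compile(r"\bi need (.+)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (mother|father)\b", re.IGNORECASE), "Tell me more about your {0}."),
]

# Swap first-person words for second-person ones so the echoed text reads naturally.
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are"}

FALLBACK = "Please go on."


def reflect(fragment: str) -> str:
    """Substitute pronouns word by word in the captured fragment."""
    words = fragment.lower().split()
    return " ".join(REFLECTIONS.get(word, word) for word in words)


def respond(user_input: str) -> str:
    """Return the response for the first rule whose pattern matches."""
    text = user_input.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            return template.format(reflect(match.group(1)))
    return FALLBACK


print(respond("I need a break from my job."))
```

The bot has no understanding of the sentence. It finds a keyword, captures what follows, swaps a few pronouns, and drops the result into a canned template.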

Today, bots are integral to search engines, where they handle web crawling and indexing, and they play a crucial role in engagement on social media platforms.

Types of Bots

So what is a bot in real life, today? There are various types of bots, each designed for specific purposes. They include:

- Web scraping bots: These bots crawl through web pages, extracting data such as prices, reviews, and product information.

- Transactional bots: These bots handle transactions automatically, such as booking flights or making purchases.

- Chatbots: These bots simulate human conversation and are commonly used for customer service purposes.

- Social media bots: These bots can automatically publish content, like other users' posts, and follow or unfollow accounts on social media platforms. They can also be used for malicious purposes, such as spreading fake news or manipulating public opinion.

- Entertainment bots: These bots are designed to entertain users through conversation or gameplay. Examples include virtual assistants like Siri and Alexa, and gaming bots on platforms like Discord.

- Health bots: These bots assist in healthcare tasks such as scheduling appointments, providing medical advice, and monitoring health data.

- Finance bots: Sometimes known as robo-advisors, these bots handle financial tasks such as stock trading, personal budgeting, and fraud detection.

In recent years, the use of chatbots has surged relative to other types of bots, particularly in customer service. Companies are leveraging chatbot technology to automate repetitive tasks and provide quick responses to common inquiries, freeing up human employees to focus on more complex issues.

Major tech companies like Google, Facebook, and Amazon have all invested heavily in chatbot development, with the goal of creating more efficient and streamlined interactions for their users. But as useful as bots have proven to be, a substantial risk is constantly evolving beneath the surface.

Bots make up half of the internet

Ever wonder if that person really has 100k followers? Bots account for a significant amount of internet traffic — so much so that they’ve blended right into the human cohorts of many online platforms. We rub shoulders with bots just about every time we hop on a website or social media platform.

According to reports, 47.4% of all internet traffic comes from bots, meaning that nearly every second click, view, or interaction online could be attributed to a bot rather than a human. They are pervasive across social media and other online sectors, from e-commerce sites where they simulate customer interactions to news platforms where they can amplify specific articles or narratives. Search engines also see significant bot traffic, with bots performing automated queries or scraping content. Even online gaming isn’t immune, with bots often used to automate gameplay or manipulate in-game payments.

The dual nature of bot traffic

Just like humans online, bots can be both benevolent and malicious.

Good bots, like those that power search engines and provide instantaneous customer service, have become essential to the efficiency of online services. While these good bots help manage the flow and sharing of information, increasing engagement and facilitating smoother user experiences, bad bots can turn it all right on its head, leading to a host of cybersecurity challenges.

Malware bots, spam bots, OTP bots, and other malicious bots are frequently implicated in fraudulent activities and identity theft, and can even disrupt services through bot attacks like DDoS.

Understanding this dual nature is crucial for anyone navigating the digital world — from individual users to large corporations.

Bot behavior: The good

No place is more fertile ground for human imitation than the web, where an internet bot can be programmed to act as a human user, creating user accounts and interacting with content. These functions can arguably have both positive effects, like boosting engagement for good causes, and negative ones, such as spreading disinformation.

The staggering power of machine learning and artificial intelligence has made all of this possible at a new scale of impact. We’ll start with the encouraging positive impacts of these powerful virtual machines.

Machine learning and AI: breakthrough bot behaviors

Modern bots have advanced far beyond simple, repetitive tasks. Today, various types of bots leverage cutting-edge technologies such as artificial intelligence (AI) and machine learning (ML) to learn from their interactions and improve their responses over time. This allows them to mimic human-like behaviors, making them harder to detect and block. For instance, a chatbot may use natural language processing (NLP) and machine learning to better understand and respond to human language over time, improving its ability to provide customer service.

Internet bots can also employ advanced techniques like rotating IP addresses or changing user agents to evade detection mechanisms. Some sophisticated internet bots may even use CAPTCHA-solving services to bypass security measures, which in turn calls for stronger CAPTCHA design.

In essence, the complexity of tasks a bot can execute is only limited by the sophistication of its programming. These advanced capabilities make them a double-edged sword – while they can enhance efficiency and productivity when used responsibly, they can also cause significant harm when used maliciously.

Friendly bots on social media

First, the good news: when used ethically, bots can significantly enhance the user experience on social media platforms, thanks in part to the incredible advancements in AI and ML. They play a crucial role in customer service, handling common queries automatically and freeing up humans to handle more complex issues.

Bots can also aid in content curation, helping users discover content tailored to their interests and behavior. They’re also able watchdogs, and can be programmed to monitor a platform for harmful behavior such as hate speech or cyberbullying, providing a safer and more inclusive environment for users.

Customer service bots

Customer service bots, powered by natural language processing, can handle a multitude of user requests simultaneously, from answering FAQs to guiding users through troubleshooting processes. These bots work by analyzing the input from the user and providing the most accurate response based on their programming and learned experiences.

In high-traffic customer service scenarios, bots can absorb high volumes of inquiries, ensuring that conversations with live agents are reserved for more complex issues. This type of bot saves users time and relieves the workload of human customer service agents, improving overall efficiency.

On the commerce side, advanced customer service bots can personalize interactions by accessing user accounts and their history to provide tailored assistance. This level of service, which mimics human conversation and attention to detail, has become a benchmark for modern customer support.

Bot behavior: The bad & the ugly

Unfortunately, it’s not all sunny days with the advent of machine learning, particularly on social media. Using ML, bots can create human-like posts and build networks to amplify their (often harmful or deceptive) messages. As a result, detecting and preventing malicious bot activity, such as the spread of dangerous or false information, has become an essential challenge for social media platforms.

Given their capability of mimicking human interactions to a degree that can often deceive actual human users, bot management on these platforms is crucial.

Bot management on social media

Malicious bot activity is an evergreen threat these days, and social media companies invest heavily in bot management solutions to differentiate between legitimate human users and bots. This involves detecting patterns that are not characteristic of human behavior, such as a large number of requests arriving from the same IP address in a short period (indicating a potential bot attack).
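
As a rough illustration of that kind of pattern detection, the sketch below counts requests per IP address inside a sliding time window and flags any address that exceeds a threshold. The class name, threshold, and window size are assumptions chosen for the example. Production bot management combines many more signals than request rate alone.

```python
from collections import defaultdict, deque


class RequestRateMonitor:
    """Flags IP addresses that send too many requests within a time window.

    The default limits are hypothetical values for illustration only.
    """

    def __init__(self, max_requests: int = 5, window_seconds: float = 10.0):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._recent = defaultdict(deque)  # ip -> timestamps of recent requests

    def record(self, ip: str, timestamp: float) -> bool:
        """Record one request; return True if this IP now exceeds the limit."""
        window = self._recent[ip]
        window.append(timestamp)
        # Discard timestamps that have fallen out of the sliding window.
        while window and timestamp - window[0] > self.window_seconds:
            window.popleft()
        return len(window) > self.max_requests


monitor = RequestRateMonitor(max_requests=3, window_seconds=10)
flags = [monitor.record("203.0.113.7", t) for t in (0, 1, 2, 3)]
print(flags)
```

In this run the fourth request inside ten seconds trips the limit. A human refreshing a page would rarely hit it, while a script hammering an endpoint would hit it almost immediately.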

In addition to technical measures, some platforms also rely on user reports and community moderation to identify and remove malicious bot accounts. The constant evolution and growing sophistication of bots make this a continual challenge with a steep learning curve.

What is a bot attack?

A bot attack refers to a malicious attempt by one or more bots to compromise, disrupt, or manipulate digital systems, networks, or online services. Bad bots can perform a range of activities, from brute force attacks that aim to break into user accounts to sophisticated schemes that mimic human activity to evade detection. This makes malicious bots a threat to many different types of industries – particularly banks, businesses, and financial institutions.

Risks associated with malicious bot activity

Some of the dangers to businesses and individuals posed by malicious bots & attacks include:

- Website scraping: Bots can be used to scrape large amounts of data from websites. These download bots can potentially expose sensitive information.

- Account takeover attacks: Bots can attempt to log in to user accounts using stolen credentials or brute force techniques. The stolen credentials are often harvested through phishing scams.

- Price and inventory manipulation: Bots can be used to artificially inflate or deflate prices and manipulate inventory levels.

- Ad fraud: Bots can mimic human behavior and generate fake clicks on online ads, costing businesses millions of dollars each year.

- Website takedowns: These are often led by Distributed Denial of Service (DDoS) bots that can take down websites by overwhelming them with traffic.

Putting a stop to the malicious bot economy

There’s an entire underground economy based around malicious bots and the profitable criminal opportunities they offer, and monitoring bots and their many associated pathways to fraud is no easy feat.

Given that bots can penetrate an organization’s defenses in numerous ways, a multi-faceted approach is needed to stop malicious bots, including web app security platforms that can detect and block bot traffic, machine learning tools that can learn and adapt to new bot behaviors, and vigilant monitoring by bot managers to continuously update defense mechanisms.

Here are some of the ways businesses and organizations are shutting down malicious bots through technology:

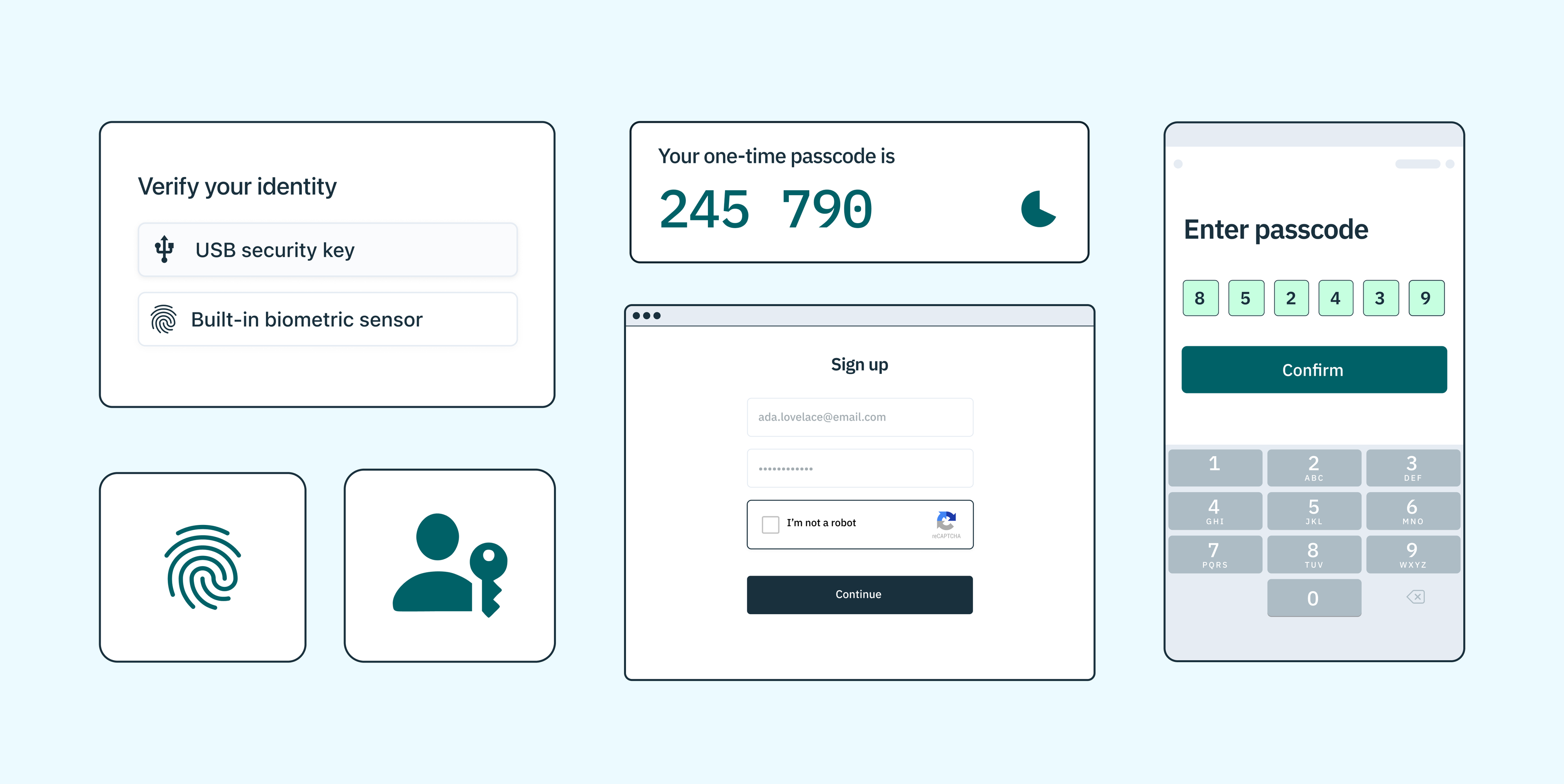

- CAPTCHA: Completely Automated Public Turing test to tell Computers and Humans Apart (CAPTCHA) is a tool that challenges users to prove that they are human. This is often done through tasks that are difficult for bots to perform, like identifying distorted text or images.

- Behavioral Analysis: By monitoring how users interact with a website, it is possible to distinguish between human and bot behavior. Behavioral biometrics, also known as passive biometrics, is an emerging authentication method that analyzes user behavior patterns to identify and verify individuals.

- Device Fingerprinting: This involves collecting information about a device’s browser, operating system, and settings to create a unique “fingerprint” that can be used to track and block bots.

- Two-Factor Authentication (2FA): Requiring a second form of identification, typically a code sent to a user’s mobile device, can prevent malicious bots from gaining unauthorized access to accounts.

- IP Analysis and Blacklisting: Analyzing the IP addresses of incoming traffic can reveal bots, especially if multiple requests are coming from the same address in a short period. Once identified, these IPs can be blacklisted, preventing them from accessing the site.
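
To make one of these defenses concrete, here is a minimal sketch of the second factor described above: a short-lived, single-use code that would be delivered to the user's device. The `OneTimeCodeStore` class, the six-digit format, and the five-minute lifetime are assumptions for illustration, and delivery of the code (for example by SMS) is out of scope.

```python
import hashlib
import hmac
import secrets
import time
from typing import Optional


class OneTimeCodeStore:
    """In-memory store for short-lived, single-use verification codes.

    Hypothetical helper for illustration; a real service would persist codes
    and rate-limit attempts.
    """

    def __init__(self, ttl_seconds: int = 300):
        self.ttl_seconds = ttl_seconds
        self._pending = {}  # user_id -> (sha256 of code, expiry timestamp)

    @staticmethod
    def _hash(code: str) -> bytes:
        return hashlib.sha256(code.encode("utf-8")).digest()

    def issue(self, user_id: str, now: Optional[float] = None) -> str:
        """Create a random six-digit code and remember only its hash."""
        now = time.time() if now is None else now
        code = f"{secrets.randbelow(10**6):06d}"
        self._pending[user_id] = (self._hash(code), now + self.ttl_seconds)
        return code

    def verify(self, user_id: str, code: str, now: Optional[float] = None) -> bool:
        """Check a submitted code; any attempt consumes the pending code."""
        now = time.time() if now is None else now
        entry = self._pending.pop(user_id, None)
        if entry is None:
            return False
        code_hash, expires_at = entry
        if now > expires_at:
            return False
        # Constant-time comparison avoids leaking information through timing.
        return hmac.compare_digest(code_hash, self._hash(code))
```

Consuming the code on every attempt, successful or not, is a deliberately strict choice here: a bot gets one guess per issued code, which makes brute-forcing a six-digit code impractical.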

How Stytch can help

Strong, bot-resistant authentication for systems and devices is one of the most reliable ways to keep bad bots out. Stytch can help developers and organizations monitor and prevent bot attacks using both physical and API-powered device security measures.

Device fingerprinting identifies unique characteristics of a user’s device, such as the operating system, browser version, screen resolution, and even unique identifiers like IP address, helping safeguard against bot attacks and other potential breaches.
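
The general idea can be sketched in a few lines of Python: gather device attributes, serialize them in a stable order, and hash the result. This is an illustrative assumption about how a fingerprint could be derived, not a description of Stytch's implementation, and the attribute names are hypothetical.

```python
import hashlib
import json


def device_fingerprint(attributes: dict) -> str:
    """Derive a stable identifier from a set of device attributes.

    Keys are sorted so the same attributes always hash to the same value,
    regardless of the order in which they were collected.
    """
    canonical = json.dumps(attributes, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


# Hypothetical attribute names and values for illustration.
fingerprint = device_fingerprint({
    "os": "macOS 14",
    "browser": "Firefox 120",
    "screen": "1440x900",
})
print(fingerprint)
```

A plain hash like this changes completely when any single attribute changes, so real fingerprinting systems typically need fuzzier matching and many more signals to stay robust against bots that vary their settings.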

A step beyond traditional CAPTCHA, Stytch’s Strong CAPTCHA uses complex challenges that are easy for humans but difficult for bots to solve, effectively distinguishing between legitimate users and automated systems.

Step-up authentication allows for more rigorous authentication processes like additional verification steps if a user logs in from a new location or attempts to conduct a high-risk transaction. By leveraging real-time risk assessment, step-up authentication ensures that stronger security measures kick in only when they’re most needed – without adding friction or compromising on user experience.
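
A simplified way to picture that decision is a risk score compared against a threshold. In the sketch below, the signals, weights, and threshold are all hypothetical values chosen so that a new location or a high-risk transaction triggers extra verification on its own. A real risk engine would weigh far more context.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LoginContext:
    known_device: bool
    known_location: bool
    high_risk_action: bool


def risk_score(ctx: LoginContext) -> int:
    """Add up hypothetical weights for each risk signal that is present."""
    score = 0
    if not ctx.known_location:
        score += 50
    if ctx.high_risk_action:
        score += 50
    if not ctx.known_device:
        score += 30
    return score


def requires_step_up(ctx: LoginContext, threshold: int = 50) -> bool:
    """Ask for additional verification only when the score reaches the threshold."""
    return risk_score(ctx) >= threshold


routine = LoginContext(known_device=True, known_location=True, high_risk_action=False)
travelling = LoginContext(known_device=True, known_location=False, high_risk_action=False)
print(requires_step_up(routine), requires_step_up(travelling))
```

A routine login from a familiar device and location passes straight through, which is how the extra friction stays limited to the sessions that look risky.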

Stytch also offers ‘unphishable’ multi-factor authentication (MFA) that requires users to authenticate their identities using multiple factors, including something they know (like a password), something they have (like a hardware token or a registered device), and something they are (like a fingerprint or other biometric data), making it challenging for cybercriminals to gain access.

To learn more about how these or other solutions work, reach out to an auth expert to start a conversation, or get started on our platform for free today.
