AI agent authentication: securing your app for autonomous agent access

Auth & identity

Sep 29, 2025

Author: Stytch Team

More and more, people want to interact with your application through AI agents originating from other apps and tools like Claude and ChatGPT. But those interactions can introduce a lot of risks if they aren’t secure and properly authenticated. Luckily, the building blocks already exist with OAuth 2.0 and Model Context Protocol (MCP). This article explains what these standards are, why they matter, and how to use them to get AI agent authentication working securely in your app.

Where existing human-centric authentication flows break for AI agents

Users can already log in to your app by manually entering their credentials or by using a form of federated login, such as “Sign in with Google/Microsoft/Facebook.” This flow is ubiquitous and familiar: The user logs in or authorizes an app (and optionally shares data between the services), creating a long-lived session with broad privileges. This makes sense when the owner of an account is an actual person logging in and interacting with it, but it is not suitable for securing AI agents.

Human-focused authentication flows assume that once the user has logged in, most actions can proceed under that initial consent, though high-risk operations may still trigger additional checks such as step-up authentication or explicit confirmation prompts. This model works when a human is exercising judgment at each step, but it is not a safe assumption with AI agents. For example, an AI agent with free rein over a user’s CRM could misinterpret instructions and delete important data, with real-world business impacts. API keys suffer the same weaknesses: There’s no way to delegate, and managing scopes or fine-grained permissions is cumbersome.

Part of the authentication process should be the owner granting granular control over what an agent can and can’t do, with full transparency and understanding of the potential actions it can take. These need to be backed by hard guardrails set by your service that don’t rely on an AI agent’s interpretation (or misinterpretation) of prompts.
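A hard guardrail of this kind can be sketched as a server-side check that runs before any action, regardless of what the agent was prompted to do. This is a minimal illustration, not a definitive implementation: the action names, the scope strings, and the `REQUIRED_SCOPES` mapping are all hypothetical, and token verification is assumed to have happened already.

```python
from dataclasses import dataclass

# Hypothetical mapping from each action your service exposes to the scope it needs.
REQUIRED_SCOPES = {
    "read_contacts": "crm:read",
    "update_contact": "crm:write",
    "delete_contact": "crm:delete",
}


@dataclass(frozen=True)
class AgentToken:
    """Claims taken from an access token that has already been verified."""
    subject: str
    scopes: frozenset


class PermissionDenied(Exception):
    """Raised when a token does not permit the requested action."""


def authorize(token: AgentToken, action: str) -> None:
    """Raise PermissionDenied unless the token carries the scope the action needs."""
    required = REQUIRED_SCOPES.get(action)
    if required is None:
        # Deny by default: an action with no declared scope is never callable.
        raise PermissionDenied(f"unknown action: {action}")
    if required not in token.scopes:
        raise PermissionDenied(f"{action} requires scope {required}")


token = AgentToken(subject="agent-123", scopes=frozenset({"crm:read"}))
authorize(token, "read_contacts")  # permitted, returns None
try:
    authorize(token, "delete_contact")
except PermissionDenied as exc:
    print(exc)
```

Because the check depends only on the granted scopes, a misinterpreted or injected prompt cannot widen what the agent is able to do.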

OAuth is ready to authenticate AI agents, and MCP helps them find what they need

This doesn’t mean that existing authentication technologies aren’t up to the security challenges AI poses — it’s just that AI agent authentication requires new flows that recognize the differences between AI and human users. While many OAuth and OIDC flows are designed around a human authenticating via a web browser, the protocols themselves also support non-interactive, machine-to-machine scenarios.

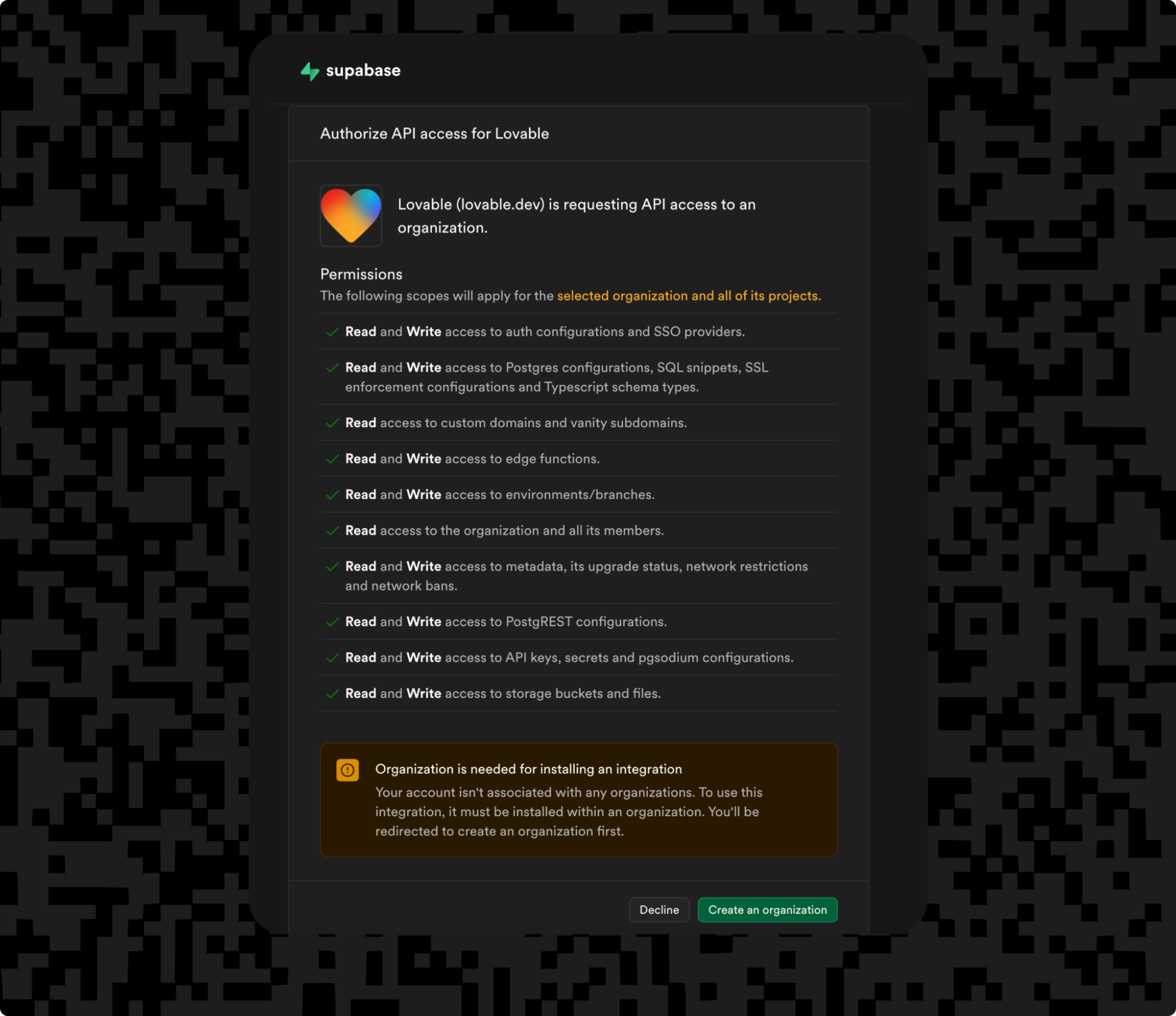

The OAuth 2.1 draft specification consolidates OAuth 2.0 security best practices (including mandatory PKCE and stronger refresh token requirements), which makes it well suited to AI agent authentication that requires short-lived, context-bound, and dynamically provisioned credentials. This is demonstrated in the example below, where Lovable and its AI agent request access to Supabase on behalf of a user. Before the agent can access data, the user is shown a consent screen to grant it different scoped permissions.

With OAuth 2.1 now more than capable of securing AI agents (in fact, we think it’s perfect for the job), the problem becomes how to best communicate to AI agents what functionality is available for them to use within your app or service.

This is exactly where MCP comes in. It provides a standardized way for agents to discover and interact with multiple services. Because it’s an open standard that incorporates OAuth, implementing an MCP server means any MCP-compatible AI agent can securely authenticate with your app. The agent receives scoped permissions using OAuth and then finds out exactly what it can do with those permissions using MCP. Users can then interact with their agent, setting it to tasks, confident that they are fully aware of its scope of work and able to receive feedback from it based on what they’re asking and what functionality it can perform.

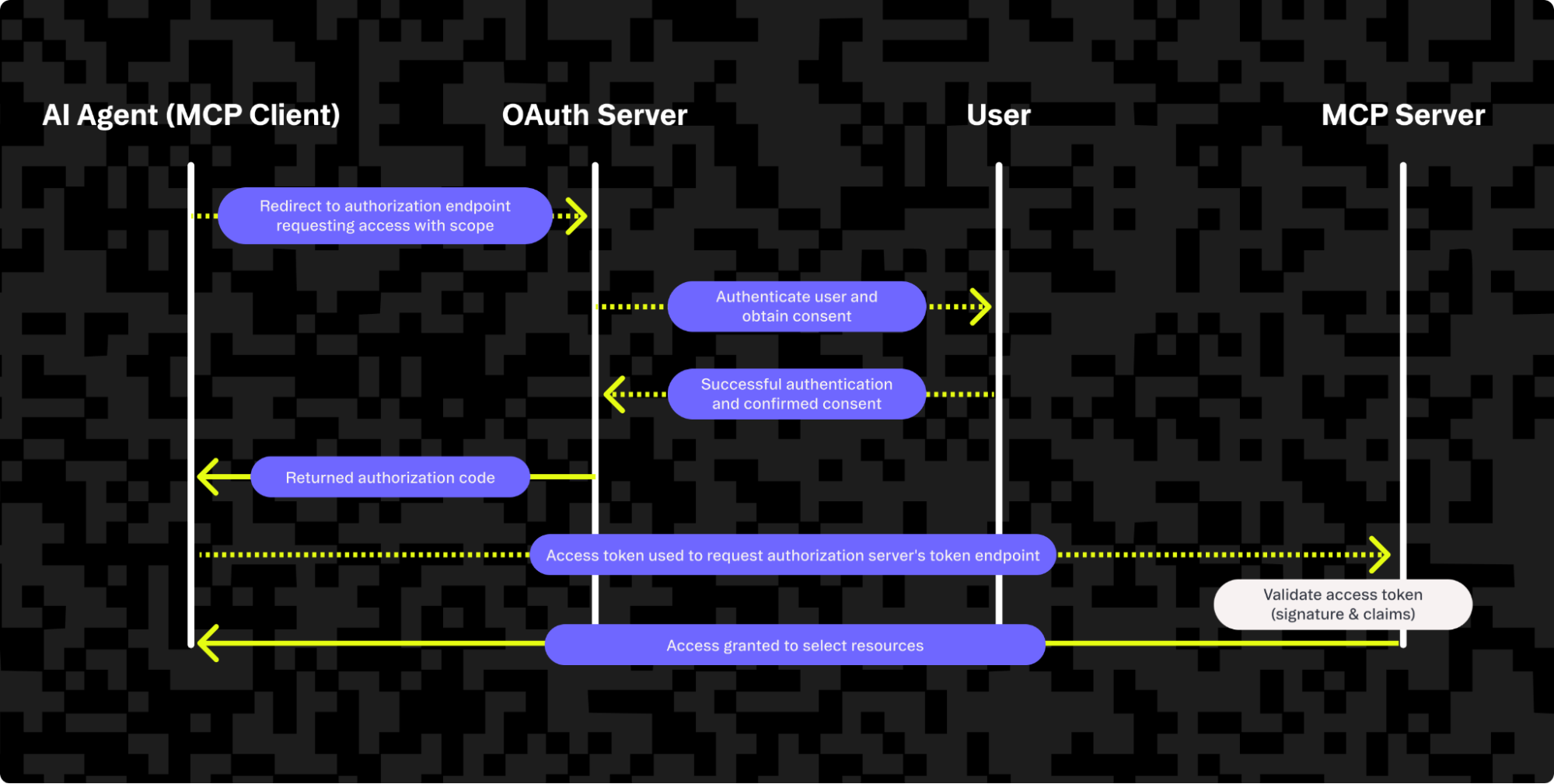

At a high level, MCP works as follows:

- Service discovery: The AI agent locates an MCP server endpoint that describes what functionality (tools, methods) is available.

- Authentication & authorization (via OAuth 2.1):

  - The agent registers or identifies itself using client registration or client metadata (Dynamic Client Registration, DCR, or more recently Client ID Metadata Documents, CIMD).

  - The user is redirected to the OAuth authorization endpoint, authenticates, and grants consent for requested scopes.

  - The agent receives an authorization code, then exchanges it for an access token (and optional refresh token).

- Capability discovery & invocation: Using the access token, the agent queries the MCP server to retrieve definitions of available capabilities (methods, inputs/outputs), then invokes them as allowed by the granted permissions.
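The agent's side of the authorization step can be sketched with the standard library alone. This is a rough illustration under stated assumptions: the endpoint URL, client ID, redirect URI, and scope name are placeholders, and the actual HTTP requests are omitted. The PKCE derivation follows the S256 method from RFC 7636.

```python
import base64
import hashlib
import secrets
from urllib.parse import urlencode


def new_code_verifier() -> str:
    """Return a high-entropy, URL-safe verifier (43 characters from 32 random bytes)."""
    return secrets.token_urlsafe(32)


def code_challenge_for(verifier: str) -> str:
    """Derive the S256 challenge: BASE64URL(SHA256(verifier)) without padding."""
    digest = hashlib.sha256(verifier.encode("ascii")).digest()
    return base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")


def authorization_url(endpoint, client_id, redirect_uri, scopes, state, challenge) -> str:
    """Build the URL the user is sent to in order to authenticate and consent."""
    params = {
        "response_type": "code",
        "client_id": client_id,
        "redirect_uri": redirect_uri,
        "scope": " ".join(scopes),
        "state": state,
        "code_challenge": challenge,
        "code_challenge_method": "S256",
    }
    return f"{endpoint}?{urlencode(params)}"


def token_request_body(code, verifier, client_id, redirect_uri) -> dict:
    """Form fields the agent posts to the token endpoint to redeem the code."""
    return {
        "grant_type": "authorization_code",
        "code": code,
        "redirect_uri": redirect_uri,
        "client_id": client_id,
        "code_verifier": verifier,  # proves this client started the flow
    }


# Placeholder values for illustration only.
verifier = new_code_verifier()
print(authorization_url(
    "https://auth.example.com/oauth2/authorize",
    "agent-client-id",
    "http://localhost:8765/callback",
    ["posts:write"],
    secrets.token_urlsafe(16),
    code_challenge_for(verifier),
))
```

The verifier never leaves the agent until the token request, so an intercepted authorization code is useless without it.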

In practice, MCP relies on OAuth 2.1 for authentication and authorization. The diagram below shows the standard OAuth authorization code flow that secures MCP connections and keeps AI agents from accessing resources they aren’t approved for.

The MCP server returns a list of tool definitions: standardized, machine-readable descriptions of the functions available to the agent. Tool definitions are a significant enabling factor for AI agents because they give you a standardized way to exchange information about what an agent can do on your platform. For example, the MCP tool definition below describes a function that an AI agent can use to create a blog post on the user’s behalf:

```json
{
  "name": "create_blog_post",
  "description": "Create a blog post for the user.",
  "inputSchema": {
    "type": "object",
    "properties": {
      "title": { "type": "string", "maxLength": 80 },
      "body": { "type": "string" },
      "status": { "type": "string", "enum": ["draft", "published"], "default": "draft" },
      "publish_date": {
        "type": "string",
        "format": "date-time",
        "description": "ISO 8601 timestamp; if it is in the future, schedule publishing."
      }
    },
    "required": ["title", "body"]
  }
}
```

This not only helps the AI agent understand what it can do (including providing context and validation requirements) but also helps your app process requests from the agent using consistent names and inputs. This is part of building AI-friendly APIs that will be ready for future AI scenarios as these technologies continue to advance.
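On the receiving side, the same schema can drive validation of whatever arguments the agent sends. The sketch below is a deliberately small, hand-written checker that covers only the keywords used in the `create_blog_post` schema; a production service would more likely rely on a full JSON Schema validator. Rejecting unexpected fields is an extra assumption made here for strictness, not something the schema itself requires.

```python
from datetime import datetime

# The JSON Schema portion of the create_blog_post definition above.
SCHEMA = {
    "type": "object",
    "properties": {
        "title": {"type": "string", "maxLength": 80},
        "body": {"type": "string"},
        "status": {"type": "string", "enum": ["draft", "published"], "default": "draft"},
        "publish_date": {"type": "string", "format": "date-time"},
    },
    "required": ["title", "body"],
}


def validate_arguments(schema: dict, args: dict) -> dict:
    """Check args against the schema subset used here and apply defaults.

    Raises ValueError on the first problem found.
    """
    props = schema["properties"]
    for name in schema.get("required", []):
        if name not in args:
            raise ValueError(f"missing required field: {name}")

    result = {}
    for name, value in args.items():
        if name not in props:
            raise ValueError(f"unexpected field: {name}")
        rule = props[name]
        if rule["type"] == "string" and not isinstance(value, str):
            raise ValueError(f"{name} must be a string")
        if "maxLength" in rule and len(value) > rule["maxLength"]:
            raise ValueError(f"{name} exceeds {rule['maxLength']} characters")
        if "enum" in rule and value not in rule["enum"]:
            raise ValueError(f"{name} must be one of {rule['enum']}")
        if rule.get("format") == "date-time":
            try:
                # Loose ISO 8601 check; a trailing "Z" is treated as UTC.
                datetime.fromisoformat(value.replace("Z", "+00:00"))
            except ValueError:
                raise ValueError(f"{name} must be an ISO 8601 timestamp") from None
        result[name] = value

    # Fill in defaults for optional fields the agent left out.
    for name, rule in props.items():
        if name not in result and "default" in rule:
            result[name] = rule["default"]
    return result


print(validate_arguments(SCHEMA, {"title": "Hello", "body": "First post"}))
```

Validating against the published schema means the agent and your app agree on one contract, and malformed calls fail before they reach business logic.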

You can see how OAuth and MCP work together in more detail with this real-world example, including a live demo, and see the process for securing AI agents in more detail, with code examples, in our MCP authentication and authorization implementation guide.

What you need to implement AI agent authentication

There are different authentication flows for different AI agent use cases, supported by technologies for extending OAuth:

- Authorization code + PKCE: A basic OAuth 2.0 flow that allows users to delegate access to an AI agent. The authorization code flow is required for MCP authentication, and PKCE is mandatory in OAuth 2.1.

- Client Credentials Grant: A basic OAuth 2.0 flow that allows an AI agent to authenticate as itself, rather than on behalf of a user. This is useful for machine-to-machine auth but isn’t needed for MCP.

- On-behalf-of (OBO) token exchange: The gold standard for AI agent authentication, this flow builds on the authorization code + PKCE flow and allows for chained delegation across different systems (for example, making it possible for a company to give an app permission to act on behalf of an employee without getting their consent each time).

- Rich Authorization Requests (RAR): An OAuth extension that allows agents to have very fine-grained permissions. So, instead of a broad scope like “purchase flights,” an agent might be given permission to “purchase flights from Chicago to Beijing on a particular day with a spending limit of $800.”

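- Client-Initiated Backchannel Authentication (CIBA): An OpenID Connect specification for decoupled authentication. Your app asks the authorization server over a back channel to authenticate the user, who approves the request on their own device, so the flow stays within your app and avoids the redirects that come with a standard OAuth flow.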

If you’re using MCP alongside OAuth, extensions like RAR and OBO can provide additional flexibility beyond basic authorization code flows, especially when agents need very fine-grained or multi-hop delegation.
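To make the RAR idea concrete, here is a hedged sketch of how the flight example might be expressed and enforced. In RAR, permissions travel as an `authorization_details` array whose entries must carry a `type`; every other field below (`origin`, `destination`, `date`, `max_amount`), along with the airport codes and date, is a hypothetical choice made for illustration.

```python
from dataclasses import dataclass
from decimal import Decimal

# Hypothetical authorization_details granted to the agent. Only "type" is
# required by RAR; the remaining fields are defined by the API that uses them.
GRANTED_DETAILS = [
    {
        "type": "flight_purchase",
        "actions": ["purchase"],
        "origin": "ORD",
        "destination": "PEK",
        "date": "2026-03-14",
        "max_amount": {"currency": "USD", "value": "800.00"},
    }
]


@dataclass(frozen=True)
class PurchaseRequest:
    origin: str
    destination: str
    date: str
    currency: str
    price: Decimal


def is_permitted(request: PurchaseRequest, details: list) -> bool:
    """Return True only if some granted entry covers every part of the request."""
    for entry in details:
        if entry.get("type") != "flight_purchase":
            continue
        if "purchase" not in entry.get("actions", []):
            continue
        limit = entry["max_amount"]
        if (
            request.origin == entry["origin"]
            and request.destination == entry["destination"]
            and request.date == entry["date"]
            and request.currency == limit["currency"]
            and request.price <= Decimal(limit["value"])
        ):
            return True
    return False


within_budget = PurchaseRequest("ORD", "PEK", "2026-03-14", "USD", Decimal("749.00"))
too_expensive = PurchaseRequest("ORD", "PEK", "2026-03-14", "USD", Decimal("950.00"))
print(is_permitted(within_budget, GRANTED_DETAILS))
print(is_permitted(too_expensive, GRANTED_DETAILS))
```

Compared with a single broad scope string, the structured entry lets the resource server enforce the route, date, and spending limit itself.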

In practice, implementing these agent authentication flows means your app needs to act as an OIDC-compliant OAuth 2.1 Authorization Server — a role that requires you to handle everything from consent to token issuance to fine-grained scope enforcement. Implementing this by hand is complex and requires manually coding and maintaining components such as:

- Token lifecycle management, including automatic rotation patterns and short-lived credentials for agents

- Interfaces for granting fine-grained permissions to AI agents, as well as underlying permissions models

- Scope-based restrictions and boundaries in the functions that AI agents can call

- Certificate-based authentication with mutual TLS for agents

- The ability to immediately revoke tokens

- The ability for enterprises to set policies (for example, as part of OBO to grant access on behalf of their employees)

- An MCP implementation with protected resource metadata discovery, as well as resource indicators from clients
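The last item can be sketched briefly. Protected resource metadata (RFC 9728) is a small JSON document that tells clients which authorization servers protect a resource, and an unauthenticated request is answered with a `WWW-Authenticate` challenge pointing at that document. The host names and scope below are placeholders, and the audience check is a simplified stand-in for full token validation.

```python
import json

# Placeholder identifiers for illustration only.
RESOURCE = "https://mcp.example.com"
METADATA_URL = "https://mcp.example.com/.well-known/oauth-protected-resource"


def protected_resource_metadata(resource: str, authorization_servers: list[str], scopes: list[str]) -> dict:
    """Build the document served at the well-known metadata URL."""
    return {
        "resource": resource,
        "authorization_servers": authorization_servers,
        "scopes_supported": scopes,
        "bearer_methods_supported": ["header"],
    }


def unauthorized_headers(metadata_url: str) -> dict:
    """Headers for a 401 response that tell the client where to find the metadata."""
    return {"WWW-Authenticate": f'Bearer resource_metadata="{metadata_url}"'}


def token_is_for_resource(aud, resource: str) -> bool:
    """Check that the token's audience names this server.

    Clients request tokens for a specific resource using a resource indicator;
    rejecting tokens issued for a different audience stops them being replayed here.
    """
    audiences = [aud] if isinstance(aud, str) else list(aud or [])
    return resource in audiences


metadata = protected_resource_metadata(RESOURCE, ["https://auth.example.com"], ["posts:write"])
print(json.dumps(metadata, indent=2))
print(unauthorized_headers(METADATA_URL))
```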

OAuth/MCP best practices have to be followed at every step, including zero-trust for users and AI agents and using cryptographically sender-constrained tokens or single-use refresh tokens.
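Single-use refresh tokens are usually paired with reuse detection: if a refresh token is presented twice, the whole grant is treated as compromised and revoked. The following in-memory sketch shows the idea only. The class and method names are hypothetical, tokens are opaque random strings, and a real server would persist this state and check revocation when access tokens are used.

```python
import secrets
import time


class RefreshTokenStore:
    """In-memory sketch of refresh token rotation with reuse detection."""

    def __init__(self, access_ttl_seconds: int = 300):
        self.access_ttl = access_ttl_seconds
        self._tokens = {}  # refresh token -> {"family": str, "used": bool}
        self._revoked_families = set()

    def issue(self, family=None) -> dict:
        """Issue a short-lived access token plus a single-use refresh token."""
        family = family or secrets.token_hex(8)
        refresh = secrets.token_urlsafe(32)
        self._tokens[refresh] = {"family": family, "used": False}
        return {
            "access_token": secrets.token_urlsafe(32),
            "expires_at": time.time() + self.access_ttl,
            "refresh_token": refresh,
            "family": family,
        }

    def rotate(self, refresh_token: str) -> dict:
        """Exchange a refresh token for a new pair, invalidating the old one."""
        record = self._tokens.get(refresh_token)
        if record is None or record["family"] in self._revoked_families:
            raise PermissionError("invalid or revoked refresh token")
        if record["used"]:
            # A replayed token suggests theft, so revoke every token in the family.
            self._revoked_families.add(record["family"])
            raise PermissionError("refresh token reuse detected; grant revoked")
        record["used"] = True
        return self.issue(family=record["family"])

    def revoke(self, family: str) -> None:
        """Immediately revoke a grant, for example when the user disconnects an agent."""
        self._revoked_families.add(family)


store = RefreshTokenStore()
first = store.issue()
second = store.rotate(first["refresh_token"])
try:
    store.rotate(first["refresh_token"])  # replay of an already-used token
except PermissionError as exc:
    print(exc)
```

After the replay, the token issued in `second` is rejected as well, because both belong to the same revoked family.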

In production, you also need to deploy and monitor all of this, watching for suspicious behavior and verifying compliance across tenants, users, and agents. Combined with the complexities listed above, this is leading many development teams to shift to fully managed authentication services that handle these requirements and provide APIs and SDKs that replace complex code with simple calls.

Updating your authentication to handle AI agents is an ideal time to harden your application’s authentication and make it more flexible by enabling human, AI agent, and machine-to-machine authentication, just as Supabase and Crossmint have.

Security and compliance don’t end once an AI agent has authenticated

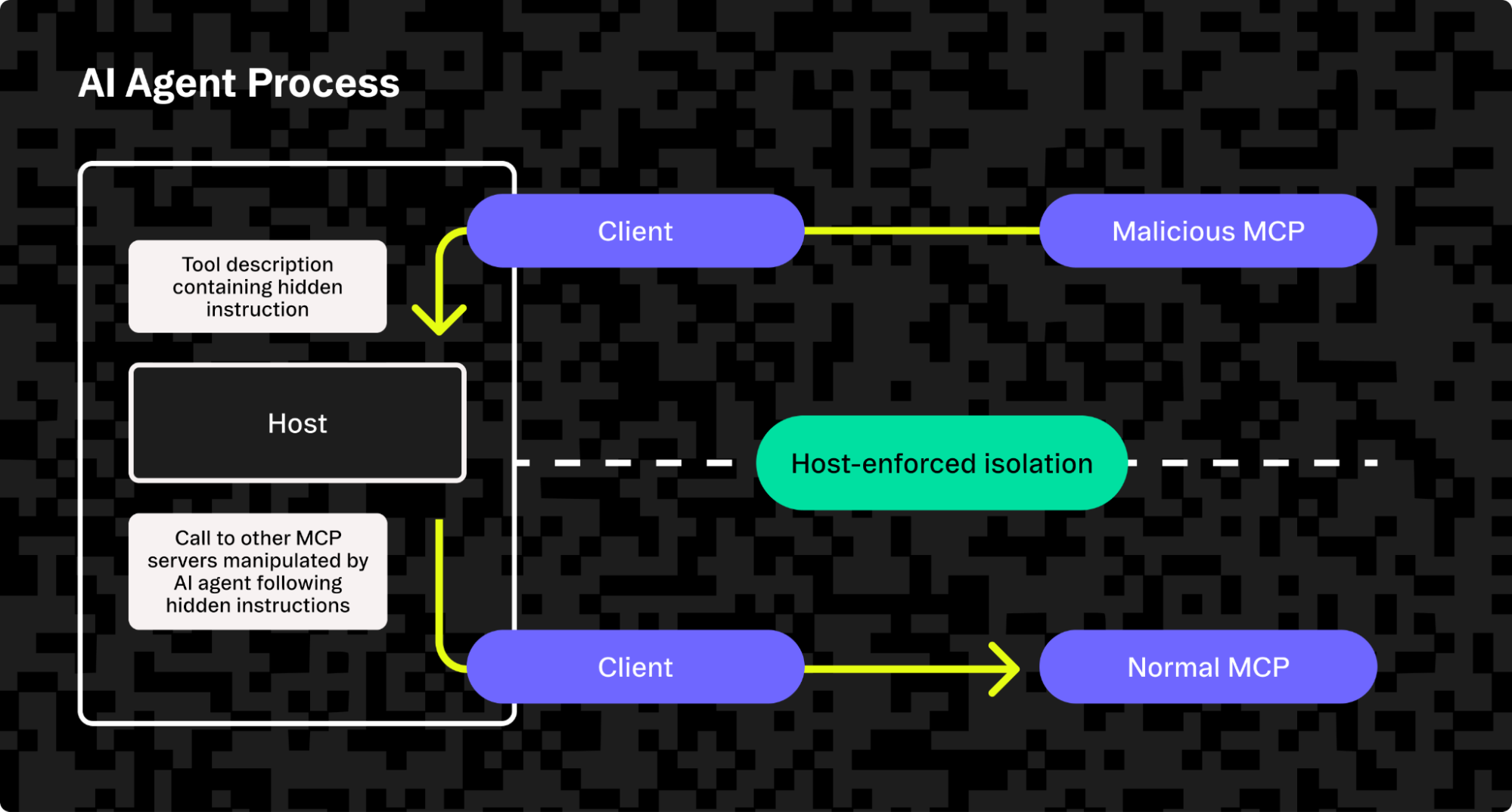

OAuth secures AI agent authentication, and MCP provides a secure, standardized way for agents to discover and call tools. However, it’s still up to you to implement rigid guardrails in your code so that agents can only do what the user agreed to during the authorization process. Prompt injection and MCP tool attacks are well-known AI-specific exploits, but your code also needs to protect against less-publicized attacks, such as token theft, agent impersonation, and context poisoning.

While it solves many problems, MCP also presents its own vulnerabilities that are inherent in its purpose, including “line jumping,” or prompt injection via tool descriptions, where hidden instructions are added to tool definition responses by a malicious MCP server in an attempt to break host-enforced isolation of different connected tools.

Many users are also wary of giving over full control of important information and tasks to automated tools. Human-in-the-loop (HITL) workflows and asynchronous authorization can be implemented for sensitive operations to prevent AI from autonomously interacting with critical systems without oversight. Escalation procedures can also be established for certain tasks, limiting what actions an AI agent can take without approval while allowing it to operate independently on less valuable systems or where its behavior is battle tested.
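A human-in-the-loop gate can be sketched as a wrapper around tool invocation: sensitive tools return an approval request instead of running, and only execute once a human has approved that exact call. Everything named here (`SENSITIVE_TOOLS`, `ApprovalQueue`, `invoke`, the tool names) is hypothetical, and how the approver is notified is left out.

```python
import uuid
from enum import Enum


class Decision(Enum):
    PENDING = "pending"
    APPROVED = "approved"
    DENIED = "denied"


# Hypothetical set of tools that must never run without human sign-off.
SENSITIVE_TOOLS = {"delete_contact"}


class ApprovalQueue:
    """Holds pending approval requests until a human decides on them."""

    def __init__(self):
        self._requests = {}

    def submit(self, tool: str, args: dict) -> str:
        request_id = str(uuid.uuid4())
        self._requests[request_id] = {"tool": tool, "args": dict(args), "decision": Decision.PENDING}
        return request_id

    def decide(self, request_id: str, approved: bool) -> None:
        decision = Decision.APPROVED if approved else Decision.DENIED
        self._requests[request_id]["decision"] = decision

    def consume_if_approved(self, request_id: str, tool: str, args: dict) -> bool:
        """Return True once for an approved request that matches this exact call."""
        request = self._requests.get(request_id)
        if request is None or request["tool"] != tool or request["args"] != args:
            return False
        if request["decision"] is not Decision.APPROVED:
            return False
        del self._requests[request_id]  # approvals are single-use
        return True


def invoke(tool: str, args: dict, handlers: dict, queue: ApprovalQueue, approval_id=None) -> dict:
    """Run a tool, pausing for human approval when the tool is sensitive."""
    if tool in SENSITIVE_TOOLS:
        if approval_id is None:
            return {"status": "approval_required", "approval_id": queue.submit(tool, args)}
        if not queue.consume_if_approved(approval_id, tool, args):
            return {"status": "not_approved"}
    return {"status": "ok", "result": handlers[tool](**args)}
```

Binding the approval to the tool name and arguments means an agent cannot obtain approval for one call and then reuse it for a different one.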

AI agents also present a data privacy concern. For compliance and security purposes, they should be limited by the same data access controls that a real user would be limited by and given access only to the data they require for a specific task, especially during retrieval-augmented generation (RAG). All data access and activities should be monitored and logged for auditing purposes so that malicious activity can be detected and you can make sure that AI agents are using the resources you give them responsibly.

Use Stytch to secure AI agent access to your app

With Stytch, you can secure AI agents with full-featured authentication and authorization that integrates with your existing auth stack using Connected Apps. Or, if you prefer a single, future-proof provider, Stytch can serve as your complete customer identity platform for humans, organizations, and agents.

Once you’ve integrated Stytch with your app, users can log in with their existing accounts or by using SSO and can then grant their AI tools access to your application. Stytch handles discovery, consent, and ongoing monitoring of activity to identify misbehaving or malicious AI, allowing you to take action and maintain control. Stytch can also detect AI agents as they arrive so that they can be directed to the right endpoints (like your MCP servers) using isAgent.

In addition to abstracting the complexities and potential security pitfalls of authentication, Stytch also includes battle-tested bad bot detection and tools for detecting AI agent use and abuse.

You can get up and running with Stytch and start securely connecting AI agents to your app in just three steps: Sign up for Stytch and create your organization and project, add the Stytch identity provider component to your authorization page, and then set up and configure Connected Apps in your Stytch Dashboard.

Get started now for free or chat with a Stytch team member.

© 2025 Stytch. All rights reserved.