Stytch events with Orb: securing and pricing AI products

As AI takes off, there’s a lot to be excited about. Incorporating AI is already allowing companies of all sizes to delight users, make their products stickier, and generate new revenue streams.

However, as companies start to productionize AI, they also need to ensure their products are priced in a way that still allows for viable unit economics, and are secure against a quickly evolving threat landscape.

In our recent fireside chat, Stytch co-founders Julianna Lamb and Reed McGinley-Stempel sat down with Alvaro Morales, the CEO and co-founder of usage-based billing company Orb, to discuss some of the pricing and security challenges that companies need to consider when incorporating AI into their products.

We’ve highlighted a few of our favorite takeaways from the discussion.

1. In the long run, you’ll need pricing that scales with your costs.

“If you look at OpenAI, they charge ~$0.0004 per thousand API tokens, whereas Notion adds an $8 per member per month add-on to leverage all of their AI capabilities. In the long run, OpenAI’s pricing is better positioned to account for an increase of costs that come with scale, whereas Notion’s flat fee could leave them vulnerable to decreasing margins over time.”

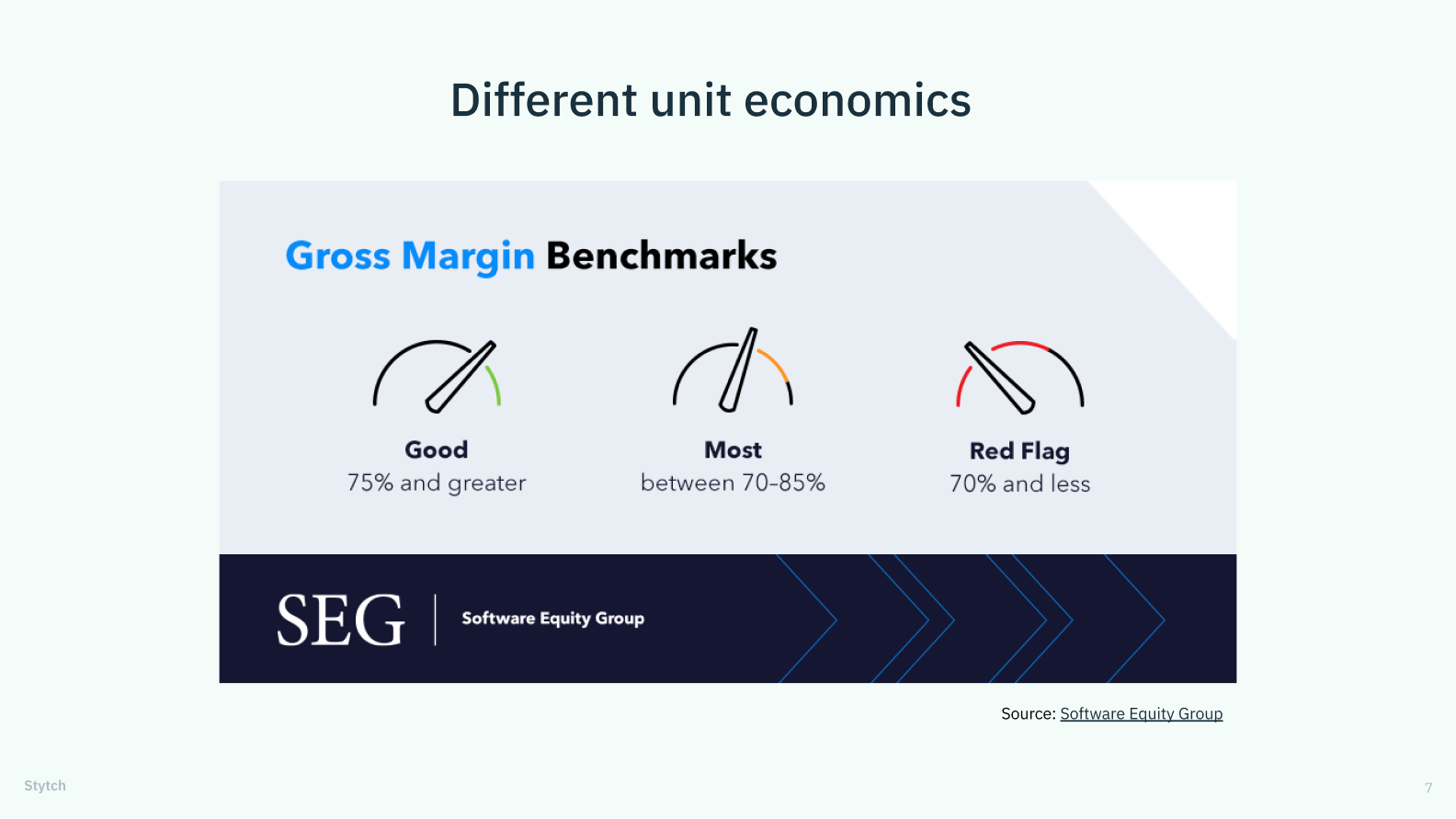

If we look at typical industry benchmarks, most application-level software companies are expected to have gross margins of around 80 to 85%. Most infrastructure-level companies hover at gross margins of around 70% or above.

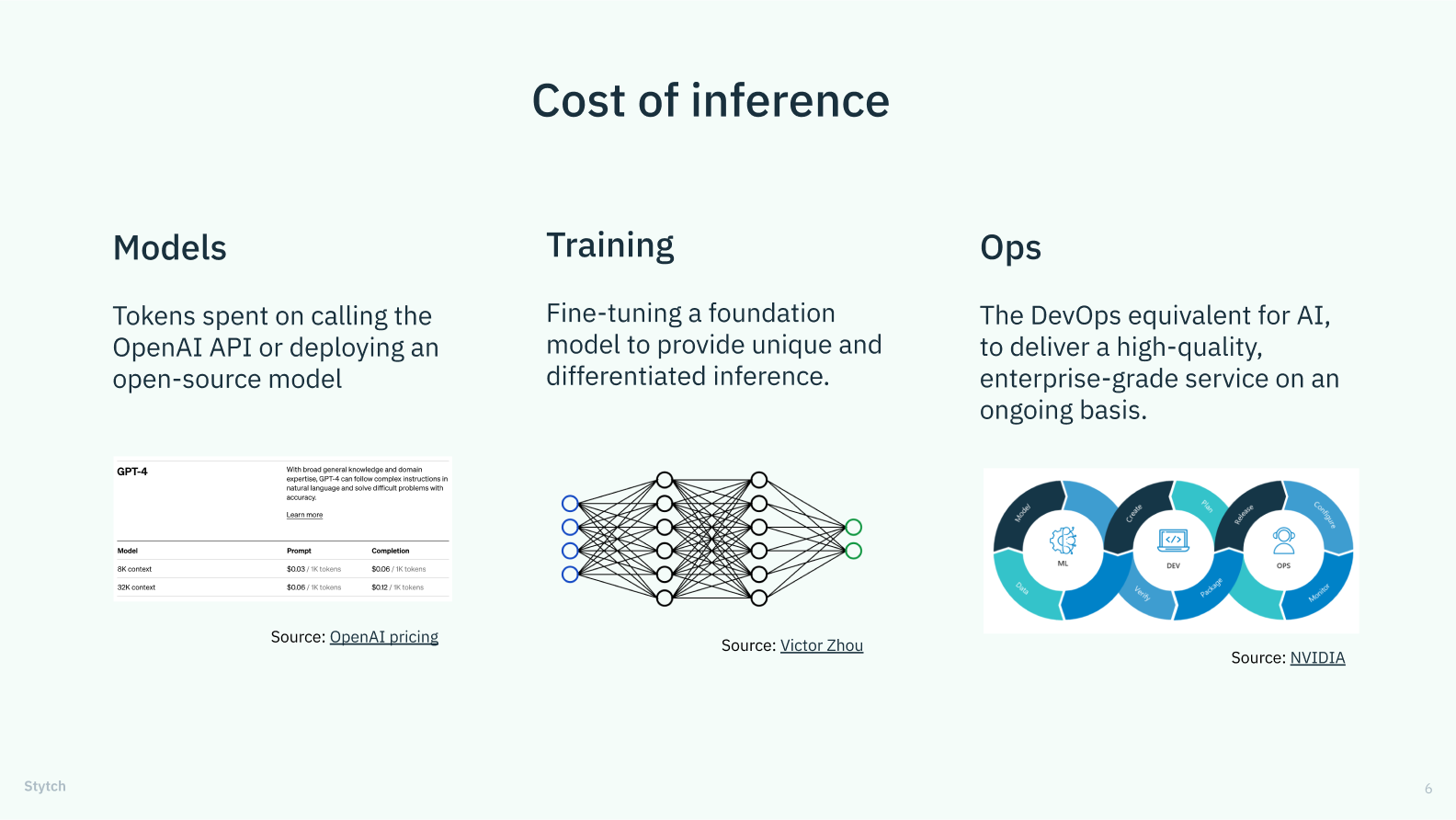

This makes sense if you're an application-level software company that can take advantage of capabilities like multi-tenancy: the marginal cost of delivering software is generally quite low. But AI has a rather unique cost structure that adds a few dimensions to cost:

- Models: First, there’s a cost that comes from the foundational models that you're leveraging to power these capabilities. This could be calls to the API where you're being charged on a token basis, or it could be the hosting cost of deploying an open source model.

- Training: AI companies also need to consider the cost of training and fine-tuning their models. If you’re taking a powerful foundation model and tailoring it to your individual use case, that requires significant compute.

- Operations: This is the DevOps analog in the AI space – something you might call “ML Ops” or “AI Ops.” AI companies need these operations to deliver an enterprise-grade, production-ready service over time, but it certainly doesn’t come free.

Given these three cost factors, the more widely an AI feature is adopted, the more it will erode a company’s margins if pricing doesn’t scale with usage.
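To make the margin argument concrete, here is a minimal sketch in Python. It reuses the two figures quoted above ($0.0004 per thousand tokens as the model cost, and an $8 flat monthly fee) and compares that flat fee against usage-based pricing. The 5x markup and the usage levels are hypothetical, and the sketch ignores training and operations costs, so treat it as an illustration rather than a model of any real company's economics.

```python
# Illustrative only: compares gross margin under a flat fee vs. usage-based pricing.
COST_PER_1K_TOKENS = 0.0004  # model cost, taken from the figure quoted above
FLAT_FEE = 8.00              # flat monthly fee per member, from the quote above
USAGE_MARKUP = 5.0           # hypothetical: price charged = 5x model cost


def gross_margin(revenue: float, cost: float) -> float:
    """Gross margin as a fraction of revenue."""
    return (revenue - cost) / revenue


def model_cost(tokens_per_month: int) -> float:
    """Model cost for one member's monthly token usage."""
    return tokens_per_month / 1000 * COST_PER_1K_TOKENS


def flat_fee_margin(tokens_per_month: int) -> float:
    """Margin when revenue is fixed and cost grows with usage."""
    return gross_margin(FLAT_FEE, model_cost(tokens_per_month))


def usage_based_margin(tokens_per_month: int) -> float:
    """Margin when revenue is a fixed markup on cost (requires usage > 0)."""
    cost = model_cost(tokens_per_month)
    return gross_margin(cost * USAGE_MARKUP, cost)


if __name__ == "__main__":
    # Hypothetical monthly usage levels for a single member.
    for tokens in (1_000_000, 10_000_000, 20_000_000, 40_000_000):
        print(tokens, flat_fee_margin(tokens), usage_based_margin(tokens))
```

Under these assumptions, the flat fee's margin falls from roughly 95% at one million tokens per month to about zero at 20 million and goes negative beyond that, while the usage-based margin stays near 80% at every usage level.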

2. In the short term, don’t be afraid to experiment with pricing.

“It's a common pitfall in software that companies delay pricing changes for a long time. This often leads to a massive, painful change management effort to raise prices to catch up with the value being delivered. Instead, I believe it's more effective to make pricing an iterative and ongoing exercise. As you add new AI capabilities and the value of your product changes rapidly, you might want to start experimenting more.”

There can be a lot of anxiety around how to approach pricing in the new AI landscape. But we think a lot of this anxiety can be reduced if companies approach this initial phase with an attitude of experimentation, learning, and iteration.

If we look back to the Notion example, it makes sense that they’ve started with a relatively simple pricing model – the more interesting question is how they’ll evolve it. In this initial phase, they can learn a lot about the value they can derive from adding new AI capabilities. Based on customer engagement, they can examine how AI features impact customer retention, churn, and lifetime value, and experiment with the improvements and features that will make their AI features stickier.

To get to the heart of these questions, it makes a lot of sense to start with pricing models that get customers on board as quickly as possible. The first six months to a year after a company introduces new AI features are not necessarily the best time to optimize for margins. Instead, it’s the time to study and learn: as companies gather data, they should not shy away from iterating on their pricing alongside the product.

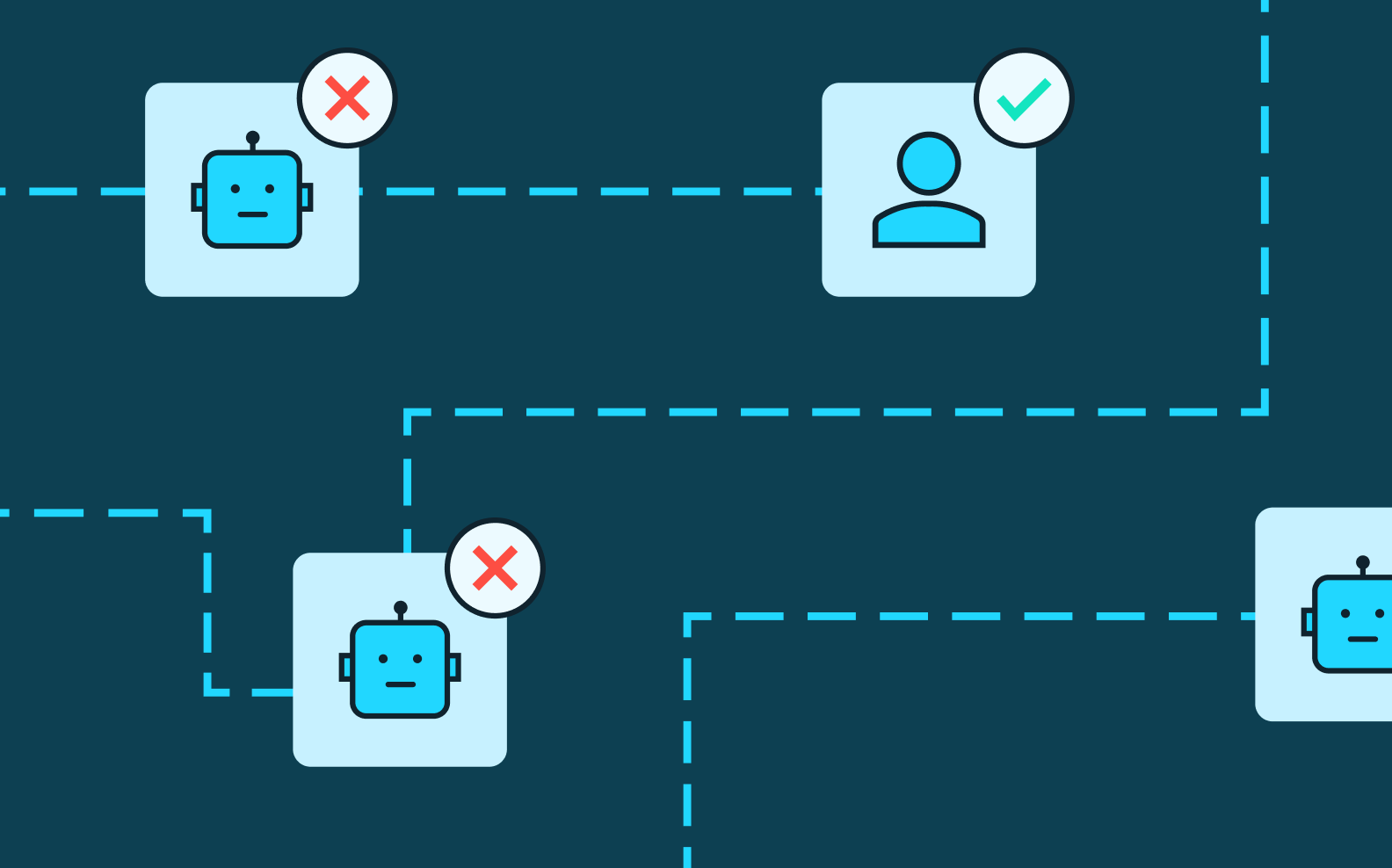

3. Be aware of new attack surfaces introduced by adding AI features.

“Similar to how AI companies need to be aware of how their pricing models are changing, they also need to be monitoring their API usage. This will help them to catch reverse engineering attacks early and, ideally, be prepared to put something like device fingerprinting in place to block bot attacks without dissuading legitimate users.”

Adding AI certainly opens up new potential revenue streams, but it also adds new attack surfaces to your products.

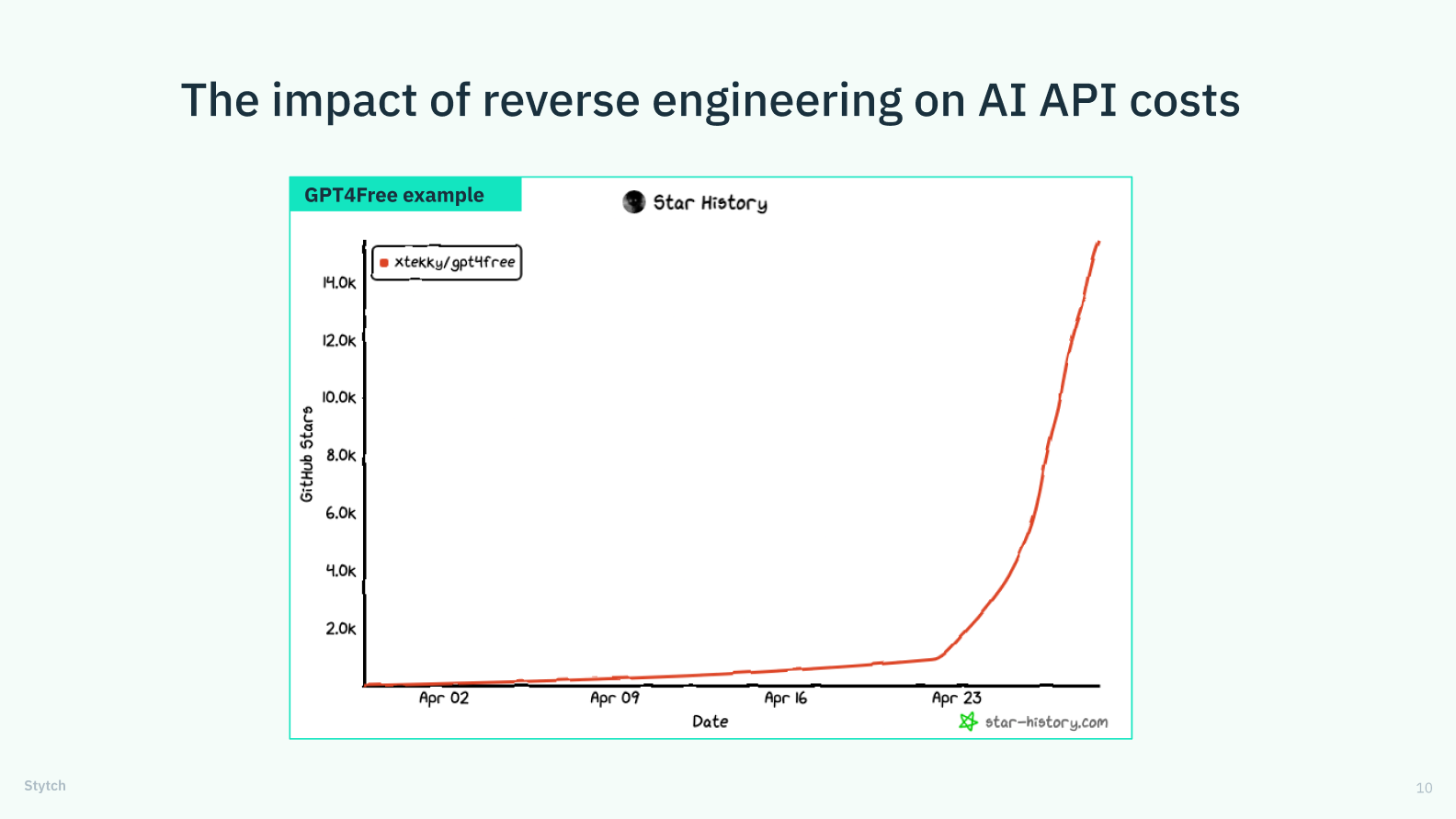

One major risk is the threat of reverse engineering. For example, when working with customers like You.com and Hex, Stytch discovered the surprising economic incentives to reverse engineer apps that incorporate AI functionality.

One example of this in action is GPT for Free, an open-source project on GitHub. It has gained over 30,000 stars, and it focuses on reverse engineering sites that offer AI capabilities.

The motivation behind this attack is to fool the site into thinking that a bot is a real human user, thereby exploiting the valuable AI feature without paying for it. The incentive is strong because AI output is expensive to serve but relatively fungible: attackers can get free API usage for themselves or monetize the endpoints by reselling access.

However, the most interesting attacks are carried out on sites not listed by GPT for Free, because they’re performed by individuals who haven't open-sourced their methods. For instance, one of Stytch’s clients recently discovered that 20% of their traffic, consisting of millions of fingerprints per day, was from headless browser automation.

Even bot mitigation tactics like CAPTCHAs are easy to bypass with tools like anti-captcha.com. For attackers, it’s worth it to pay a small fee to get past a CAPTCHA, because they can extract significant value from reverse engineering AI APIs.

This threat is quite technical, and it is often overlooked when organizations focus on product features and use cases at the expense of the security risks that come with exposing AI resources. Thankfully, there are tools that can help, like Device Fingerprinting.
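As a rough illustration of the usage monitoring discussed in this section, here is a minimal Python sketch of a sliding-window counter keyed by device fingerprint. It is not Stytch's Device Fingerprinting API: the `UsageMonitor` class, its thresholds, and the fingerprint strings are all hypothetical, and we assume some upstream tool has already assigned each request an opaque fingerprint ID.

```python
from collections import defaultdict, deque


class UsageMonitor:
    """Flags fingerprints that exceed a request budget within a time window.

    Hypothetical helper for illustration; thresholds would need tuning
    against real traffic.
    """

    def __init__(self, max_requests: int, window_seconds: float) -> None:
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        # fingerprint -> timestamps of recent requests, oldest first
        self._hits: dict[str, deque[float]] = defaultdict(deque)

    def record(self, fingerprint: str, timestamp: float) -> bool:
        """Record one request; return True if the fingerprint is over budget."""
        hits = self._hits[fingerprint]
        hits.append(timestamp)
        # Drop requests that have aged out of the window.
        while hits and hits[0] <= timestamp - self.window_seconds:
            hits.popleft()
        return len(hits) > self.max_requests


if __name__ == "__main__":
    monitor = UsageMonitor(max_requests=3, window_seconds=60)
    for second in range(5):
        # Timestamps are passed in explicitly to keep the example deterministic.
        print(second, monitor.record("fp-headless-123", float(second)))
```

A flagged fingerprint could then be challenged, throttled, or blocked, while legitimate users with ordinary request rates never see extra friction.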

4. Plan for the future security implications of autonomous AI.

“While [AI agents] may not necessarily pose nefarious risks to users today, they do challenge how we think about how apps need to serve customers. What if the user is separated from the browser, and we now have autonomous agents acting on our behalf? This shift introduces new questions about serving users, access controls, and handling sensitive data.”

Another trend to watch is the rise of autonomous AI agents, like the project AutoGPT. Unlike with ChatGPT, where you're always in the loop, AutoGPT allows the AI to prompt itself. This means that, given a broad directive, the AI agent can carry out tasks independently.

This new kind of AI potentially overhauls everything we’ve come to take for granted about user/computer interactions: what things should an AI agent be allowed to do on our behalf? How does that change depending on the service? Sure, you might let an AI agent book the best flights for you based on your schedule, but would you let it, say, refinance your mortgage? More importantly, how do those permissions get set and managed?

Today, we have role-based access control, or RBAC, which grants each user permissions and actions through the roles assigned to them. But if every user is now made up of one human being and one or several AI agents, that introduces new constraints these human-based systems need to account for.
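One way to picture the problem is a small Python sketch in which an agent inherits its user's role but can only perform actions the user has explicitly delegated. This is an assumption about how such a system might look, not an established pattern or a Stytch feature; the role names, actions, and the `Principal` type are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical role-to-permission mapping for illustration.
ROLE_PERMISSIONS: dict[str, frozenset[str]] = {
    "member": frozenset({"book_flight", "view_statement", "refinance_mortgage"}),
    "viewer": frozenset({"view_statement"}),
}


@dataclass(frozen=True)
class Principal:
    """A human user, or an AI agent acting on that user's behalf."""

    user_id: str
    role: str
    is_agent: bool = False
    # Actions the human has explicitly delegated; only consulted for agents.
    delegated: frozenset[str] = frozenset()


def is_allowed(principal: Principal, action: str) -> bool:
    """Humans get their role's permissions; agents get role AND delegation."""
    if action not in ROLE_PERMISSIONS.get(principal.role, frozenset()):
        return False
    if principal.is_agent:
        return action in principal.delegated
    return True


if __name__ == "__main__":
    human = Principal(user_id="u1", role="member")
    agent = Principal(
        user_id="u1",
        role="member",
        is_agent=True,
        delegated=frozenset({"book_flight"}),
    )
    print(is_allowed(human, "refinance_mortgage"))
    print(is_allowed(agent, "book_flight"))
    print(is_allowed(agent, "refinance_mortgage"))
```

In this sketch the human can refinance the mortgage, while the agent can book flights but not refinance, because that action was never delegated. How those delegations get set and managed is exactly the open question raised above.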

Although we're not seeing security pitfalls from this yet (the technology is still very new), we're intrigued by the underlying trend. It introduces some interesting ideas around authorization and fraud detection that will only become more prevalent as the technology catches on.

Interested in learning more?

If you’d like to dive deeper into AI, check out Stytch’s piece in TechCrunch about securing AI applications. You can also follow Stytch and Orb on Twitter to get a heads up about future events.

About Stytch: Stytch is a developer-first authentication platform with solutions for B2C and B2B, including breach-resistant passwords, passwordless auth flows, MFA, SSO with SAML and OIDC, and fraud and risk protection. Learn more at stytch.com.

About Orb: Orb is a modern pricing platform that helps companies automate billing for seats, usage-based consumption, and everything in between. Learn more at withorb.com.

Our thanks to ChatGPT for drafting the initial version of this blog post from an AI-generated transcript.